Abstract

This is a review of the physics and cosmology of the cosmological constant. Focusing on recent developments, I present a pedagogical overview of cosmology in the presence of a cosmological constant, observational constraints on its magnitude, and the physics of a small (and potentially nonzero) vacuum energy.


1 Introduction

1.1 Truth and beauty

Science is rarely tidy. We ultimately seek a unified explanatory framework characterized by elegance and simplicity; along the way, however, our aesthetic impulses must occasionally be sacrificed to the desire to encompass the largest possible range of phenomena (i.e., to fit the data). It is often the case that an otherwise compelling theory, in order to be brought into agreement with observation, requires some apparently unnatural modification. Some such modifications may eventually be discarded as unnecessary once the phenomena are better understood; at other times, advances in our theoretical understanding will reveal that a certain theoretical compromise is only superficially distasteful, when in fact it arises as the consequence of a beautiful underlying structure.

General relativity is a paradigmatic example of a scientific theory of impressive power and simplicity. The cosmological constant, meanwhile, is a paradigmatic example of a modification, originally introduced [80] to help fit the data, which appears at least on the surface to be superfluous and unattractive. Its original role, to allow static homogeneous solutions to Einstein’s equations in the presence of matter, turned out to be unnecessary when the expansion of the universe was discovered [131], and there have been a number of subsequent episodes in which a nonzero cosmological constant was put forward as an explanation for a set of observations and later withdrawn when the observational case evaporated. Meanwhile, particle theorists have realized that the cosmological constant can be interpreted as a measure of the energy density of the vacuum. This energy density is the sum of a number of apparently unrelated contributions, each of magnitude much larger than the upper limits on the cosmological constant today; the question of why the observed vacuum energy is so small in comparison to the scales of particle physics has become a celebrated puzzle, although it is usually thought to be easier to imagine an unknown mechanism which would set it precisely to zero than one which would suppress it by just the right amount to yield an observationally accessible cosmological constant.

This checkered history has led to a certain reluctance to consider further invocations of a nonzero cosmological constant; however, recent years have provided the best evidence yet that this elusive quantity does play an important dynamical role in the universe. This possibility, although still far from a certainty, makes it worthwhile to review the physics and astrophysics of the cosmological constant (and its modern equivalent, the energy of the vacuum).

There are a number of other reviews of various aspects of the cosmological constant; in the present article I will outline the most relevant issues, but not try to be completely comprehensive, focusing instead on providing a pedagogical introduction and explaining recent advances. For astrophysical aspects, I did not try to duplicate much of the material in Carroll, Press and Turner [48], which should be consulted for numerous useful formulae and a discussion of several kinds of observational tests not covered here. Some earlier discussions include [85, 50, 221], and subsequent reviews include [58, 218, 246]. The classic discussion of the physics of the cosmological constant is by Weinberg [264], with more recent work discussed by [58, 218]. For introductions to cosmology, see [149, 160, 189].

1.2 Introducing the cosmological constant

Einstein’s original field equations are:

\[R_{\mu\nu} - \frac{1}{2}R\,g_{\mu\nu} = 8\pi G\,T_{\mu\nu}. \tag{1}\]

(I use conventions in which c = 1, and will also set ħ = 1 in most of the formulae to follow, but Newton’s constant will be kept explicit.) On very large scales the universe is spatially homogeneous and isotropic to an excellent approximation, which implies that its metric takes the Robertson-Walker form,

\[ds^2 = -dt^2 + a^2(t)\,R_0^2\left[\frac{dr^2}{1-kr^2} + r^2\,d\Omega^2\right], \tag{2}\]

where \(d\Omega^2 = d\theta^2 + \sin^2\theta\,d\phi^2\) is the metric on a two-sphere. The curvature parameter k takes on values +1, 0, or -1 for positively curved, flat, and negatively curved spatial sections, respectively. The scale factor characterizes the relative size of the spatial sections as a function of time; we have written it in a normalized form a(t) = R(t)/R₀, where the subscript 0 will always refer to a quantity evaluated at the present time. The redshift z undergone by radiation from a comoving object as it travels to us today is related to the scale factor at which it was emitted by

\[a = \frac{1}{1+z}. \tag{3}\]

The energy-momentum sources may be modeled as a perfect fluid, specified by an energy density ρ and isotropic pressure p in its rest frame. The energy-momentum tensor of such a fluid is

\[T_{\mu\nu} = (\rho + p)\,U_\mu U_\nu + p\,g_{\mu\nu}, \tag{4}\]

where \(U^\mu\) is the fluid four-velocity. To obtain a Robertson-Walker solution to Einstein’s equations, the rest frame of the fluid must be that of a comoving observer in the metric (2); in that case, Einstein’s equations reduce to the two Friedmann equations,

\[H^2 \equiv \left(\frac{\dot a}{a}\right)^2 = \frac{8\pi G}{3}\rho - \frac{k}{R_0^2 a^2}, \tag{5}\]

where we have introduced the Hubble parameter H ≡ ȧ/a, and

\[\frac{\ddot a}{a} = -\frac{4\pi G}{3}(\rho + 3p). \tag{6}\]

Einstein was interested in finding static (ȧ = 0) solutions, both due to his hope that general relativity would embody Mach’s principle that matter determines inertia, and simply to account for the astronomical data as they were understood at the time. (This account gives short shrift to the details of what actually happened; for historical background see [264].) A static universe with a positive energy density is compatible with (5) if the spatial curvature is positive (k = +1) and the density is appropriately tuned; however, (6) implies that ä will never vanish in such a spacetime if the pressure p is also nonnegative (which is true for most forms of matter, and certainly for ordinary sources such as stars and gas). Einstein therefore proposed a modification of his equations, to

\[R_{\mu\nu} - \frac{1}{2}R\,g_{\mu\nu} + \Lambda g_{\mu\nu} = 8\pi G\,T_{\mu\nu}, \tag{7}\]

where Λ is a new free parameter, the cosmological constant. Indeed, the left-hand side of (7) is the most general local, coordinate-invariant, divergenceless, symmetric, two-index tensor we can construct solely from the metric and its first and second derivatives. With this modification, the Friedmann equations become

\[H^2 = \frac{8\pi G}{3}\rho + \frac{\Lambda}{3} - \frac{k}{R_0^2 a^2} \tag{8}\]

and

\[\frac{\ddot a}{a} = -\frac{4\pi G}{3}(\rho + 3p) + \frac{\Lambda}{3}. \tag{9}\]

These equations admit a static solution with positive spatial curvature and all the parameters ρ, p, and Λ nonnegative. This solution is called the “Einstein static universe.”

The discovery by Hubble that the universe is expanding eliminated the empirical need for a static world model (although the Einstein static universe continues to thrive in the toolboxes of theorists, as a crucial step in the construction of conformal diagrams). The Einstein static universe has also been criticized on the grounds that it is unstable: any small deviation from a perfect balance between the terms in (9) will rapidly grow into a runaway departure from the static solution.

Pandora’s box, however, is not so easily closed. The disappearance of the original motivation for introducing the cosmological constant did not change its status as a legitimate addition to the gravitational field equations, or as a parameter to be constrained by observation. The only way to purge Λ from cosmological discourse would be to measure all of the other terms in (8) to sufficient precision to be able to conclude that the Λ/3 term is negligibly small in comparison, a feat which has to date been out of reach. As discussed below, there is better reason than ever before to believe that Λ is actually nonzero, and Einstein may not have blundered after all.

1.3 Vacuum energy

The cosmological constant Λ is a dimensionful parameter with units of \((\text{length})^{-2}\). From the point of view of classical general relativity, there is no preferred choice for what the length scale defined by Λ might be. Particle physics, however, brings a different perspective to the question. The cosmological constant turns out to be a measure of the energy density of the vacuum (the state of lowest energy), and although we cannot calculate the vacuum energy with any confidence, this identification allows us to consider the scales of various contributions to the cosmological constant [277, 33].

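Consider a single scalar field ϕ, with potential energy V(ϕ). The action can be written

\[S = \int d^4x\,\sqrt{-g}\left[-\frac{1}{2}g^{\mu\nu}\partial_\mu\phi\,\partial_\nu\phi - V(\phi)\right] \tag{10}\]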

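(where g is the determinant of the metric tensor \(g_{\mu\nu}\)), and the corresponding energy-momentum tensor is

\[T_{\mu\nu} = \partial_\mu\phi\,\partial_\nu\phi - \frac{1}{2}g_{\mu\nu}\,g^{\rho\sigma}\partial_\rho\phi\,\partial_\sigma\phi - g_{\mu\nu}V(\phi). \tag{11}\]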

In this theory, the configuration with the lowest energy density (if it exists) will be one in which there is no contribution from kinetic or gradient energy, implying \(\partial_\mu\phi = 0\), for which \(T_{\mu\nu} = -V(\phi_0)\,g_{\mu\nu}\), where ϕ₀ is the value of ϕ which minimizes V(ϕ). There is no reason in principle why V(ϕ₀) should vanish. The vacuum energy-momentum tensor can thus be written

\[T^{\rm vac}_{\mu\nu} = -\rho_{\rm vac}\,g_{\mu\nu}, \tag{12}\]

with ρ_vac in this example given by V(ϕ₀). (This form for the vacuum energy-momentum tensor can also be argued for on the more general grounds that it is the only Lorentz-invariant form for \(T^{\rm vac}_{\mu\nu}\).) The vacuum can therefore be thought of as a perfect fluid as in (4), with

\[p_{\rm vac} = -\rho_{\rm vac}. \tag{13}\]

The effect of an energy-momentum tensor of the form (12) is equivalent to that of a cosmological constant, as can be seen by moving the Λg_μν term in (7) to the right-hand side and setting

\[\rho_{\rm vac} = \rho_\Lambda \equiv \frac{\Lambda}{8\pi G}. \tag{14}\]

This equivalence is the origin of the identification of the cosmological constant with the energy of the vacuum. In what follows, I will use the terms “vacuum energy” and “cosmological constant” essentially interchangeably.

It is not necessary to introduce scalar fields to obtain a nonzero vacuum energy. The action for general relativity in the presence of a “bare” cosmological constant Λ₀ is

\[S = \frac{1}{16\pi G}\int d^4x\,\sqrt{-g}\,(R - 2\Lambda_0), \tag{15}\]

where R is the Ricci scalar. Extremizing this action (augmented by suitable matter terms) leads to the equations (7). Thus, the cosmological constant can be thought of as simply a constant term in the Lagrange density of the theory. Indeed, (15) is the most general covariant action we can construct out of the metric and its first and second derivatives, and is therefore a natural starting point for a theory of gravity.

Classically, then, the effective cosmological constant is the sum of a bare term Λ₀ and the potential energy V(ϕ), where the latter may change with time as the universe passes through different phases. Quantum mechanics adds another contribution, from the zero-point energies associated with vacuum fluctuations. Consider a simple harmonic oscillator, i.e. a particle moving in a one-dimensional potential of the form \(V(x) = {\textstyle{1 \over 2}}{\omega ^2}{x^2}\). Classically, the “vacuum” for this system is the state in which the particle is motionless and at the minimum of the potential (x = 0), for which the energy in this case vanishes. Quantum-mechanically, however, the uncertainty principle forbids us from isolating the particle both in position and momentum, and we find that the lowest energy state has an energy \({E_0} = {\textstyle{1 \over 2}}\hbar \omega \) (where I have temporarily re-introduced explicit factors of ħ for clarity). Of course, in the absence of gravity either system actually has a vacuum energy which is completely arbitrary; we could add any constant to the potential (including, for example, \( - \frac{1}{2}\hbar \omega \)) without changing the theory. It is important, however, that the zero-point energy depends on the system, in this case on the frequency ω.

A precisely analogous situation holds in field theory. A (free) quantum field can be thought of as a collection of an infinite number of harmonic oscillators in momentum space. Formally, the zero-point energy of such an infinite collection will be infinite. (See [264, 48] for further details.) If, however, we discard the very high-momentum modes on the grounds that we trust our theory only up to a certain ultraviolet momentum cutoff \(k_{\max}\), we find that the resulting energy density is of the form

\[\rho_{\rm vac} \sim \hbar\,k_{\max}^4. \tag{16}\]

This answer could have been guessed by dimensional analysis; the numerical constants which have been neglected will depend on the precise theory under consideration. Again, in the absence of gravity this energy has no effect, and is traditionally discarded (by a process known as “normal-ordering”). However, gravity does exist, and the actual value of the vacuum energy has important consequences. (And the vacuum fluctuations themselves are very real, as evidenced by the Casimir effect [49].)

The net cosmological constant, from this point of view, is the sum of a number of apparently disparate contributions, including potential energies from scalar fields and zero-point fluctuations of each field theory degree of freedom, as well as a bare cosmological constant Λ₀. Unlike the last of these, in the first two cases we can at least make educated guesses at the magnitudes. In the Weinberg-Salam electroweak model, the phases of broken and unbroken symmetry are distinguished by a potential energy difference set by the scale \(M_{\rm EW} \sim 200\) GeV (where 1 GeV = \(1.6\times10^{-3}\) erg); the universe is in the broken-symmetry phase during our current low-temperature epoch, and is believed to have been in the symmetric phase at sufficiently high temperatures at early times. The effective cosmological constant is therefore different in the two epochs; absent some form of prearrangement, we would naturally expect a contribution to the vacuum energy today of order

\[\rho_\Lambda^{\rm EW} \sim (200~{\rm GeV})^4. \tag{17}\]

Similar contributions can arise even without invoking “fundamental” scalar fields. In the strong interactions, chiral symmetry is believed to be broken by a nonzero expectation value of the quark bilinear \(\bar{q}q\) (which is itself a scalar, although constructed from fermions). In this case the energy difference between the symmetric and broken phases is of order the QCD scale \(M_{\rm QCD} \sim 0.3\) GeV, and we would expect a corresponding contribution to the vacuum energy of order

\[\rho_\Lambda^{\rm QCD} \sim (0.3~{\rm GeV})^4. \tag{18}\]

These contributions are joined by those from any number of unknown phase transitions in the early universe, such as a possible contribution from grand unification of order \(M_{\rm GUT} \sim 10^{16}\) GeV. In the case of vacuum fluctuations, we should choose our cutoff at the energy past which we no longer trust our field theory. If we are confident that we can use ordinary quantum field theory all the way up to the Planck scale \(M_{\rm Pl} = (8\pi G)^{-1/2} \sim 10^{18}\) GeV, we expect a contribution of order

\[\rho_\Lambda^{\rm Pl} \sim (10^{18}~{\rm GeV})^4. \tag{19}\]

Field theory may fail earlier, although quantum gravity is the only reason we have to believe it will fail at any specific scale.

As we will discuss later, cosmological observations imply

\[\rho_\Lambda^{\rm (obs)} \lesssim (10^{-12}~{\rm GeV})^4, \tag{20}\]

much smaller than any of the individual effects listed above. The ratio of (19) to (20) is the origin of the famous discrepancy of 120 orders of magnitude between the theoretical and observational values of the cosmological constant. There is no obstacle to imagining that all of the large and apparently unrelated contributions listed add together, with different signs, to produce a net cosmological constant consistent with the limit (20), other than the fact that it seems ridiculous. We know of no special symmetry which could enforce a vanishing vacuum energy while remaining consistent with the known laws of physics; this conundrum is the “cosmological constant problem”. In Section 4 we will discuss a number of issues related to this puzzle, which at this point remains one of the most significant unsolved problems in fundamental physics.
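The "120 orders of magnitude" is simple arithmetic on the estimates (17) through (20). The following Python sketch assumes, as those estimates do, that each contribution scales as the fourth power of its characteristic energy (with ħ = c = 1) and takes 10⁻¹² GeV as the observed scale; the names `SCALES_GEV` and `orders_of_magnitude` are illustrative only.

```python
import math

# Characteristic energy scales (GeV) quoted in the text; each is assumed to
# contribute a vacuum energy density of order (scale)^4 in units hbar = c = 1.
SCALES_GEV = {"QCD": 0.3, "electroweak": 200.0, "Planck": 1e18}
OBSERVED_SCALE_GEV = 1e-12  # observational upper limit, rho_obs ~ (1e-12 GeV)^4


def orders_of_magnitude(scale_gev, observed_gev=OBSERVED_SCALE_GEV):
    """Return log10(rho_theory / rho_obs) for rho ~ (energy scale)^4."""
    return 4.0 * math.log10(scale_gev / observed_gev)


for name, scale in SCALES_GEV.items():
    print(f"{name:12s} {orders_of_magnitude(scale):6.1f}")
```

This prints roughly 45.9, 57.2 and 120.0; even the smallest of these "natural" contributions overshoots the observational limit by more than forty orders of magnitude.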

2 Cosmology with a Cosmological Constant

2.1 Cosmological parameters

From the Friedmann equation (5) (where henceforth we take the effects of a cosmological constant into account by including the vacuum energy density ρ_Λ into the total density ρ), for any value of the Hubble parameter H there is a critical value of the energy density such that the spatial geometry is flat (k = 0):

\[\rho_{\rm crit} \equiv \frac{3H^2}{8\pi G}. \tag{21}\]

It is often most convenient to measure the total energy density in terms of the critical density, by introducing the density parameter

\[\Omega \equiv \frac{8\pi G}{3H^2}\,\rho = \frac{\rho}{\rho_{\rm crit}}. \tag{22}\]

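One useful feature of this parameterization is a direct connection between the value of Ω and the spatial geometry:

\[\Omega < 1 \leftrightarrow k = -1~(\text{open}), \qquad \Omega = 1 \leftrightarrow k = 0~(\text{flat}), \qquad \Omega > 1 \leftrightarrow k = +1~(\text{closed}). \tag{23}\]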

[Keep in mind that some references still use “Ω” to refer strictly to the density parameter in matter, even in the presence of a cosmological constant; with this definition (23) no longer holds.]

In general, the energy density ρ will include contributions from various distinct components. From the point of view of cosmology, the relevant feature of each component is how its energy density evolves as the universe expands. Fortunately, it is often (although not always) the case that individual components i have very simple equations of state of the form

\[p_i = w_i\,\rho_i, \tag{24}\]

with w_i a constant. Plugging this equation of state into the energy-momentum conservation equation \(\nabla_\mu T^{\mu\nu} = 0\), we find that the energy density has a power-law dependence on the scale factor,

\[\rho_i \propto a^{-n_i}, \tag{25}\]

where the exponent is related to the equation of state parameter by

\[n_i = 3(1 + w_i). \tag{26}\]

The density parameter in each component is defined in the obvious way,

\[\Omega_i \equiv \frac{8\pi G}{3H^2}\,\rho_i = \frac{\rho_i}{\rho_{\rm crit}}, \tag{27}\]

which has the useful property that

\[\frac{\Omega_i}{\Omega_j} \propto a^{-(n_i - n_j)}. \tag{28}\]

The simplest example of a component of this form is a set of massive particles with negligible relative velocities, known in cosmology as “dust” or simply “matter”. The energy density of such particles is given by their number density times their rest mass; as the universe expands, the number density is inversely proportional to the volume while the rest masses are constant, yielding \(\rho_M \propto a^{-3}\). For relativistic particles, known in cosmology as “radiation” (although any relativistic species counts, not only photons or even strictly massless particles), the energy density is the number density times the particle energy, and the latter is proportional to \(a^{-1}\) (redshifting as the universe expands); the radiation energy density therefore scales as \(\rho_R \propto a^{-4}\). Vacuum energy does not change as the universe expands, so \(\rho_\Lambda \propto a^0\); from (26) this implies w = -1, that is, a negative pressure (or positive tension) when the vacuum energy is positive. Finally, for some purposes it is useful to pretend that the \(-k a^{-2} R_0^{-2}\) term in (5) represents an effective “energy density in curvature”, and define \(\rho_k = -(3k/8\pi G R_0^2)\,a^{-2}\). We can define a corresponding density parameter

\[\Omega_k = -\frac{k}{R_0^2 a^2 H^2} = 1 - \Omega; \tag{29}\]

this relation is simply (5) divided by H². Note that the contribution from Ω_k is (for obvious reasons) not included in the definition of Ω. The usefulness of Ω_k is that it contributes to the expansion rate analogously to the honest density parameters Ω_i; we can write

\[H^2(a) = H_0^2 \sum_{i(k)} \Omega_{i0}\,a^{-n_i}, \tag{30}\]

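where the notation \(\sum_{i(k)}\) reflects the fact that the sum includes Ω_k in addition to the various components of Ω = ∑_i Ω_i. The most popular equations of state for cosmological energy sources can thus be summarized as follows:

\[\begin{array}{lcc} & w_i & n_i \\ \hline \text{Radiation} & 1/3 & 4 \\ \text{Matter} & 0 & 3 \\ \text{Curvature} & -1/3 & 2 \\ \text{Vacuum} & -1 & 0 \end{array}\]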

The ranges of values of the Ω_i’s which are allowed in principle (as opposed to constrained by observation) will depend on a complete theory of the matter fields, but lacking that we may still invoke energy conditions to get a handle on what constitutes sensible values. The most appropriate condition is the dominant energy condition (DEC), which states that \(T_{\mu\nu}l^\mu l^\nu \ge 0\), and \(T^{\mu}{}_{\nu}l^\nu\) is non-spacelike, for any null vector \(l^\mu\); this implies that energy does not flow faster than the speed of light [117]. For a perfect-fluid energy-momentum tensor of the form (4), these two requirements imply that ρ + p ≥ 0 and |ρ| ≥ |p|, respectively. Thus, either the density is positive and at least as large in magnitude as the pressure, or the density is negative and equal in magnitude to a compensating positive pressure; in terms of the equation-of-state parameter w, we have either positive ρ and |w| ≤ 1 or negative ρ and w = -1. That is, a negative energy density is allowed only if it is in the form of vacuum energy. (We have actually modified the conventional DEC somewhat, by using only null vectors \(l^\mu\) rather than null or timelike vectors; the traditional condition would rule out a negative cosmological constant, which there is no physical reason to do.)
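The two perfect-fluid inequalities are simple enough to check mechanically. This minimal sketch (the helper name `satisfies_dec` is hypothetical) tests ρ + p ≥ 0 and |ρ| ≥ |p| for p = wρ, and reproduces the conclusion that a negative energy density survives only for w = -1. The w = 2 entry is an illustrative value with |w| > 1, not a component discussed in the text.

```python
def satisfies_dec(rho, p):
    """Null-vector form of the dominant energy condition for a perfect fluid:
    rho + p >= 0 and |rho| >= |p|."""
    return rho + p >= 0 and abs(rho) >= abs(p)


# Equation-of-state parameters w = p / rho; the last entry is illustrative only.
W = {"radiation": 1.0 / 3.0, "matter": 0.0, "vacuum": -1.0, "w = 2": 2.0}

for name, w in W.items():
    for rho in (1.0, -1.0):
        verdict = "allowed" if satisfies_dec(rho, w * rho) else "excluded"
        print(f"{name:10s} rho = {rho:+.0f}: {verdict}")
```

Only the vacuum row is allowed for both signs of ρ, which is the statement that a negative cosmological constant is permitted while other negative-energy fluids are not.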

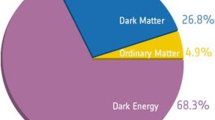

There are good reasons to believe that the energy density in radiation today is much less than that in matter. Photons, which are readily detectable, contribute \(\Omega_\gamma \sim 5\times10^{-5}\), mostly in the 2.73 K cosmic microwave background [211, 87, 225]. If neutrinos are sufficiently low mass as to be relativistic today, conventional scenarios predict that they contribute approximately the same amount [149]. In the absence of sources which are even more exotic, it is therefore useful to parameterize the universe today by the values of ΩM and ΩΛ, with Ωk = 1 - ΩM - ΩΛ, keeping the possibility of surprises always in mind.
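With this parameterization, the expansion rate (30) can be evaluated directly. The following Python sketch uses the hypothetical name `hubble_rate` and returns H in whatever units H₀ is supplied in. The optional radiation term is included only to show, via (28), why a radiation density of order Ω_γ ∼ 5 × 10⁻⁵ matters at early times but not today.

```python
import math


def hubble_rate(a, h0, omega_m, omega_lambda, omega_r=0.0):
    """H(a) from H^2 = H0^2 * sum_i Omega_i0 * a**(-n_i).

    Curvature enters as Omega_k0 = 1 - Omega_m - Omega_lambda - Omega_r
    with n = 2; radiation has n = 4, matter n = 3, vacuum energy n = 0.
    """
    omega_k = 1.0 - omega_m - omega_lambda - omega_r
    e2 = omega_r * a**-4 + omega_m * a**-3 + omega_k * a**-2 + omega_lambda
    return h0 * math.sqrt(e2)


# Radiation and matter densities are equal where Omega_r / a^4 = Omega_m / a^3.
omega_m, omega_r = 0.3, 5e-5
a_eq = omega_r / omega_m
print(f"a_eq = {a_eq:.2e}, z_eq = {1.0 / a_eq - 1.0:.0f}")
```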

One way to characterize a specific Friedmann-Robertson-Walker model is by the values of the Hubble parameter and the various energy densities ρ_i. (Of course, reconstructing the history of such a universe also requires an understanding of the microphysical processes which can exchange energy between the different components.) It may be difficult, however, to directly measure the different contributions to ρ, and it is therefore useful to consider extracting these quantities from the behavior of the scale factor as a function of time. A traditional measure of the evolution of the expansion rate is the deceleration parameter

\[\begin{aligned} q_0 &\equiv -\left.\frac{a\ddot a}{\dot a^2}\right|_{t_0} = \frac{1}{2}\sum_i \Omega_{i0}\,(1 + 3w_i) \\ &= \frac{1}{2}\Omega_{M0} - \Omega_{\Lambda0}, \end{aligned} \tag{33}\]

where in the last line we have assumed that the universe is dominated by matter and the cosmological constant. Under the assumption that ΩΛ = 0, measuring q0 provides a direct measurement of the current density parameter ΩM0; however, once ΩΛ is admitted as a possibility there is no single parameter which characterizes various universes, and for most purposes it is more convenient to simply quote experimental results directly in terms of ΩM and ΩΛ . [Even this parameterization, of course, bears a certain theoretical bias which may not be justified; ultimately, the only unbiased method is to directly quote limits on a(t).]
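The deceleration parameter is a weighted sum over components, and it is straightforward to evaluate for any mix. In this sketch (function names are hypothetical) each component is given as an (Ω_i, w_i) pair. Since 1 + 3w = n - 2, the same sum explains the remark that sources with n > 2 decelerate and those with n < 2 accelerate.

```python
def deceleration_parameter(components):
    """q = (1/2) * sum_i Omega_i * (1 + 3 w_i) for (Omega_i, w_i) pairs.

    Equivalently (1/2) * sum_i Omega_i * (n_i - 2), so components with n > 2
    decelerate and those with n < 2 accelerate; curvature (n = 2) drops out.
    """
    return 0.5 * sum(omega * (1.0 + 3.0 * w) for omega, w in components)


def q0_matter_lambda(omega_m, omega_lambda):
    """Special case with matter (w = 0) and vacuum energy (w = -1)."""
    return deceleration_parameter([(omega_m, 0.0), (omega_lambda, -1.0)])


print(q0_matter_lambda(1.0, 0.0))  # Einstein-de Sitter: decelerating
print(q0_matter_lambda(0.3, 0.7))  # vacuum-dominated mix: accelerating
```

The first line gives q₀ = 0.5 and the second roughly -0.55. Note that (0.3, 0.7) and, say, (0.9, 1.0) have the same q₀, illustrating why q₀ alone cannot separate ΩM from ΩΛ.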

Notice that positive-energy-density sources with n > 2 cause the universe to decelerate while n < 2 leads to acceleration; the more rapidly energy density redshifts away, the greater the tendency towards universal deceleration. An empty universe (Ω = 0, Ωk = 1) expands linearly with time; sometimes called the “Milne universe”, such a spacetime is really flat Minkowski space in an unusual time-slicing.

2.2 Model universes and their fates

In the remainder of this section we will explore the behavior of universes dominated by matter and vacuum energy, Ω = ΩM + ΩΛ = 1 - Ωk. According to (33), a positive cosmological constant accelerates the universal expansion, while a negative cosmological constant and/or ordinary matter tend to decelerate it. The relative contributions of these components change with time; according to (28) we have

\[\frac{\Omega_\Lambda}{\Omega_M} \propto a^3.\]

For ΩΛ < 0, the universe will always recollapse to a Big Crunch, either because there is a sufficiently high matter density or due to the eventual domination of the negative cosmological constant. For ΩΛ > 0 the universe will expand forever unless there is sufficient matter to cause recollapse before ΩΛ becomes dynamically important. For ΩΛ = 0 we have the familiar situation in which ΩM ≤ 1 universes expand forever and ΩM > 1 universes recollapse; notice, however, that in the presence of a cosmological constant there is no necessary relationship between spatial curvature and the fate of the universe. (Furthermore, we cannot reliably determine that the universe will expand forever by any set of measurements of ΩΛ and ΩM; even if we seem to live in a parameter space that predicts eternal expansion, there is always the possibility of a future phase transition which could change the equation of state of one or more of the components.)

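Given ΩM, the values of ΩΛ for which the universe will expand forever are given by

\[\Omega_\Lambda \ge \begin{cases} 0, & 0 \le \Omega_M \le 1, \\[4pt] 4\Omega_M \cos^3\left[\dfrac{1}{3}\cos^{-1}\left(\dfrac{1-\Omega_M}{\Omega_M}\right) + \dfrac{4\pi}{3}\right], & \Omega_M > 1. \end{cases}\]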

Conversely, if the cosmological constant is sufficiently large compared to the matter density, the universe has always been accelerating, and rather than a Big Bang its early history consisted of a period of gradually slowing contraction to a minimum radius before beginning its current expansion. The criterion for there to have been no singularity in the past is

\[\Omega_\Lambda \ge 4\Omega_M\,\mathrm{coss}^3\left[\frac{1}{3}\,\mathrm{coss}^{-1}\left(\frac{1-\Omega_M}{\Omega_M}\right)\right],\]

where “coss” represents cosh when ΩM < 1/2, and cos when ΩM > 1/2.
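Both boundaries in the (ΩM, ΩΛ) plane can be evaluated directly. The Python sketch below implements the two criteria as written above, choosing cosh or cos for "coss" according to whether ΩM is below or above 1/2; the function names are hypothetical, and the no-singularity function assumes ΩM > 0.

```python
import math


def lambda_for_eternal_expansion(omega_m):
    """Smallest Omega_Lambda for which a matter + Lambda universe expands forever."""
    if omega_m <= 1.0:
        return 0.0
    angle = math.acos((1.0 - omega_m) / omega_m) / 3.0 + 4.0 * math.pi / 3.0
    return 4.0 * omega_m * math.cos(angle) ** 3


def lambda_for_no_big_bang(omega_m):
    """Omega_Lambda above which there is no initial singularity (omega_m > 0).

    "coss" is cosh for Omega_m < 1/2 and cos for Omega_m > 1/2; both
    branches give 4 * Omega_m = 2 at Omega_m = 1/2.
    """
    x = (1.0 - omega_m) / omega_m
    if omega_m < 0.5:
        c = math.cosh(math.acosh(x) / 3.0)
    else:
        c = math.cos(math.acos(x) / 3.0)
    return 4.0 * omega_m * c ** 3


for om in (0.3, 1.0, 2.0):
    print(om, lambda_for_eternal_expansion(om), lambda_for_no_big_bang(om))
```

For ΩM = 0.3 the no-singularity boundary sits near ΩΛ ≈ 1.7, and as ΩM → 0 it approaches ΩΛ = 1, the empty de Sitter case.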

The dynamics of universes with Ω = ΩM + ΩΛ are summarized in Figure 1, in which the arrows indicate the evolution of these parameters in an expanding universe. (In a contracting universe they would be reversed.) This is not a true phase-space plot, despite the superficial similarities. One important difference is that a universe passing through one point can pass through the same point again but moving backwards along its trajectory, by first going to infinity and then turning around (recollapse).

Figure 1 includes three fixed points, at (ΩM, ΩΛ) equal to (0,0), (0,1), and (1, 0). The attractor among these at (0,1) is known as de Sitter space — a universe with no matter density, dominated by a cosmological constant, and with scale factor growing exponentially with time. The fact that this point is an attractor on the diagram is another way of understanding the cosmological constant problem. A universe with initial conditions located at a generic point on the diagram will, after several expansion times, flow to de Sitter space if it began above the recollapse line, and flow to infinity and back to recollapse if it began below that line. Since our universe has expanded by many orders of magnitude since early times, it must have begun at a non-generic point in order not to have evolved either to de Sitter space or to a Big Crunch. The only other two fixed points on the diagram are the saddle point at (ΩM, ΩΛ) = (0, 0), corresponding to an empty universe, and the repulsive fixed point at (ΩM, ΩΛ) = (1, 0), known as the Einstein-de Sitter solution. Since our universe is not empty, the favored solution from this combination of theoretical and empirical arguments is the Einstein-de Sitter universe. The inflationary scenario [113, 159, 6] provides a mechanism whereby the universe can be driven to the line ΩM + ΩΛ = 1 (spatial flatness), so Einstein-de Sitter is a natural expectation if we imagine that some unknown mechanism sets Λ = 0. As discussed below, the observationally favored universe is located on this line but away from the fixed points, near (ΩM, ΩΛ) = (0.3,0.7). It is fair to conclude that naturalness arguments have a somewhat spotty track record at predicting cosmological parameters.
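The flow toward the de Sitter attractor can be made concrete by evaluating ΩM and ΩΛ at different scale factors, using ρM ∝ a⁻³, ρΛ = const and ρk ∝ a⁻². In this sketch the function name `omegas_at` is hypothetical and the present-day values (0.3, 0.7) are simply the observationally favored point quoted above.

```python
def omegas_at(a, omega_m0, omega_lambda0):
    """(Omega_M, Omega_Lambda) at scale factor a, normalized so that a = 1 today."""
    omega_k0 = 1.0 - omega_m0 - omega_lambda0
    e2 = omega_m0 * a**-3 + omega_k0 * a**-2 + omega_lambda0   # (H / H0)^2
    return omega_m0 * a**-3 / e2, omega_lambda0 / e2


for a in (0.01, 0.1, 1.0, 10.0, 100.0):
    om, ol = omegas_at(a, 0.3, 0.7)
    print(f"a = {a:7.2f}   Omega_M = {om:.4f}   Omega_Lambda = {ol:.4f}")
```

Along this flat trajectory the universe starts very close to the Einstein-de Sitter point (1, 0) and ends arbitrarily close to de Sitter (0, 1); the present epoch, with comparable ΩM and ΩΛ, occupies a brief stretch in between.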

2.3 Surveying the universe

The lookback time from the present day to an object at redshift z* is given by

\[t_0 - t_* = \int_{t_*}^{t_0} dt = \int_{(1+z_*)^{-1}}^{1} \frac{da}{a\,H(a)}, \tag{36}\]

with H(a) given by (30). The age of the universe is obtained by taking the z* → ∞ (t* → 0) limit. For Ω = ΩM = 1, this yields the familiar answer \(t_0 = \frac{2}{3}H_0^{-1}\); the age decreases as ΩM is increased, and increases as ΩΛ is increased. Figure 2 shows the expansion history of the universe for different values of these parameters and H₀ fixed; it is clear how the acceleration caused by ΩΛ leads to an older universe. There are analytic approximation formulas which estimate (36) in various regimes [264, 149, 48], but generally the integral is straightforward to perform numerically.
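As noted, (36) is easy to integrate numerically. The sketch below works in units of 1/H₀ for matter, vacuum energy and curvature only, and uses composite Simpson's rule, which is a generic quadrature choice made for this illustration rather than a method from the text; all function names are hypothetical.

```python
import math


def simpson(f, lo, hi, n=4000):
    """Composite Simpson's rule on [lo, hi] with an even number n of intervals."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    total += sum((4 if i % 2 else 2) * f(lo + i * h) for i in range(1, n))
    return total * h / 3.0


def e_of_a(a, omega_m, omega_lambda):
    """H(a) / H0 for matter, vacuum energy and curvature."""
    omega_k = 1.0 - omega_m - omega_lambda
    return math.sqrt(omega_m / a**3 + omega_k / a**2 + omega_lambda)


def lookback_time(z, omega_m, omega_lambda):
    """t0 - t(z) in units of 1/H0: integral of da / (a H) from 1/(1+z) to 1."""
    return simpson(lambda a: 1.0 / (a * e_of_a(a, omega_m, omega_lambda)),
                   1.0 / (1.0 + z), 1.0)


def age(omega_m, omega_lambda):
    """Age t0 in units of 1/H0 (the z -> infinity limit); needs omega_m > 0."""
    def integrand(a):
        # 1 / (a E(a)) behaves like sqrt(a / omega_m) -> 0 as a -> 0
        return 0.0 if a == 0.0 else 1.0 / (a * e_of_a(a, omega_m, omega_lambda))
    return simpson(integrand, 0.0, 1.0)


for om, ol in ((1.0, 0.0), (0.3, 0.0), (0.3, 0.7)):
    print(f"Omega_M = {om}, Omega_Lambda = {ol}: H0 t0 = {age(om, ol):.3f}")
```

The Einstein-de Sitter case recovers H₀t₀ = 2/3, while the flat (0.3, 0.7) model gives about 0.96, showing the older universe produced by ΩΛ.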

In a generic curved spacetime, there is no preferred notion of the distance between two objects. Robertson-Walker spacetimes have preferred foliations, so it is possible to define sensible notions of the distance between comoving objects, those whose worldlines are normal to the preferred slices. Placing ourselves at r = 0 in the coordinates defined by (2), the coordinate distance r to another comoving object is independent of time. It can be converted to a physical distance at any specified time t* by multiplying by the scale factor R₀a(t*), yielding a number which will of course change as the universe expands. However, intervals along spacelike slices are not accessible to observation, so it is typically more convenient to use distance measures which can be extracted from observable quantities. These include the luminosity distance,

\[d_L \equiv \sqrt{\frac{L}{4\pi F}}, \tag{37}\]

where L is the intrinsic luminosity and F the measured flux; the proper-motion distance,

\[d_M \equiv \frac{u}{\dot\theta}, \tag{38}\]

where u is the transverse proper velocity and \(\dot\theta\) the observed angular velocity; and the angular-diameter distance,

\[d_A \equiv \frac{D}{\theta}, \tag{39}\]

where D is the proper size of the object and θ its apparent angular size. All of these definitions reduce to the usual notion of distance in a Euclidean space. In a Robertson-Walker universe, the proper-motion distance turns out to equal the physical distance along a spacelike slice at t = t₀:

\[d_M = R_0\,r. \tag{40}\]

The three measures are related by

\[d_L = (1+z)\,d_M = (1+z)^2\,d_A, \tag{41}\]

so any one can be converted to any other for sources of known redshift.

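The proper-motion distance between sources at redshift z₁ and z₂ can be computed by using ds² = 0 along a light ray, where ds² is given by (2). We have

\[d_M(z_1, z_2) = \frac{1}{H_0\sqrt{|\Omega_{k0}|}}\,\mathrm{sinn}\left[H_0\sqrt{|\Omega_{k0}|}\int_{(1+z_2)^{-1}}^{(1+z_1)^{-1}} \frac{da}{a^2 H(a)}\right], \tag{42}\]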

where we have used (5) to solve for \({R_0} = 1/({H_0}\sqrt {|{\Omega _{k0}}|} )\), H(a) is again given by (30), and “sinn(x)” denotes sinh(x) when Ωk0 > 0, sin(x) when Ωk0 < 0, and x when Ωk0 = 0. An analytic approximation formula can be found in [193]. Note that, for large redshifts, the dependence of the various distance measures on z is not necessarily monotonic.
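The chain from (30) to (42) and (41) is straightforward to evaluate numerically. This Python sketch works in units with c = H₀ = 1, measures distances from the observer (z₁ = 0), uses Simpson's rule (again a generic quadrature choice for this illustration), and picks the branch of "sinn" from the sign of Ωk0. All function names are hypothetical.

```python
import math


def simpson(f, lo, hi, n=2000):
    """Composite Simpson's rule on [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    total += sum((4 if i % 2 else 2) * f(lo + i * h) for i in range(1, n))
    return total * h / 3.0


def e_of_a(a, omega_m, omega_lambda):
    """H(a) / H0 for matter, vacuum energy and curvature."""
    omega_k = 1.0 - omega_m - omega_lambda
    return math.sqrt(omega_m / a**3 + omega_k / a**2 + omega_lambda)


def proper_motion_distance(z, omega_m, omega_lambda):
    """d_M from the observer (z1 = 0) to redshift z, in units of c / H0."""
    omega_k = 1.0 - omega_m - omega_lambda
    chi = simpson(lambda a: 1.0 / (a * a * e_of_a(a, omega_m, omega_lambda)),
                  1.0 / (1.0 + z), 1.0)
    if abs(omega_k) < 1e-12:
        return chi                        # flat: sinn(x) = x
    s = math.sqrt(abs(omega_k))
    if omega_k > 0:
        return math.sinh(s * chi) / s     # open: sinn = sinh
    return math.sin(s * chi) / s          # closed: sinn = sin


def luminosity_distance(z, omega_m, omega_lambda):
    return (1.0 + z) * proper_motion_distance(z, omega_m, omega_lambda)


def angular_diameter_distance(z, omega_m, omega_lambda):
    return proper_motion_distance(z, omega_m, omega_lambda) / (1.0 + z)


for z in (0.5, 1.0, 2.0):
    print(z, luminosity_distance(z, 1.0, 0.0), luminosity_distance(z, 0.3, 0.7))
```

For Einstein-de Sitter this reproduces d_M = 2(1 - 1/√(1+z)), and comparing `angular_diameter_distance` at z = 1.25 and z = 5 shows the non-monotonic behavior mentioned above. At fixed redshift, the (0.3, 0.7) model gives larger luminosity distances, which is what makes distant sources appear fainter.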

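The comoving volume element in a Robertson-Walker universe is given by

\[dV = \frac{R_0^3\,r^2}{\sqrt{1-kr^2}}\,dr\,d\Omega = \frac{d_M^2}{\sqrt{1+\Omega_{k0}H_0^2 d_M^2}}\,d(d_M)\,d\Omega, \tag{43}\]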

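which can be integrated analytically to obtain the volume out to a distance d_M:

\[V = \frac{2\pi}{H_0^2\,\Omega_{k0}}\left[d_M\sqrt{1+\Omega_{k0}H_0^2 d_M^2} - \frac{1}{H_0\sqrt{|\Omega_{k0}|}}\,\mathrm{sinn}^{-1}\!\left(H_0\sqrt{|\Omega_{k0}|}\,d_M\right)\right], \tag{44}\]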

where “sinn” is defined as before (42).
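The closed-form volume (44) can be coded directly, with the inverse of "sinn" taken as asinh for Ωk0 > 0 and asin for Ωk0 < 0. In this minimal sketch, the function name and the flat-limit tolerance are illustrative choices, and c = 1, so d_M is measured in the same unit as 1/H₀.

```python
import math


def comoving_volume(d_m, omega_k0, h0=1.0):
    """Comoving volume inside proper-motion distance d_m.

    Units: c = 1, so d_m is measured in the same length unit as 1 / h0.
    Closed models (omega_k0 < 0) need h0 * sqrt(|omega_k0|) * d_m <= 1.
    """
    if abs(omega_k0) < 1e-12:
        return 4.0 * math.pi * d_m**3 / 3.0           # flat (Euclidean) limit
    s = h0 * math.sqrt(abs(omega_k0))
    inv_sinn = math.asinh if omega_k0 > 0 else math.asin
    bracket = d_m * math.sqrt(1.0 + omega_k0 * h0**2 * d_m**2) - inv_sinn(s * d_m) / s
    return 2.0 * math.pi / (h0**2 * omega_k0) * bracket


for ok in (0.3, 0.0, -0.3):
    print(ok, comoving_volume(1.0, ok) / (4.0 * math.pi / 3.0))
```

A quick consistency check is that the numerical derivative of V with respect to d_M reproduces the integrand of (43) (times 4π), and that V approaches 4πd_M³/3 as Ωk0 → 0.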

2.4 Structure formation

The introduction of a cosmological constant changes the relationship between the matter density and expansion rate from what it would be in a matter-dominated universe, which in turn influences the growth of large-scale structure. The effect is similar to that of a nonzero spatial curvature, and complicated by hydrodynamic and nonlinear effects on small scales, but is potentially detectable through sufficiently careful observations.

The analysis of the evolution of structure is greatly abetted by the fact that perturbations start out very small (temperature anisotropies in the microwave background imply that the density perturbations were of order \(10^{-5}\) at recombination), and linearized theory is effective. In this regime, the fate of the fluctuations is in the hands of two competing effects: the tendency of self-gravity to make overdense regions collapse, and the tendency of test particles in the background expansion to move apart. Essentially, the effect of vacuum energy is to contribute to expansion but not to the self-gravity of overdensities, thereby acting to suppress the growth of perturbations [149, 189].

For sub-Hubble-radius perturbations in a cold dark matter component, a Newtonian analysis suffices. (We may of course be interested in super-Hubble-radius modes, or the evolution of interacting or relativistic particles, but the simple Newtonian case serves to illustrate the relevant physical effect.) If the energy density in dynamical matter is dominated by CDM, the linearized Newtonian evolution equation for the fractional density perturbation \(\delta \equiv \delta\rho_M/\rho_M\) is

\[\ddot\delta + 2\frac{\dot a}{a}\,\dot\delta = 4\pi G\rho_M\,\delta. \tag{45}\]

The second term represents an effective frictional force due to the expansion of the universe, characterized by a timescale \((\dot a/a)^{-1} = H^{-1}\), while the right-hand side is a forcing term with characteristic timescale \((4\pi G\rho_M)^{-1/2} \approx \Omega_M^{-1/2}H^{-1}\). Thus, when ΩM ≈ 1, these effects are in balance and CDM perturbations gradually grow; when ΩM dips appreciably below unity (as when curvature or vacuum energy begin to dominate), the friction term becomes more important and perturbation growth effectively ends. In fact (45) can be directly solved [119] to yield

\[\delta(a) \propto H(a)\int_0^a \frac{da'}{[a'H(a')]^3}, \tag{46}\]

where H(a) is given by (30). There exist analytic approximations to this formula [48], as well as analytic expressions for flat universes [81]. Note that this analysis is consistent only in the linear regime; once perturbations on a given scale become of order unity, they break away from the Hubble flow and begin to evolve as isolated systems.
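The integral solution (46) makes the suppression of growth easy to quantify. In this sketch (H₀ = 1 units, Simpson quadrature as a generic choice, hypothetical function names), the prefactor 5ΩM0/2 is a normalization assumed here so that the growing mode equals a while matter dominates; the text itself gives (46) only up to proportionality.

```python
import math


def simpson(f, lo, hi, n=4000):
    """Composite Simpson's rule on [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    total += sum((4 if i % 2 else 2) * f(lo + i * h) for i in range(1, n))
    return total * h / 3.0


def e_of_a(a, omega_m, omega_lambda):
    """H(a) / H0 for matter, vacuum energy and curvature."""
    omega_k = 1.0 - omega_m - omega_lambda
    return math.sqrt(omega_m / a**3 + omega_k / a**2 + omega_lambda)


def growth_factor(a, omega_m, omega_lambda):
    """Linear growing mode of the CDM perturbation equation via the integral solution.

    The prefactor 5 * omega_m / 2 is a normalization chosen for this sketch
    so that D(a) -> a while matter dominates (H0 = 1 units).
    """
    def integrand(x):
        # (x E(x))^-3 ~ (x / omega_m)^(3/2) -> 0 as x -> 0
        return 0.0 if x == 0.0 else (x * e_of_a(x, omega_m, omega_lambda)) ** -3
    return 2.5 * omega_m * e_of_a(a, omega_m, omega_lambda) * simpson(integrand, 0.0, a)


for om, ol in ((1.0, 0.0), (0.3, 0.0), (0.3, 0.7)):
    print(f"Omega_M = {om}, Omega_Lambda = {ol}: D(a=1) = {growth_factor(1.0, om, ol):.3f}")
```

Relative to Einstein-de Sitter, both the open (0.3, 0) model and the flat (0.3, 0.7) model show suppressed growth today. The open case is suppressed more strongly, because curvature begins to dominate earlier than vacuum energy does.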

3 Observational Tests

It has been suspected for some time now that there are good reasons to think that a cosmology with an appreciable cosmological constant is the best fit to what we know about the universe [188, 248, 148, 79, 95, 147, 151, 181, 245]. However, it is only very recently that the observational case has tightened up considerably, to the extent that, as the year 2000 dawns, more experts than not believe that there really is a positive vacuum energy exerting a measurable effect on the evolution of the universe. In this section I review the major approaches which have led to this shift.

3.1 Type Ia supernovae

The most direct and theory-independent way to measure the cosmological constant would be to actually determine the value of the scale factor as a function of time. Unfortunately, the appearance of Ωk in formulae such as (42) renders this difficult. Nevertheless, with sufficiently precise information about the dependence of a distance measure on redshift we can disentangle the effects of spatial curvature, matter, and vacuum energy, and methods along these lines have been popular ways to try to constrain the cosmological constant.

Astronomers measure distance in terms of the “distance modulus” m - M, where m is the apparent magnitude of the source and M its absolute magnitude. The distance modulus is related to the luminosity distance via

$$m - M = 25 + 5\log_{10}\left[\frac{d_L}{1\ \mathrm{Mpc}}\right] . \tag{47}$$

Of course, it is easy to measure the apparent magnitude, but notoriously difficult to infer the absolute magnitude of a distant object. Methods to estimate the relative absolute luminosities of various kinds of objects (such as galaxies with certain characteristics) have been pursued, but most have been plagued by unknown evolutionary effects or simply large random errors [221].

Recently, significant progress has been made by using Type Ia supernovae as “standardizable candles”. Supernovae are rare — perhaps a few per century in a Milky-Way-sized galaxy — but modern telescopes allow observers to probe very deeply into small regions of the sky, covering a very large number of galaxies in a single observing run. Supernovae are also bright, and Type Ia’s in particular all seem to be of nearly uniform intrinsic luminosity (absolute magnitude M ∼ -19.5, typically comparable to the brightness of the entire host galaxy in which they appear) [36]. They can therefore be detected at high redshifts (z ∼ 1), allowing in principle a good handle on cosmological effects [236, 108].

The fact that all SNe Ia are of similar intrinsic luminosities fits well with our understanding of these events as explosions which occur when a white dwarf, onto which mass is gradually accreting from a companion star, crosses the Chandrasekhar limit and explodes. (It should be noted that our understanding of supernova explosions is in a state of development, and theoretical models are not yet able to accurately reproduce all of the important features of the observed events. See [274, 114, 121] for some recent work.) The Chandrasekhar limit is a nearly-universal quantity, so it is not a surprise that the resulting explosions are of nearly-constant luminosity. However, there is still a scatter of approximately 40% in the peak brightness observed in nearby supernovae, which can presumably be traced to differences in the composition of the white dwarf atmospheres. Even if we could collect enough data that statistical errors could be reduced to a minimum, the existence of such an uncertainty would cast doubt on any attempts to study cosmology using SNe Ia as standard candles.

Fortunately, the observed differences in peak luminosities of SNe Ia are very closely correlated with observed differences in the shapes of their light curves: Dimmer SNe decline more rapidly after maximum brightness, while brighter SNe decline more slowly [200, 213, 115]. There is thus a one-parameter family of events, and measuring the behavior of the light curve along with the apparent luminosity allows us to largely correct for the intrinsic differences in brightness, reducing the scatter from 40% to less than 15%, sufficient precision to distinguish between cosmological models. (It seems likely that the single parameter can be traced to the amount of ⁵⁶Ni produced in the supernova explosion; more nickel implies both a higher peak luminosity and a higher temperature and thus opacity, leading to a slower decline. It would be an exaggeration, however, to claim that this behavior is well-understood theoretically.)
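The idea of a one-parameter correction can be illustrated with a toy calculation. The sketch below generates synthetic supernovae whose peak absolute magnitude depends linearly on a decline-rate parameter plus random scatter, fits that linear trend by least squares, and removes it. The linear form, the slope of 0.8, the 0.1 mag intrinsic scatter and the decline-rate range are all hypothetical numbers chosen for illustration; they are not the calibrations used by either supernova team.

```python
import random
import statistics


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def standardize(peak_mags, decline_rates):
    """Remove the fitted magnitude/decline trend, referring every event to the mean decline rate."""
    _, slope = fit_line(decline_rates, peak_mags)
    ref = statistics.fmean(decline_rates)
    return [m - slope * (d - ref) for m, d in zip(peak_mags, decline_rates)]


# Synthetic sample (HYPOTHETICAL numbers): faster decline means a larger (dimmer) magnitude.
rng = random.Random(42)
decline = [rng.uniform(0.8, 1.8) for _ in range(200)]
peak = [-19.3 + 0.8 * (d - 1.1) + rng.gauss(0.0, 0.1) for d in decline]

raw_scatter = statistics.stdev(peak)
corrected_scatter = statistics.stdev(standardize(peak, decline))
print(f"scatter before: {raw_scatter:.2f} mag, after: {corrected_scatter:.2f} mag")
```

After the correction, the remaining scatter in this toy sample is close to the assumed intrinsic 0.1 mag, which is the sense in which measuring the light-curve shape lets the events be treated as standardizable candles.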

Following pioneering work reported in [180], two independent groups have undertaken searches for distant supernovae in order to measure cosmological parameters. Figure 3 shows the results for m - M vs. z for the High-Z Supernova Team [101, 223, 214, 102], and Figure 4 shows the equivalent results for the Supernova Cosmology Project [195, 196, 197]. Under the assumption that the energy density of the universe is dominated by matter and vacuum components, these data can be converted into limits on ΩM and ΩΛ, as shown in Figures 5 and 6.

Figure 3: Hubble diagram (distance modulus vs. redshift) from the High-Z Supernova Team [214]. The lines represent predictions from the cosmological models with the specified parameters. The lower plot indicates the difference between observed distance modulus and that predicted in an open-universe model.

Figure 4: Hubble diagram from the Supernova Cosmology Project [197]. The bottom plot shows the number of standard deviations of each point from the best-fit curve.

Figure 5: Constraints in the ΩM-ΩΛ plane from the High-Z Supernova Team [214].

Figure 6: Constraints in the ΩM-ΩΛ plane from the Supernova Cosmology Project [197].

It is clear that the confidence intervals in the ΩM-ΩΛ plane are consistent for the two groups, with somewhat tighter constraints obtained by the Supernova Cosmology Project, who have more data points. The surprising result is that both teams favor a positive cosmological constant, and strongly rule out the traditional (ΩM, ΩΛ) = (1,0) favorite universe. They are even inconsistent with an open universe with zero cosmological constant, given what we know about the matter density of the universe (see below).
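The model curves in Hubble diagrams of this kind come from the luminosity distance for a given $(\Omega_M, \Omega_\Lambda)$. A minimal sketch, assuming matter, vacuum energy and curvature only (radiation neglected), $d_L = (c/H_0)(1+z)\,S_k(\chi)$ with $\chi = \int_0^z dz'/E(z')$, $E(z) = [\Omega_M(1+z)^3 + \Omega_k(1+z)^2 + \Omega_\Lambda]^{1/2}$, $S_k$ equal to $\sinh$ or $\sin$ of $\sqrt{|\Omega_k|}\chi$ divided by $\sqrt{|\Omega_k|}$ for open or closed models, and $h = 0.71$ as adopted later in this section:

```python
import math

C_KM_S = 299792.458  # speed of light in km/s


def luminosity_distance_mpc(z, omega_m, omega_l, h=0.71, n=1000):
    """Luminosity distance in Mpc (z > 0) for matter + vacuum energy + curvature."""
    omega_k = 1.0 - omega_m - omega_l

    def inv_e(zp):
        return 1.0 / math.sqrt(omega_m * (1 + zp) ** 3 + omega_k * (1 + zp) ** 2 + omega_l)

    # Comoving distance in units of c/H0, by composite Simpson's rule (n even).
    step = z / n
    s = inv_e(0.0) + inv_e(z) + sum((4 if i % 2 else 2) * inv_e(i * step) for i in range(1, n))
    chi = s * step / 3.0
    # Curvature correction: sinh for open (omega_k > 0), sin for closed (omega_k < 0).
    if omega_k > 1e-12:
        chi = math.sinh(math.sqrt(omega_k) * chi) / math.sqrt(omega_k)
    elif omega_k < -1e-12:
        chi = math.sin(math.sqrt(-omega_k) * chi) / math.sqrt(-omega_k)
    hubble_distance = C_KM_S / (100.0 * h)  # c/H0 in Mpc
    return hubble_distance * (1 + z) * chi


def distance_modulus(z, omega_m, omega_l, h=0.71):
    """m - M = 25 + 5 log10(d_L / Mpc)."""
    return 25.0 + 5.0 * math.log10(luminosity_distance_mpc(z, omega_m, omega_l, h))


for om, ol in [(1.0, 0.0), (0.3, 0.0), (0.3, 0.7)]:
    print(f"Omega_M={om}, Omega_Lambda={ol}: m - M at z = 0.5 is {distance_modulus(0.5, om, ol):.2f}")
```

At fixed redshift the vacuum-dominated model gives the largest distance modulus, so its supernovae appear fainter than in either the $(1, 0)$ or the open $(0.3, 0)$ model, which is the sense of the deviation seen in the data.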

Given the significance of these results, it is natural to ask what level of confidence we should have in them. There are a number of potential sources of systematic error which have been considered by the two teams; see the original papers [223, 214, 197] for a thorough discussion. The two most worrisome possibilities are intrinsic differences between Type Ia supernovae at high and low redshifts [75, 212], and possible extinction via intergalactic dust [2, 3, 4, 226, 241]. (There is also the fact that intervening weak lensing can change the distance-magnitude relation, but this seems to be a small effect in realistic universes [123, 143].) Both effects have been carefully considered, and are thought to be unimportant, although a better understanding will be necessary to draw firm conclusions. Here, I will briefly mention some of the relevant issues.

As thermonuclear explosions of white dwarfs, Type Ia supernovae can occur in a wide variety of environments. Consequently, a simple argument against evolution is that the high-redshift environments, while chronologically younger, should be a subset of all possible low-redshift environments, which include regions that are “young” in terms of chemical and stellar evolution. Nevertheless, even a small amount of evolution could ruin our ability to reliably constrain cosmological parameters [75]. In their original papers [223, 214, 197], the supernova teams found impressive consistency in the spectral and photometric properties of Type Ia supernovae over a variety of redshifts and environments (e.g., in elliptical vs. spiral galaxies). More recently, however, Riess et al. [212] have presented tentative evidence for a systematic difference in the properties of high- and low-redshift supernovae, claiming that the risetimes (from initial explosion to maximum brightness) were longer in the high-redshift events. Apart from the issue of whether the existing data support this finding, it is not immediately clear whether such a difference is relevant to the distance determinations: first, because the risetime is not used in determining the absolute luminosity at peak brightness, and second, because a process which only affects the very early stages of the light curve is most plausibly traced to differences in the outer layers of the progenitor, which may have a negligible effect on the total energy output. Nevertheless, any indication of evolution could bring into question the fundamental assumptions behind the entire program. It is therefore essential to improve the quality of both the data and the theories so that these issues may be decisively settled.

Other than evolution, obscuration by dust is the leading concern about the reliability of the supernova results. Ordinary astrophysical dust does not obscure equally at all wavelengths, but scatters blue light preferentially, leading to the well-known phenomenon of “reddening”. Spectral measurements by the two supernova teams reveal a negligible amount of reddening, implying that any hypothetical dust must be a novel “grey” variety. This possibility has been investigated by a number of authors [2, 3, 4, 226, 241]. These studies have found that even grey dust is highly constrained by observations: first, it is likely to be intergalactic rather than within galaxies, or it would lead to additional dispersion in the magnitudes of the supernovae; and second, intergalactic dust would absorb ultraviolet/optical radiation and re-emit it at far infrared wavelengths, leading to stringent constraints from observations of the cosmological far-infrared background. Thus, while the possibility of obscuration has not been entirely eliminated, it requires a novel kind of dust which is already highly constrained (and may be convincingly ruled out by further observations).

According to the best of our current understanding, then, the supernova results indicating an accelerating universe seem likely to be trustworthy. Needless to say, however, the possibility of a heretofore neglected systematic effect looms menacingly over these studies. Future experiments, including a proposed satellite dedicated to supernova cosmology [154], will both help us improve our understanding of the physics of supernovae and allow a determination of the distance/redshift relation to sufficient precision to distinguish between the effects of a cosmological constant and those of more mundane astrophysical phenomena. In the meantime, it is important to obtain independent corroboration using other methods.

3.2 Cosmic microwave background

The discovery by the COBE satellite of temperature anisotropies in the cosmic microwave background [228] inaugurated a new era in the determination of cosmological parameters. To characterize the temperature fluctuations on the sky, we may decompose them into spherical harmonics,

$$\frac{\delta T}{T} = \sum_{lm} a_{lm}\,Y_{lm}(\theta, \phi) , \tag{48}$$

and express the amount of anisotropy at multipole moment l via the power spectrum,

$$C_l = \langle |a_{lm}|^2 \rangle . \tag{49}$$

Higher multipoles correspond to smaller angular separations on the sky, θ = 180°/l. Within any given family of models, Cl vs. l will depend on the parameters specifying the particular cosmology. Although the case is far from closed, evidence has been mounting in favor of a specific class of models — those based on Gaussian, adiabatic, nearly scale-free perturbations in a universe composed of baryons, radiation, and cold dark matter. (The inflationary universe scenario [113, 159, 6] typically predicts these kinds of perturbations.)
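The power spectrum is the variance of the harmonic coefficients at fixed $l$. A minimal sketch, assuming statistically isotropic Gaussian $a_{lm}$ for a real-valued sky (so $a_{l,-m} = (-1)^m a_{lm}^*$ and only $m \ge 0$ need to be drawn), of how $C_l$ is estimated from a single sky as $\hat C_l = \sum_m |a_{lm}|^2/(2l+1)$, and of the irreducible "cosmic variance" of relative size $\sqrt{2/(2l+1)}$ that this implies at low $l$:

```python
import math
import random
import statistics


def draw_alm(ell, c_ell, rng):
    """Gaussian a_lm for m = 0..l of a real sky with power c_ell (m < 0 follow by symmetry)."""
    alm = [complex(rng.gauss(0.0, math.sqrt(c_ell)), 0.0)]  # a_l0 is real
    sigma = math.sqrt(c_ell / 2.0)  # real and imaginary parts share the variance for m > 0
    alm += [complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for _ in range(ell)]
    return alm


def estimate_cl(ell, alm):
    """C_l estimate: (1 / (2l + 1)) * sum over m = -l..l of |a_lm|^2, using |a_{l,-m}| = |a_lm|."""
    power = abs(alm[0]) ** 2 + 2.0 * sum(abs(a) ** 2 for a in alm[1:])
    return power / (2 * ell + 1)


rng = random.Random(1)
ell, c_true = 10, 1.0
estimates = [estimate_cl(ell, draw_alm(ell, c_true, rng)) for _ in range(2000)]
print("mean:", statistics.fmean(estimates))
print("scatter:", statistics.stdev(estimates), "expected:", math.sqrt(2 / (2 * ell + 1)))
```

Averaged over many simulated skies the estimator recovers the input $C_l$, but any one sky scatters about it by roughly 30% at $l = 10$, which is why low multipoles constrain the parameters only weakly.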

Although the dependence of the $C_l$’s on the parameters can be intricate, nature has chosen not to test the patience of cosmologists, as one of the easiest features to measure (the location in $l$ of the first “Doppler peak”, an increase in power due to acoustic oscillations) provides one of the most direct handles on the cosmic energy density, one of the most interesting parameters. The first peak (the one at lowest $l$) corresponds to the angular scale subtended by the Hubble radius $H_{\rm CMB}^{-1}$ at the time when the CMB was formed (known variously as “decoupling”, “recombination”, or “last scattering”) [129]. The angular scale at which we observe this peak is tied to the geometry of the universe: in a negatively (positively) curved universe, photon paths diverge (converge), leading to a smaller (larger) apparent angular size as compared to a flat universe. Since the scale $H_{\rm CMB}^{-1}$ is set mostly by microphysics, this geometrical effect is dominant, and we can relate the spatial curvature as characterized by Ω to the observed peak in the CMB spectrum via [141, 138, 130]

$$l_{\rm peak} \sim 220\,\Omega^{-1/2} . \tag{50}$$

More details about the spectrum (height of the peak, features of the secondary peaks) will depend on other cosmological quantities, such as the Hubble constant and the baryon density [34, 128, 137, 276].
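For orientation, the approximate scaling usually quoted for the first peak, $l_{\rm peak} \sim 220\,\Omega^{-1/2}$, can be combined with $\theta \approx 180°/l$ to see how strongly curvature moves the peak. This is only a sketch: the prefactor 220 is itself approximate and shifts with the other parameters mentioned just above.

```python
import math


def first_peak_multipole(omega_total):
    """Rough first acoustic peak location, l_peak ~ 220 / sqrt(Omega) (an approximation)."""
    return 220.0 / math.sqrt(omega_total)


def multipole_to_degrees(ell):
    """Angular scale corresponding to multipole l, theta ~ 180 deg / l."""
    return 180.0 / ell


for omega in (1.0, 0.3):
    ell = first_peak_multipole(omega)
    print(f"Omega = {omega}: l_peak ~ {ell:.0f}, theta ~ {multipole_to_degrees(ell):.2f} deg")
```

A flat universe puts the peak near $l \approx 220$ (just under a degree), while an open model with $\Omega = 0.3$ moves it to $l \approx 400$, the location of the open-universe curve described for the 1998 data compilation.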

Figure 7 shows a summary of data as of 1998, with various experimental results consolidated into bins, along with two theoretical models. Since that time, the data have continued to accumulate (see for example [172, 171]), and the near future should see a wealth of new results of ever-increasing precision. It is clear from the figure that there is good evidence for a peak at approximately lpeak ∼ 200, as predicted in a spatially-flat universe. This result can be made more quantitative by fitting the CMB data to models with different values of ΩM and ΩΛ [35, 26, 164, 210, 72], or by combining the CMB data with other sources, such as supernovae or large-scale structure [268, 238, 102, 127, 237, 78, 38, 18]. Figure 8 shows the constraints from the CMB in the ΩM-ΩΛ plane, using data from the 1997 test flight of the BOOMERANG experiment [171]. (Although the data used to make this plot are essentially independent of those shown in the previous figure, the constraints obtained are nearly the same.) It is clear that the CMB data provide constraints which are complementary to those obtained using supernovae; the two approaches yield confidence contours which are nearly orthogonal in the ΩM-ΩΛ plane. The region of overlap is in the vicinity of (ΩM, ΩΛ) = (0.3,0.7), which we will see below is also consistent with other determinations.

Figure 7: CMB data (binned) and two theoretical curves: The model with a peak at l ∼ 200 is a flat matter-dominated universe, while the one with a peak at l ∼ 400 is an open matter-dominated universe. From [35].

Figure 8: Constraints in the ΩM-ΩΛ plane from the North American flight of the BOOMERANG microwave background balloon experiment. From [171].

3.3 Matter density

Many cosmological tests, such as the two just discussed, will constrain some combination of ΩM and ΩΛ. It is therefore useful to consider tests of ΩM alone, even if our primary goal is to determine ΩΛ. (In truth, it is also hard to constrain ΩM alone, as almost all methods actually constrain some combination of ΩM and the Hubble constant h = H0/(100 km/sec/Mpc); the HST Key Project on the extragalactic distance scale finds h = 0.71 ± 0.06 [175], which is consistent with other methods [88], and what I will assume below.)

For years, determinations of ΩM based on dynamics of galaxies and clusters have yielded values between approximately 0.1 and 0.4, noticeably larger than the density parameter in baryons as inferred from primordial nucleosynthesis, $\Omega_B = (0.019 \pm 0.001)h^{-2} \approx 0.04$ [224, 41], but noticeably smaller than the critical density. The last several years have witnessed a number of new methods being brought to bear on the question; the quantitative results have remained unchanged, but our confidence in them has increased greatly.

A thorough discussion of determinations of ΩM requires a review all its own, and good ones are available [66, 14, 247, 88, 206]. Here I will just sketch some of the important methods.

The traditional method to estimate the mass density of the universe is to “weigh” a cluster of galaxies, divide by its luminosity, and extrapolate the result to the universe as a whole. Although clusters are not representative samples of the universe, they are sufficiently large that such a procedure has a chance of working. Studies applying the virial theorem to cluster dynamics have typically obtained values ΩM = 0.2 ± 0.1 [45, 66, 14]. Although it is possible that the global value of M/L differs appreciably from its value in clusters, extrapolations from small scales do not seem to reach the critical density [17]. New techniques to weigh the clusters, including gravitational lensing of background galaxies [227] and temperature profiles of the X-ray gas [155], while not yet in perfect agreement with each other, reach essentially similar conclusions.

Rather than measuring the mass relative to the luminosity density, which may be different inside and outside clusters, we can also measure it with respect to the baryon density [269], which is very likely to have the same value in clusters as elsewhere in the universe, simply because there is no way to segregate the baryons from the dark matter on such large scales. Most of the baryonic mass is in the hot intracluster gas [97], and the fraction $f_{\rm gas}$ of total mass in this form can be measured either by direct observation of X-rays from the gas [173] or by distortions of the microwave background by scattering off hot electrons (the Sunyaev-Zeldovich effect) [46], typically yielding $0.1 \le f_{\rm gas} \le 0.2$. Since primordial nucleosynthesis provides a determination of $\Omega_B \sim 0.04$, these measurements imply

$$\Omega_M = \frac{\Omega_B}{f_{\rm gas}} = 0.3 \pm 0.1 , \tag{51}$$

consistent with the value determined from mass to light ratios.
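The arithmetic behind the baryon-fraction argument is short enough to spell out. The sketch assumes, as the text does, that clusters are fair samples of the universe and that the intracluster gas carries essentially all of the cluster baryons (so $\Omega_B/\Omega_M = f_{\rm gas}$), and uses $h = 0.71$:

```python
def omega_baryon(omega_b_h2, h):
    """Convert the nucleosynthesis value of Omega_B h^2 into Omega_B for a given h."""
    return omega_b_h2 / h**2


def omega_matter_from_gas_fraction(omega_b, f_gas):
    """Fair-sample assumption: Omega_B / Omega_M = f_gas, so Omega_M = Omega_B / f_gas."""
    return omega_b / f_gas


om_b = omega_baryon(0.019, 0.71)  # about 0.038
for f_gas in (0.2, 0.1):
    print(f"f_gas = {f_gas}: Omega_M ~ {omega_matter_from_gas_fraction(om_b, f_gas):.2f}")
```

The quoted range of gas fractions therefore maps onto $\Omega_M$ between roughly 0.2 and 0.4. Counting stellar baryons as well would raise the baryon fraction slightly and lower these values a little.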

Another handle on the density parameter in matter comes from properties of clusters at high redshift. The very existence of massive clusters has been used to argue in favor of ΩM ∼ 0.2 [15], and the lack of appreciable evolution of clusters from high redshifts to the present [16, 44] provides additional evidence that ΩM < 1.0.

The story of large-scale motions is more ambiguous. The peculiar velocities of galaxies are sensitive to the underlying mass density, and thus to ΩM, but also to the “bias” describing the relative amplitude of fluctuations in galaxies and mass [66, 65]. Difficulties both in measuring the flows and in disentangling the mass density from other effects make it difficult to draw conclusions at this point, and at present it is hard to say much more than 0.2 ≤ ΩM ≤ 1.0.

Finally, the matter density parameter can be extracted from measurements of the power spectrum of density fluctuations (see for example [187]). As with the CMB, predicting the power spectrum requires both an assumption of the correct theory and a specification of a number of cosmological parameters. In simple models (e.g., with only cold dark matter and baryons, no massive neutrinos), the spectrum can be fit (once the amplitude is normalized) by a single “shape parameter”, which is found to be equal to Γ = ΩMh. (For more complicated models see [82].) Observations then yield Γ ∼ 0.25, or ΩM ∼ 0.36. For a more careful comparison between models and observations, see [156, 157, 71, 205].

Thus, we have a remarkable convergence on values for the density parameter in matter:

$$0.1 \le \Omega_M \le 0.4 . \tag{52}$$

Even without the supernova results, this determination, in concert with the CMB measurements favoring a flat universe, provides a strong case for a nonzero cosmological constant.

3.4 Gravitational lensing

The volume of space back to a specified redshift, given by (44), depends sensitively on ΩΛ. Consequently, counting the apparent density of observed objects, whose actual density per cubic Mpc is assumed to be known, provides a potential test for the cosmological constant [109, 96, 244, 48]. Like tests of distance vs. redshift, a significant problem for such methods is the luminosity evolution of whatever objects one might attempt to count. A modern attempt to circumvent this difficulty is to use the statistics of gravitational lensing of distant galaxies; the hope is that the number of condensed objects which can act as lenses is less sensitive to evolution than the number of visible objects.

In a spatially flat universe, the probability of a source at redshift $z_s$ being lensed, relative to the fiducial ($\Omega_M = 1$, $\Omega_\Lambda = 0$) case, is given by

where $a_s = 1/(1 + z_s)$.

As shown in Figure 9, the probability rises dramatically as ΩΛ is increased to unity while the total Ω is held fixed. Thus, the absence of a large number of such lenses would imply an upper limit on ΩΛ.
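The qualitative rise can be reproduced with a simple optical-depth integral. The sketch below is not a transcription of the formula referred to in the text: it assumes a flat universe ($\Omega_M = 1 - \Omega_\Lambda$, radiation neglected), singular isothermal lenses with a constant comoving number density, and the resulting optical depth proportional to $\int_0^{\chi_s} [\chi(\chi_s - \chi)/\chi_s]^2\,d\chi$ in comoving distance $\chi$, then divides by the same quantity for $(\Omega_M, \Omega_\Lambda) = (1, 0)$.

```python
import math


def relative_lensing_probability(z_s, omega_l, n=4000):
    """Lensing optical depth to z_s in a flat universe, relative to (Omega_M, Omega_Lambda) = (1, 0).

    Assumptions (not from the text): Omega_M = 1 - Omega_Lambda, radiation neglected,
    singular isothermal lenses with constant comoving density. Distances in units of c/H0.
    """
    omega_m = 1.0 - omega_l

    def hubble_ratio(z):
        return math.sqrt(omega_m * (1.0 + z) ** 3 + omega_l)

    dz = z_s / n
    z_grid = [i * dz for i in range(n + 1)]
    # Cumulative comoving distance chi(z) = integral_0^z dz' / E(z'), trapezoid rule.
    chi = [0.0]
    for i in range(1, n + 1):
        chi.append(chi[-1] + 0.5 * dz * (1.0 / hubble_ratio(z_grid[i - 1]) + 1.0 / hubble_ratio(z_grid[i])))
    chi_s = chi[-1]
    # Optical depth ~ integral [chi (chi_s - chi) / chi_s]^2 dchi, with dchi = dz / E(z).
    f = [(c * (chi_s - c) / chi_s) ** 2 / hubble_ratio(z) for c, z in zip(chi, z_grid)]
    tau = dz * (sum(f) - 0.5 * (f[0] + f[-1]))
    # Same integral for Einstein-de Sitter: chi_s^3 / 30 with chi_s = 2 (1 - (1 + z_s)^(-1/2)).
    tau_eds = (4.0 / 15.0) * (1.0 - (1.0 + z_s) ** -0.5) ** 3
    return tau / tau_eds


for omega_l in (0.0, 0.5, 0.7, 0.9):
    print(f"Omega_Lambda = {omega_l}: P / P_EdS = {relative_lensing_probability(2.0, omega_l):.2f}")
```

Under these assumptions the relative probability climbs steadily as $\Omega_\Lambda$ grows, because both the path length and the volume out to a given source redshift increase.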

Analysis of lensing statistics is complicated by uncertainties in evolution, extinction, and biases in the lens discovery procedure. It has been argued [146, 83] that the existing data allow us to place an upper limit of ΩΛ < 0.7 in a flat universe. However, other groups [52, 51] have claimed that the current data actually favor a nonzero cosmological constant. The near future will bring larger, more objective surveys, which should allow these ambiguities to be resolved. Other manifestations of lensing can also be used to constrain ΩΛ, including statistics of giant arcs [275], deep weak-lensing surveys [133], and lensing in the Hubble Deep Field [61].

3.5 Other tests

There is a tremendous variety of ways in which a nonzero cosmological constant can manifest itself in observable phenomena. Here is an incomplete list of additional possibilities; see also [48, 58, 218].

-

Observations of numbers of objects vs. redshift are a potentially sensitive test of cosmological parameters if evolutionary effects can be brought under control. Although it is hard to account for the luminosity evolution of galaxies, it may be possible to indirectly count dark halos by taking into account the rotation speeds of visible galaxies, and upcoming redshift surveys could be used to constrain the volume/redshift relation [176].

-

Alcock and Paczyński [7] showed that the relationship between the apparent transverse and radial sizes of an object of cosmological size depends on the expansion history of the universe. Clusters of galaxies would be possible candidates for such a measurement, but they are insufficiently isotropic; alternatives, however, have been proposed, using for example the quasar correlation function as determined from redshift surveys [201, 204], or the Lyman-α forest [134].

-

In a related effect, the dynamics of large-scale structure can be affected by a nonzero cosmological constant; if a protocluster, for example, is anisotropic, it can begin to contract along a minor axis while the universe is matter-dominated and along its major axis while the universe is vacuum-dominated. Although small, such effects may be observable in individual clusters [153] or in redshift surveys [19].

-

A different version of the distance-redshift test uses extended lobes of radio galaxies as modified standard yardsticks. Current observations disfavor universes with ΩM near unity ([112], and references therein).

-

Inspiralling compact binaries at cosmological distances are potential sources of gravitational waves. It turns out that the redshift distribution of events is sensitive to the cosmological constant; although speculative, it has been proposed that advanced LIGO (Laser Interferometric Gravitational Wave Observatory [215]) detectors could use this effect to provide measurements of ΩΛ [262].

-

Finally, consistency of the age of the universe and the ages of its oldest constituents is a classic test of the expansion history. If stars were sufficiently old and H0 and ΩM were sufficiently high, a positive ΩΛ would be necessary to reconcile the two, and this situation has occasionally been thought to hold. Measurements of geometric parallax to nearby stars from the Hipparcos satellite have, at the least, called into question previous determinations of the ages of the oldest globular clusters, which are now thought to be perhaps 12 billion rather than 15 billion years old (see the discussion in [88]). It is therefore unclear whether the age issue forces a cosmological constant upon us, but by now it seems forced upon us for other reasons.
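The age test amounts to comparing $t_0 = H_0^{-1}\int_0^1 da/(a\,E(a))$ with stellar ages. A minimal sketch, assuming matter, curvature and vacuum energy only (radiation neglected), $E(a) = H(a)/H_0 = [\Omega_M a^{-3} + \Omega_k a^{-2} + \Omega_\Lambda]^{1/2}$, $h = 0.71$, and $H_0^{-1} = 977.8/(100h)$ Gyr:

```python
import math


def age_gyr(omega_m, omega_l, h=0.71, n=4000):
    """Present age t0 = (1/H0) * integral_0^1 da / (a E(a)), radiation neglected."""
    omega_k = 1.0 - omega_m - omega_l

    def integrand(a):
        if a == 0.0:
            return 0.0  # behaves like sqrt(a / Omega_M) in the matter-dominated past
        return 1.0 / (a * math.sqrt(omega_m / a**3 + omega_k / a**2 + omega_l))

    step = 1.0 / n
    s = integrand(0.0) + integrand(1.0)
    s += sum((4 if i % 2 else 2) * integrand(i * step) for i in range(1, n))
    hubble_time_gyr = 977.8 / (100.0 * h)  # 1/H0 in Gyr
    return hubble_time_gyr * s * step / 3.0


for om, ol in [(1.0, 0.0), (0.3, 0.0), (0.3, 0.7)]:
    print(f"Omega_M={om}, Omega_Lambda={ol}: t0 ~ {age_gyr(om, ol):.1f} Gyr")
```

With $h = 0.71$ this gives roughly 9 Gyr for $(\Omega_M, \Omega_\Lambda) = (1, 0)$ and roughly 13 Gyr for $(0.3, 0.7)$, so only the latter comfortably accommodates globular clusters of about 12 billion years.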

4 Physics Issues

In Section 1.3 we discussed the large difference between the magnitude of the vacuum energy expected from zero-point fluctuations and scalar potentials, $\rho_\Lambda^{\rm (theory)} \sim 2\times10^{110}$ erg/cm³, and the value we apparently observe, $\rho_\Lambda^{\rm (obs)} \sim 2\times10^{-10}$ erg/cm³ (which may be thought of as an upper limit, if we wish to be careful). It is somewhat unfair to characterize this discrepancy as a factor of $10^{120}$, since energy density can be expressed as a mass scale to the fourth power. Writing $\rho_\Lambda = M_{\rm vac}^4$, we find $M_{\rm vac}^{\rm (theory)} \sim M_{\rm Pl} \sim 10^{18}$ GeV and $M_{\rm vac}^{\rm (obs)} \sim 10^{-3}$ eV, so a fairer characterization of the problem would be

$$\frac{M_{\rm vac}^{\rm (theory)}}{M_{\rm vac}^{\rm (obs)}} \sim 10^{30} . \tag{54}$$

Of course, thirty orders of magnitude still constitutes a difference worthy of our attention.
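The conversion from an energy density to a mass scale is easy to check. The sketch below works in natural units ($\hbar = c = 1$), using the CODATA values of $\hbar c$ and of the electronvolt in erg (constants that do not appear in the original text), and recovers the two scales quoted above from the two densities:

```python
import math

HBAR_C_GEV_CM = 1.973269804e-14  # hbar * c in GeV cm
GEV_IN_ERG = 1.602176634e-3      # 1 GeV expressed in erg


def vacuum_mass_scale_gev(rho_erg_cm3):
    """M_vac such that rho = M_vac^4 with hbar = c = 1, returned in GeV."""
    rho_gev_cm3 = rho_erg_cm3 / GEV_IN_ERG         # energy density in GeV / cm^3
    rho_gev4 = rho_gev_cm3 * HBAR_C_GEV_CM ** 3    # (GeV / cm^3) * (GeV cm)^3 = GeV^4
    return rho_gev4 ** 0.25


m_theory = vacuum_mass_scale_gev(2e110)
m_obs = vacuum_mass_scale_gev(2e-10)
print(f"M_vac(theory) ~ {m_theory:.1e} GeV, M_vac(obs) ~ {m_obs * 1e9:.1e} eV")
print(f"ratio ~ 10^{math.log10(m_theory / m_obs):.0f}")
```

Because the mass scale is the fourth root of the density, the factor of $10^{120}$ in density becomes exactly $10^{30}$ in mass.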

Although the mechanism which suppresses the naive value of the vacuum energy is unknown, it seems easier to imagine a hypothetical scenario which makes it exactly zero than one which sets it to just the right value to be observable today. (We should keep in mind that it is the zero-temperature, late-time vacuum energy which we want to be small; it is expected to change at phase transitions, and a large value in the early universe is a necessary component of inflationary universe scenarios [113, 159, 6].) If the recent observations pointing toward a cosmological constant of astrophysically relevant magnitude are confirmed, we will be faced with the challenge of explaining not only why the vacuum energy is smaller than expected, but also why it has the specific nonzero value it does.

4.1 Supersymmetry

Although initially investigated for other reasons, supersymmetry (SUSY) turns out to have a significant impact on the cosmological constant problem, and may even be said to solve it halfway. SUSY is a spacetime symmetry relating fermions and bosons to each other. Just as ordinary symmetries are associated with conserved charges, supersymmetry is associated with “supercharges” Qα, where α is a spinor index (for introductions see [178, 166, 169]). As with ordinary symmetries, a theory may be supersymmetric even though a given state is not supersymmetric; a state which is annihilated by the supercharges, Qα|ψ〉 = 0, preserves supersymmetry, while states with Qα|ψ〉 ≠ 0 are said to spontaneously break SUSY.

Let us begin by considering “globally supersymmetric” theories, which are defined in flat spacetime (obviously an inadequate setting in which to discuss the cosmological constant, but we have to start somewhere). Unlike most non-gravitational field theories, in supersymmetry the total energy of a state has an absolute meaning; the Hamiltonian is related to the supercharges in a straightforward way:

$$H = \sum_\alpha \{Q_\alpha, Q_\alpha^\dagger\} , \tag{55}$$

where braces represent the anticommutator. Thus, in a completely supersymmetric state (in which Qα|ψ〉 = 0 for all α), the energy vanishes automatically, 〈ψ|H|ψ〉 = 0 [280]. More concretely, in a given supersymmetric theory we can explicitly calculate the contributions to the energy from vacuum fluctuations and from the scalar potential V. In the case of vacuum fluctuations, contributions from bosons are exactly canceled by equal and opposite contributions from fermions when supersymmetry is unbroken. Meanwhile, the scalar-field potential in supersymmetric theories takes on a special form; scalar fields $\phi^i$ must be complex (to match the degrees of freedom of the fermions), and the potential is derived from a function called the superpotential $W(\phi^i)$ which is necessarily holomorphic (written in terms of $\phi^i$ and not its complex conjugate $\bar\phi^i$). In the simple Wess-Zumino models of spin-0 and spin-1/2 fields, for example, the scalar potential is given by

$$V(\phi^i, \bar\phi^j) = \sum_i |\partial_i W|^2 , \tag{56}$$

where $\partial_i W = \partial W/\partial\phi^i$. In such a theory, one can show that SUSY will be unbroken only for values of $\phi^i$ such that $\partial_i W = 0$, implying $V(\phi^i, \bar\phi^j) = 0$.
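For a single chiral field the statement is easy to check numerically. The superpotential below, $W = (m/2)\phi^2 + (g/3)\phi^3$ with hypothetical couplings $m$ and $g$, is an illustrative choice; the Wess-Zumino potential $V = |\partial W/\partial\phi|^2$ is non-negative for every complex $\phi$ and vanishes exactly at the supersymmetric points $\phi = 0$ and $\phi = -m/g$.

```python
def dW(phi, m=1.0, g=0.5):
    """dW/dphi for the hypothetical superpotential W = (m/2) phi^2 + (g/3) phi^3."""
    return m * phi + g * phi**2


def scalar_potential(phi, m=1.0, g=0.5):
    """Wess-Zumino scalar potential V = |dW/dphi|^2 for one complex field phi."""
    return abs(dW(phi, m, g)) ** 2


# Supersymmetric vacua sit where dW/dphi = 0: phi = 0 and phi = -m/g = -2 here.
for phi in (0.0, -2.0, 1.0 + 1.0j, -1.0):
    print(phi, scalar_potential(phi))
```

Away from the zeros of $\partial W/\partial\phi$ the potential is strictly positive, which is the flat-space version of the statement that non-supersymmetric states carry positive vacuum energy.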

So the vacuum energy of a supersymmetric state in a globally supersymmetric theory will vanish. This represents rather less progress than it might appear at first sight, since:

1.) Supersymmetric states manifest a degeneracy in the mass spectrum of bosons and fermions, a feature not apparent in the observed world; and

2.) The above results imply that non-supersymmetric states have a positive-definite vacuum energy.

Indeed, in a state where SUSY was broken at an energy scale $M_{\rm SUSY}$, we would expect a corresponding vacuum energy $\rho_\Lambda \sim M_{\rm SUSY}^4$. In the real world, the fact that accelerator experiments have not discovered superpartners for the known particles of the Standard Model implies that $M_{\rm SUSY}$ is of order $10^3$ GeV or higher. Thus, we are left with a discrepancy

$$\frac{M_{\rm SUSY}}{M_{\rm vac}^{\rm (obs)}} \ge 10^{15} . \tag{57}$$

Comparison of this discrepancy with the naive discrepancy (54) is the source of the claim that SUSY can solve the cosmological constant problem halfway (at least on a log scale).

As mentioned, however, this analysis is strictly valid only in flat space. In curved spacetime, the global transformations of ordinary supersymmetry are promoted to the position-dependent (gauge) transformations of supergravity. In this context the Hamiltonian and supersymmetry generators play different roles than in flat spacetime, but it is still possible to express the vacuum energy in terms of a scalar field potential $V(\phi^i, \bar\phi^j)$. In supergravity $V$ depends not only on the superpotential $W(\phi^i)$, but also on a “Kähler potential” $K(\phi^i, \bar\phi^j)$, and the Kähler metric $K_{i\bar j}$ constructed from the Kähler potential by $K_{i\bar j} = \partial^2 K/\partial\phi^i\partial\bar\phi^j$. (The basic role of the Kähler metric is to define the kinetic term for the scalars, which takes the form $g^{\mu\nu}K_{i\bar j}\,\partial_\mu\phi^i\,\partial_\nu\bar\phi^j$.) The scalar potential is

$$V(\phi^i, \bar\phi^j) = e^{K/M_{\rm Pl}^2}\left[(K^{-1})^{i\bar j}(D_iW)(D_{\bar j}\bar W) - 3M_{\rm Pl}^{-2}|W|^2\right] , \tag{58}$$

where $D_iW$ is the Kähler derivative,

$$D_iW = \partial_i W + M_{\rm Pl}^{-2}(\partial_i K)\,W . \tag{59}$$

(In the presence of gauge fields there will also be non-negative “D-terms”, which do not change the present discussion.) Note that, if we take the canonical Kähler metric $K_{i\bar j} = \delta_{i\bar j}$, in the limit $M_{\rm Pl} \to \infty$ ($G \to 0$) the first term in square brackets reduces to the flat-space result (56). But with gravity, in addition to the non-negative first term we find a second term providing a non-positive contribution. Supersymmetry is unbroken when $D_iW = 0$; the effective cosmological constant of a supersymmetric vacuum is thus non-positive. We are therefore free to imagine a scenario in which supersymmetry is broken in exactly the right way, such that the two terms in square brackets cancel to fantastic accuracy, but only at the cost of an unexplained fine-tuning (see for example [63]). At the same time, supergravity is not by itself a renormalizable quantum theory, and therefore it may not be reasonable to hope that a solution can be found purely within this context.
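The kind of cancellation referred to here can be made concrete in the Polonyi model, a standard textbook example that is not discussed in the text. The sketch assumes a single chiral field with canonical Kähler potential $K = |\phi|^2$, superpotential $W = \mu^2(\phi + \beta)$, units $M_{\rm Pl} = 1$, and restricts to real $\phi$ for simplicity. With $\beta = 2 - \sqrt3$ the potential along the real axis has a minimum at $\phi = \sqrt3 - 1$ where $D_\phi W = \sqrt3\,\mu^2 \ne 0$ (supersymmetry is broken), yet $V = 0$, because the constant $\beta$ has been tuned by hand.

```python
import math

BETA = 2.0 - math.sqrt(3.0)  # hand-tuned constant in the hypothetical superpotential W = mu^2 (phi + beta)


def kahler_derivative(x, mu=1.0, beta=BETA):
    """D W = dW/dphi + (dK/dphi) W with K = |phi|^2, so dK/dphi = conj(phi) = x for real phi."""
    w = mu**2 * (x + beta)
    return mu**2 + x * w


def sugra_potential(x, mu=1.0, beta=BETA):
    """V = e^K [ |D W|^2 - 3 |W|^2 ] at real phi = x, units M_Pl = 1, canonical Kahler metric."""
    w = mu**2 * (x + beta)
    return math.exp(x * x) * (kahler_derivative(x, mu, beta) ** 2 - 3.0 * w**2)


def min_on_grid(beta, lo=0.0, hi=2.0, n=20001):
    """Smallest value of V on a uniform grid of real field values in [lo, hi]."""
    return min(sugra_potential(lo + (hi - lo) * i / (n - 1), beta=beta) for i in range(n))


x_min = math.sqrt(3.0) - 1.0
print("V at phi = sqrt(3) - 1:", sugra_potential(x_min))   # zero up to rounding
print("D W there:", kahler_derivative(x_min))             # nonzero, so SUSY is broken
print("min V, beta + 0.01:", min_on_grid(BETA + 0.01))    # negative
print("min V, beta - 0.01:", min_on_grid(BETA - 0.01))    # positive
```

Nudging $\beta$ by $\pm 0.01$ makes the minimum negative or positive, so the vanishing vacuum energy is not protected by anything in the model; it holds only because $\beta$ was chosen precisely, which is the unexplained fine-tuning described above.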

4.2 String theory

Unlike supergravity, string theory appears to be a consistent and well-defined theory of quantum gravity, and therefore calculating the value of the cosmological constant should, at least in principle, be possible. On the other hand, the number of vacuum states seems to be quite large, and none of them (to the best of our current knowledge) features three large spatial dimensions, broken supersymmetry, and a small cosmological constant. At the same time, there are reasons to believe that any realistic vacuum of string theory must be strongly coupled [70]; therefore, our inability to find an appropriate solution may simply be due to the technical difficulty of the problem. (For general introductions to string theory, see [110, 203]; for cosmological issues, see [167, 21]).

String theory is naturally formulated in more than four spacetime dimensions. Studies of duality symmetries have revealed that what used to be thought of as five distinct ten-dimensional superstring theories — Type I, Types IIA and IIB, and heterotic theories based on gauge groups E(8)×E(8) and SO(32) — are, along with eleven-dimensional supergravity, different low-energy weak-coupling limits of a single underlying theory, sometimes known as M-theory. In each of these six cases, the solution with the maximum number of uncompactified, flat spacetime dimensions is a stable vacuum preserving all of the supersymmetry. To bring the theory closer to the world we observe, the extra dimensions can be compactified on a manifold whose Ricci tensor vanishes. There are a large number of possible compactifications, many of which preserve some but not all of the original supersymmetry. If enough SUSY is preserved, the vacuum energy will remain zero; generically there will be a manifold of such states, known as the moduli space.

Of course, to describe our world we want to break all of the supersymmetry. Investigations in contexts where this can be done in a controlled way have found that the induced cosmological constant vanishes at the classical level, but a substantial vacuum energy is typically induced by quantum corrections [110]. Moore [174] has suggested that Atkin-Lehner symmetry, which relates strong and weak coupling on the string worldsheet, can enforce the vanishing of the one-loop quantum contribution in certain models (see also [67, 68]); generically, however, there would still be an appreciable contribution at two loops.

Thus, the search is still on for a four-dimensional string theory vacuum with broken supersymmetry and vanishing (or very small) cosmological constant. (See [69] for a general discussion of the vacuum problem in string theory.) The difficulty of achieving this in conventional models has inspired a number of more speculative proposals, which I briefly list here.

- In three spacetime dimensions supersymmetry can remain unbroken, maintaining a zero cosmological constant, in such a way as to break the mass degeneracy between bosons and fermions [271]. This mechanism relies crucially on special properties of spacetime in (2+1) dimensions, but in string theory it sometimes happens that the strong-coupling limit of one theory is another theory in one higher dimension [272, 273]. It is therefore conceivable that our four-dimensional world is the strong-coupling limit of a three-dimensional theory in which such a mechanism operates.

- More generally, it is now understood that (at least in some circumstances) string theory obeys the “holographic principle”, the idea that a theory with gravity in D dimensions is equivalent to a theory without gravity in D − 1 dimensions [235, 234]. In a holographic theory, the number of degrees of freedom in a region grows as the area of its boundary, rather than as its volume. Therefore, the conventional computation of the cosmological constant due to vacuum fluctuations, which counts field modes throughout a volume, conceivably involves a vast overcounting of degrees of freedom. We might imagine that a more correct counting would yield a much smaller estimate of the vacuum energy [20, 57, 254, 222], although no reliable calculation has been done as yet.

- The absence of manifest SUSY in our world leads us to ask whether the beneficial aspect of canceling contributions to the vacuum energy could be achieved even without a truly supersymmetric theory. Kachru, Kumar and Silverstein [139] have constructed such a string theory, and argue that the perturbative contributions to the cosmological constant should vanish (although the actual calculations are somewhat delicate, and not everyone agrees [136]). If such a model could be made to work, it is possible that small non-perturbative effects could generate a cosmological constant of an astrophysically plausible magnitude [116].

- A novel approach to compactification starts by imagining that the fields of the Standard Model are confined to a (3+1)-dimensional manifold (or “brane”, in string theory parlance) embedded in a larger space. While gravity is harder to confine to a brane, phenomenologically acceptable scenarios can be constructed if either the extra dimensions are no larger than about a millimeter [216, 10, 124, 13, 140], or there is significant spacetime curvature in a non-compact extra dimension [259, 207, 107]. Although these scenarios do not offer a simple solution to the cosmological constant problem, the relationship between the vacuum energy and the expansion rate can differ from our conventional expectation (see for example [32, 142]), and one is free to imagine that further study may lead to a solution in this context (see for example [231, 40]).
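To give a rough sense of how vast the overcounting mentioned in the holographic item above could be, the following Python sketch compares a volume-law count of degrees of freedom with an area-law count for a sphere the size of the Hubble radius. It assumes, purely for illustration, one degree of freedom per Planck volume (or per Planck area) and a Hubble radius of about 1.3 × 10^26 m; the constants and the helper `dof_counts` are hypothetical choices of this sketch, not part of any of the cited calculations, and all factors of order unity are ignored.

```python
# Order-of-magnitude comparison of volume-law vs area-law counting of
# degrees of freedom. ASSUMPTION (illustrative only): one degree of freedom
# per Planck volume or per Planck area; O(1) factors are not meaningful.
import math

PLANCK_LENGTH_M = 1.616e-35   # Planck length in metres
HUBBLE_RADIUS_M = 1.3e26      # rough Hubble radius c/H0 in metres (assumed)


def dof_counts(radius_m: float, cutoff_m: float = PLANCK_LENGTH_M):
    """Return (volume-law count, area-law count) for a sphere of the given radius."""
    n = radius_m / cutoff_m                      # radius in units of the cutoff length
    volume_count = (4.0 / 3.0) * math.pi * n**3  # one mode per cutoff volume
    area_count = 4.0 * math.pi * n**2            # one mode per cutoff area
    return volume_count, area_count


vol, area = dof_counts(HUBBLE_RADIUS_M)
overcount = vol / area  # equals n/3, so it grows linearly with the radius

print(f"log10(volume-law count) ~ {math.log10(vol):.0f}")
print(f"log10(area-law count)   ~ {math.log10(area):.0f}")
print(f"log10(overcount factor) ~ {math.log10(overcount):.0f}")
```

The ratio of the two counts grows linearly with the radius measured in Planck units, so for a Hubble-sized region it comes out at roughly 10^60. This only indicates the scale of the possible overcounting; it is not an estimate of the vacuum energy itself.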

Of course, string theory might not be the correct description of nature, or its current formulation might not be directly relevant to the cosmological constant problem. For example, a solution may be provided by loop quantum gravity [98], or by a composite graviton [233]. It is probably safe to believe that a significant advance in our understanding of fundamental physics will be required before we can demonstrate the existence of a vacuum state with the desired properties. (Not to mention the equally important question of why our world is based on such a state, rather than one of the highly supersymmetric states that appear to be perfectly good vacua of string theory.)

4.3 The anthropic principle

The anthropic principle [25, 122] is essentially the idea that some of the parameters characterizing the universe we observe may be determined not solely by the fundamental laws of physics, but also by the truism that intelligent observers will only ever experience conditions which allow for the existence of intelligent observers. Many professional cosmologists view this principle in much the same way as many traditional literary critics view deconstruction: as somehow simultaneously empty of content and capable of working great evil. Anthropic arguments are easy to misuse, and can be invoked as a way out of doing the hard work of understanding the real reasons behind why we observe the universe we do. Furthermore, a sense of disappointment would inevitably accompany the realization that there were limits to our ability to unambiguously and directly explain the observed universe from first principles. It is nevertheless possible that some features of our world have at best an anthropic explanation, and the value of the cosmological constant is perhaps the most likely candidate.

In order for the tautology that “observers will only observe conditions which allow for observers” to have any force, it is necessary for there to be alternative conditions — parts of the universe, either in space, time, or branches of the wavefunction — where things are different. In such a case, our local conditions arise as some combination of the relative abundance of different environments and the likelihood that such environments would give rise to intelligence. Clearly, the current state of the art doesn’t allow us to characterize the full set of conditions in the entire universe with any confidence, but modern theories of inflation and quantum cosmology do at least allow for the possibility of widely disparate parts of the universe in which the “constants of nature” take on very different values (for recent examples see [100, 161, 256, 163, 118, 162, 251, 258]). We are therefore faced with the task of estimating quantitatively the likelihood of observing any specific value of Λ within such a scenario.

The most straightforward anthropic constraint on the vacuum energy is that it must not be so high that galaxies never form [263]. From the discussion in Section 2.4, we know that overdense regions do not collapse once the cosmological constant begins to dominate the universe; if this happens before the epoch of galaxy formation, the universe will be devoid of galaxies, and thus of stars and planets, and thus (presumably) of intelligent life. The condition that ΩΛ(zgal) ≤ ΩM(zgal) implies

\[\frac{\Omega_{\Lambda 0}}{\Omega_{M0}} \le (1 + z_{\rm gal})^3 \sim 125 ,\]

where we have taken the redshift of formation of the first galaxies to be zgal ∼ 4. Thus, the cosmological constant could be somewhat larger than observation allows and still be consistent with the existence of galaxies. (This estimate, like the ones below, holds parameters such as the amplitude of density fluctuations fixed while allowing ΩΛ to vary; depending on one’s model of the universe of possibilities, it may be more defensible to vary a number of parameters at once. See for example [239, 104, 122].)
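The bound above follows from a one-line scaling argument: ρΛ is constant while the matter density scales as (1 + z)^3, so ΩΛ(z)/ΩM(z) = (ΩΛ0/ΩM0)(1 + z)^(−3), and requiring this ratio to be at most unity at zgal gives ΩΛ0/ΩM0 ≤ (1 + zgal)^3. The short Python sketch below simply evaluates that bound; the function name is hypothetical, and the redshifts other than zgal ∼ 4 are arbitrary illustrative choices showing how sensitive the bound is to the assumed formation epoch.

```python
# Anthropic upper bound from requiring Omega_Lambda(z_gal) <= Omega_M(z_gal).
# rho_Lambda is constant while rho_M scales as (1 + z)**3, so the condition
# becomes Omega_Lambda0 / Omega_M0 <= (1 + z_gal)**3.


def max_lambda_to_matter_ratio(z_gal: float) -> float:
    """Largest present-day Omega_Lambda0/Omega_M0 compatible with galaxies forming at z_gal."""
    if z_gal < 0:
        raise ValueError("formation redshift must be non-negative")
    return (1.0 + z_gal) ** 3


for z in (2.0, 4.0, 6.0):  # z_gal = 4 reproduces the value 125 used in the text
    print(f"z_gal = {z:.0f}: Omega_Lambda0/Omega_M0 <= {max_lambda_to_matter_ratio(z):.0f}")
```

Because the bound scales as the cube of (1 + zgal), moving the assumed formation redshift by a couple of units changes the allowed ratio by a factor of a few, which is one reason such estimates are quoted only to order of magnitude.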

However, it is more informative to ask what the most likely value of ΩΛ is, i.e., the value that would be experienced by the largest number of observers [257, 76]. Since a universe with ΩΛ0/ΩM0 ∼ 1 will have many more galaxies than one with ΩΛ0/ΩM0 ∼ 100, it is quite conceivable that most observers will measure something close to the former value. The probability measure for observing a value of ρΛ can be decomposed as

\[d{\mathcal P}({\rho _\Lambda }) = \nu ({\rho _\Lambda })\,{{\mathcal P}_ * }({\rho _\Lambda })\,d{\rho _\Lambda },\]

where \({{\mathcal P}_ * }({\rho _\Lambda })d{\rho _\Lambda }\) is the a priori probability measure (whatever that might mean) for ρΛ, and ν(ρΛ) is the average number of galaxies which form at the specified value of ρΛ. Martel, Shapiro and Weinberg [168] have presented a calculation of ν(ρΛ) using a spherical-collapse model. They argue that it is natural to take the a priori distribution to be a constant, since the allowed range of ρΛ is very far from what we would expect from particle-physics scales. Garriga and Vilenkin [105] argue on the basis of quantum cosmology that there can be a significant departure from a constant a priori distribution. However, in either case the conclusion is that an observed ΩΛ0 of the same order of magnitude as ΩM0 is by no means extremely unlikely (which is probably the best one can hope to say given the uncertainties in the calculation).
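The decomposition of the probability measure into an a priori factor and a galaxy-count factor can be made concrete with a toy numerical model. In the Python sketch below, ρΛ is measured in units of the present matter density ρM0, the a priori measure is taken to be flat (as Martel, Shapiro and Weinberg argue is natural), and the galaxy-count function `nu` is an entirely hypothetical placeholder that falls smoothly to zero at ρΛ = (1 + zgal)^3 ρM0 with zgal = 4, loosely motivated by the galaxy-formation condition discussed above. It is not the spherical-collapse result of [168]; the code only illustrates the mechanics of normalizing ν𝒫* and reading off probabilities.

```python
# Toy version of dP(rho) = nu(rho) * P_star(rho) d(rho), with rho = rho_Lambda
# in units of the present matter density rho_M0.
# Both nu and p_star below are HYPOTHETICAL placeholders for illustration only.

RHO_CUT = (1.0 + 4.0) ** 3  # assumed: no galaxies form beyond this value (z_gal = 4)


def p_star(rho: float) -> float:
    """Flat a priori measure over the window of interest (assumed)."""
    return 1.0


def nu(rho: float) -> float:
    """Hypothetical mean galaxy count: smooth fall-off, zero for rho >= RHO_CUT."""
    return max(0.0, 1.0 - rho / RHO_CUT) ** 2


def prob_below(x: float, rho_max: float = 200.0, n: int = 20000) -> float:
    """Probability that an observer measures rho <= x, using the midpoint rule."""
    step = rho_max / n
    grid = [(i + 0.5) * step for i in range(n)]
    weights = [nu(r) * p_star(r) for r in grid]
    norm = sum(weights)  # the common factor of `step` cancels in the ratio
    return sum(w for r, w in zip(grid, weights) if r <= x) / norm


for x in (1.0, 3.0, 10.0, 50.0):
    print(f"P(rho_Lambda <= {x:>4.0f} rho_M0) = {prob_below(x):.3f}")
```

With these particular assumptions the cumulative probability has the closed form 1 − (1 − x/125)^3, which the numerical integration reproduces; any conclusion drawn from the numbers is only as good as the assumed shape of ν and of the a priori measure.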

Thus, if one is willing to make the leap of faith required to believe that the value of the cosmological constant is chosen from an ensemble of possibilities, it is possible to find an “explanation” for its current value (which, given its unnaturalness from a variety of perspectives, seems otherwise hard to understand). Perhaps the most significant weakness of this point of view is the assumption that there is a continuum of possibilities for the vacuum energy density. Such possibilities correspond to choices of vacuum states with arbitrarily similar energies. If these states were connected to each other, there would be local fluctuations which would appear to us as massless fields, which are not observed (see Section 4.5). If on the other hand the vacua are disconnected, it is hard to understand why all possible values of the vacuum energy are represented, rather than the differences in energies between different vacua being given by some characteristic particle-physics scale such as MPl or MSUSY. (For one scenario featuring discrete vacua with densely spaced energies, see [23].) It will therefore (again) require advances in our understanding of fundamental physics before an anthropic explanation for the current value of the cosmological constant can be accepted.

4.4 Miscellaneous adjustment mechanisms

The importance of the cosmological constant problem has engendered a wide variety of proposed solutions. This section will present only a brief outline of some of the possibilities, along with references to recent work; further discussion and references can be found in [264, 48, 218].

One approach which has received a great deal of attention is the famous suggestion by Coleman [59], that effects of virtual wormholes could set the cosmological constant to zero at low energies. The essential idea is that wormholes (thin tubes of spacetime connecting macroscopically large regions) can act to change the effective value of all the observed constants of nature. If we calculate the wave function of the universe by performing a Feynman path integral over all possible spacetime metrics with wormholes, the dominant contribution will be from those configurations whose effective values for the physical constants extremize the action. These turn out to be, under a certain set of assumed properties of Euclidean quantum gravity, configurations with zero cosmological constant at late times. Thus, quantum cosmology predicts that the constants we observe are overwhelmingly likely to take on values which imply a vanishing total vacuum energy. However, subsequent investigations have failed to inspire confidence that the desired properties of Euclidean quantum cosmology are likely to hold, although it is still something of an open question; see discussions in [264, 48].

Another route one can take is to consider alterations of the classical theory of gravity. The simplest possibility is to consider adding a scalar field to the theory, with dynamics which cause the scalar to evolve to a value for which the net cosmological constant vanishes (see for example [74, 230]). Weinberg, however, has pointed out on fairly general grounds that such attempts are unlikely to work [264, 265]; in models proposed to date, either there is no solution for which the effective vacuum energy vanishes, or there is a solution but with other undesirable properties (such as making Newton’s constant G also vanish). Rather than adding scalar fields, a related approach is to remove degrees of freedom by making the determinant of the metric, which multiplies Λ0 in the action (15), a non-dynamical quantity, or at least changing its dynamics in some way (see [111, 270, 177] for recent examples). While this approach has not led to a believable solution to the cosmological constant problem, it does change the context in which it appears, and may induce different values for the effective vacuum energy in different branches of the wavefunction of the universe.

Along with global supersymmetry, there is one other symmetry which would work to prohibit a cosmological constant: conformal (or scale) invariance, under which the metric is multiplied by a spacetime-dependent function, \(g_{\mu\nu} \to e^{\lambda(x)} g_{\mu\nu}\). Like supersymmetry, conformal invariance is not manifest in the Standard Model of particle physics. However, it has been proposed that quantum effects could restore conformal invariance on length scales comparable to the cosmological horizon size, working to cancel the cosmological constant (for some examples see [240, 12, 11]). At this point it remains unclear whether this suggestion is compatible with a more complete understanding of quantum gravity, or with standard cosmological observations.
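The reason conformal invariance would forbid a cosmological constant can be seen in one line. In four dimensions the determinant of the metric picks up four powers of the conformal factor, so the cosmological-constant term in the action, which is proportional to Λ times the integral of √−g, is rescaled point by point (overall signs and normalization of the Λ term depend on conventions and are left generic here):

```latex
g_{\mu\nu} \to e^{\lambda(x)} g_{\mu\nu}
\;\Rightarrow\;
\det g_{\mu\nu} \to e^{4\lambda(x)} \det g_{\mu\nu}
\;\Rightarrow\;
\sqrt{-g} \to e^{2\lambda(x)} \sqrt{-g},
\qquad
\Lambda \int d^4x \, \sqrt{-g} \;\to\; \Lambda \int d^4x \, e^{2\lambda(x)} \sqrt{-g} .
```

Since λ(x) is an arbitrary function, this term can be invariant only if Λ = 0.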

A final mechanism to suppress the cosmological constant, related to the previous one, relies on quantum particle production in de Sitter space (analogous to Hawking radiation around black holes). The idea is that the effective energy-momentum tensor of such particles may act to cancel out the bare cosmological constant (for recent attempts see [242, 243, 1, 184]). There is currently no consensus on whether such an effect is physically observable (see for example [252]).

If inventing a theory in which the vacuum energy vanishes is difficult, finding a model that predicts a vacuum energy which is small but not quite zero is all the harder. Along these lines, there are various numerological games one can play. For example, the fact that supersymmetry solves the problem halfway could be suggestive; a theory in which the effective vacuum energy scale was given not by \(M_{\rm SUSY} \sim 10^3\) GeV but by \(M_{\rm SUSY}^2/M_{\rm Pl} \sim 10^{-3}\) eV would seem to fit the observations very well. The challenging part of this program, of course, is to devise such a theory. Alternatively, one could imagine that we live in a “false vacuum”: that the absolute minimum of the vacuum energy is truly zero, but we live in a state which is only a local minimum of the energy. Scenarios along these lines have been explored [250, 103, 152]; the major hurdle to be overcome is explaining why the energy difference between the true and false vacua is so much smaller than one would expect.
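The numerology mentioned above is easy to check. The Python sketch below evaluates \(M_{\rm SUSY}^2/M_{\rm Pl}\) taking \(M_{\rm SUSY} = 10^3\) GeV and assuming the reduced Planck mass \(M_{\rm Pl} = (8\pi G)^{-1/2} \approx 2.4 \times 10^{18}\) GeV; the constant names and the helper function are hypothetical. It also shows one way to read the phrase “solves the problem halfway”: on a logarithmic scale, the suppression of \(M_{\rm SUSY}^2/M_{\rm Pl}\) relative to \(M_{\rm Pl}\) is exactly twice that of \(M_{\rm SUSY}\).

```python
import math

M_SUSY_GEV = 1.0e3   # supersymmetry-breaking scale quoted in the text
M_PL_GEV = 2.4e18    # ASSUMED reduced Planck mass (8*pi*G)**-0.5 in GeV
GEV_TO_EV = 1.0e9


def geometric_vacuum_scale(m_susy: float, m_pl: float) -> float:
    """Return M_SUSY**2 / M_Pl in the same units as the inputs."""
    return m_susy ** 2 / m_pl


m_vac_gev = geometric_vacuum_scale(M_SUSY_GEV, M_PL_GEV)
print(f"M_SUSY^2 / M_Pl ~ {m_vac_gev * GEV_TO_EV:.1e} eV")

# "Halfway" on a log scale: log10(M_vac/M_Pl) = 2 * log10(M_SUSY/M_Pl) exactly.
log_susy = math.log10(M_SUSY_GEV / M_PL_GEV)
log_vac = math.log10(m_vac_gev / M_PL_GEV)
print(f"log10(M_SUSY/M_Pl) = {log_susy:.1f}, log10(M_vac/M_Pl) = {log_vac:.1f}")
```

The result, a few times 10^−4 eV, agrees with the quoted 10^−3 eV at the order-of-magnitude level that this kind of numerology aims for; using the non-reduced Planck mass instead would lower it by a factor of √(8π) ≈ 5.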

4.5 Other sources of dark energy