Abstract

I review the development of numerical evolution codes for general relativity based upon the characteristic initial value problem. Progress is traced from the early stage of 1D feasibility studies to 2D axisymmetric codes that accurately simulate the oscillations and gravitational collapse of relativistic stars and to current 3D codes that provide pieces of a binary black hole spacetime. A prime application of characteristic evolution is to compute waveforms via Cauchy-characteristic matching, which is also reviewed.


1 Introduction

It is my pleasure to review progress in numerical relativity based upon characteristic evolution. In the spirit of Living Reviews in Relativity, I invite my colleagues to continue to send me contributions and comments at jeff@einstein.phyast.pitt.edu.

We are now in an era in which Einstein’s equations can effectively be considered solved at the local level. Several groups, as reported here and in other Living Reviews in Relativity, have developed 3D codes which are stable and accurate in some sufficiently local setting. Global solutions are another matter. In particular, there is no single code in existence today which purports to be capable of computing the waveform of gravitational radiation emanating from the inspiral and merger of two black holes, the premier problem in classical relativity. Just as several coordinate patches are necessary to describe a spacetime with nontrivial topology, the most effective attack on the binary black hole problem may involve patching together pieces of spacetime handled by a combination of different codes and techniques.

Most of the effort in numerical relativity has centered about the Cauchy {3+1} formalism [226], with the gravitational radiation extracted by perturbative methods based upon introducing an artificial Schwarzschild background in the exterior region [1, 4, 2, 3, 181, 180, 156]. These wave extraction methods have not been thoroughly tested in a nonlinear 3D setting. A different approach which is specifically tailored to study radiation is based upon the characteristic initial value problem. In the 1960’s, Bondi [45, 46] and Penrose [166] pioneered the use of null hypersurfaces to describe gravitational waves. This new approach has flourished in general relativity. It led to the first unambiguous description of gravitational radiation in a fully nonlinear context. It yields the standard linearized description of the “plus” and “cross” polarization modes of gravitational radiation in terms of the Bondi news function N at future null infinity \({{\mathcal I}^ +}\). The Bondi news function is an invariantly defined complex radiation amplitude \(N = {N_ \oplus } + i{N_ \otimes }\), whose real and imaginary parts correspond to the time derivatives \({\partial _t}{h_ \oplus }\) and \({\partial _t}{h_ \otimes }\) of the “plus” and “cross” polarization modes of the strain h incident on a gravitational wave antenna.
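As a minimal illustration of this last statement, the Python sketch below builds sampled values of the news function from sampled polarization strains by finite differencing. It assumes the convention \(N = {\partial _t}{h_ \oplus } + i\,{\partial _t}{h_ \otimes }\) with no extra normalization factor (sign and normalization conventions differ across the literature). The helper name `news_from_strain` is hypothetical and is not taken from any of the codes reviewed here.

```python
def news_from_strain(times, h_plus, h_cross):
    """Return samples of N = d(h_plus)/dt + i d(h_cross)/dt.

    Assumed convention: real part <-> plus mode, imaginary part <-> cross
    mode, no extra normalization. Uses centred differences in the interior
    and one-sided differences at the two ends (exact for linear data).
    """
    n = len(times)
    if n < 2 or len(h_plus) != n or len(h_cross) != n:
        raise ValueError("need at least two samples of equal length")
    news = []
    for i in range(n):
        lo = max(i - 1, 0)      # one-sided at the first sample
        hi = min(i + 1, n - 1)  # one-sided at the last sample
        dt = times[hi] - times[lo]
        news.append(complex((h_plus[hi] - h_plus[lo]) / dt,
                            (h_cross[hi] - h_cross[lo]) / dt))
    return news
```

For example, strains growing linearly as \(h_\oplus = 2t\) and \(h_\otimes = -3t\) give N = 2 − 3i at every sample, up to rounding.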

The major drawback of the characteristic approach arises from the formation of caustics in the light rays generating the null hypersurfaces. In the most ambitious scheme proposed at the theoretical level such caustics would be treated “head-on” as part of the evolution problem [205]. This is a profoundly attractive idea. Only a few structurally stable caustics can arise in numerical evolution, and their geometrical properties are well enough understood to model their singular behavior numerically [87], although a computational implementation has not yet been attempted.

In the typical setting for the characteristic initial value problem, the domain of dependence of a single nonsingular null hypersurface is empty. In order to obtain a nontrivial evolution problem, the null hypersurface must either be completed to a caustic-crossover region where it pinches off, or an additional boundary must be introduced. So far, the only caustics that have been successfully evolved numerically in general relativity are pure point caustics (the complete null cone problem). When spherical symmetry is not present, it turns out that the stability conditions near the vertex of a light cone place a strong restriction on the allowed time step [136]. Point caustics in general relativity have been successfully handled this way for axisymmetric spacetimes [106], but the computational demands for 3D evolution would be prohibitive using current generation supercomputers. This is unfortunate because, away from the caustics, characteristic evolution offers myriad computational and geometrical advantages.

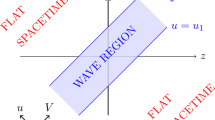

As a result, at least in the near future, fully three-dimensional computational applications of characteristic evolution are likely to be restricted to some mixed form, in which data is prescribed on a non-singular but incomplete initial null hypersurface N and on a second boundary hypersurface B, which together with the initial null hypersurface determine a nontrivial domain of dependence. The hypersurface B may be either (i) null, (ii) timelike or (iii) spacelike, as schematically depicted in Figure 1. The first two possibilities give rise to (i) the double null problem and (ii) the nullcone-worldtube problem. Possibility (iii) has more than one interpretation. It may be regarded as a Cauchy initial boundary value problem where the outer boundary is null. An alternative interpretation is the Cauchy-characteristic matching (CCM) problem, in which the Cauchy and characteristic evolutions are matched transparently across a worldtube W, as indicated in Figure 1.

In CCM, it is possible to choose the matching interface between the Cauchy and characteristic regions to be a null hypersurface, but it is more practical to match across a timelike worldtube. CCM combines the advantages of characteristic evolution in treating the outer radiation zone in spherical coordinates which are naturally adapted to the topology of the worldtube with the advantages of Cauchy evolution in treating the inner region in Cartesian coordinates, where spherical coordinates would break down.

In this review, we trace the development of characteristic algorithms from model 1D problems to a 2D axisymmetric code which computes the gravitational radiation from the oscillation and gravitational collapse of a relativistic star and to a 3D code designed to calculate the waveform emitted in the merger to ringdown phase of a binary black hole. And we trace the development of CCM from early feasibility studies to successful implementation in the linear regime and through current attempts to treat the binary black hole problem.

This material includes several notable developments since my last review. Most important for future progress have been two Ph.D. theses based upon characteristic evolution codes. Florian Siebel’s thesis work [191], at the Technische Universität München, integrates an axisymmetric characteristic gravitational code with a high resolution shock capturing code for relativistic hydrodynamics. This coupled general relativistic code has been thoroughly tested and has yielded state-of-the-art results for the gravitational waves produced by the oscillation and collapse of a relativistic star (see Sections 5.1 and 5.2). In Yosef Zlochower’s thesis work [228], at the University of Pittsburgh, the gravitational waves generated in the post-merger phase of a binary black hole are computed using a fully nonlinear three-dimensional characteristic code [229] (see Section 3.8). He shows how the characteristic code can be employed to investigate the nonlinear mode coupling in the response of a black hole to the infall of gravitational waves.

A further notable achievement has been the successful application of CCM to the linearized matching problem between a 3D characteristic code and a 3D Cauchy code based upon harmonic coordinates [208] (see Section 4.7). Here the linearized Cauchy code satisfies a well-posed initial-boundary value problem, which seems to be a critical missing ingredient in previous attempts at CCM in general relativity.

The problem of computing the evolution of a self-gravitating object, such as a neutron star, in close orbit about a black hole is of clear importance to the new gravitational wave detectors. The interaction with the black hole could be strong enough to produce a drastic change in the emitted waves, say by tidally disrupting the star, so that a perturbative calculation would be inadequate. The understanding of such nonlinear phenomena requires well behaved numerical simulations of hydrodynamic systems satisfying Einstein’s equations. Several numerical relativity codes for treating the problem of a neutron star near a black hole have been developed, as described in the Living Review in Relativity on “Numerical Hydrodynamics in General Relativity” by Font [80]. Although most of these efforts concentrate on Cauchy evolution, the characteristic approach has shown remarkable robustness in dealing with a single black hole or relativistic star. In this vein, state-of-the-art axisymmetric studies of the oscillation and gravitational collapse of relativistic stars have been achieved (see Section 5.2) and progress has been made in the 3D simulation of a body in close orbit about a Schwarzschild black hole (see Sections 5.3 and 5.3.1).

In previous reviews, I tried to include material on the treatment of boundaries in the computational mathematics and fluid dynamics literature because of its relevance to the CCM problem. The fertile growth of this subject makes this impractical to continue. A separate Living Review in Relativity on boundary conditions is certainly warranted and is presently under consideration. In view of this, I will not attempt to keep this subject up to date except for material of direct relevance to CCM, although I will for now retain the past material.

Animations and other material from these studies can be viewed at the web sites of the University of Canberra [217], Louisiana State University [148], Pittsburgh University [218], and Pittsburgh Supercomputing Center [145].

2 The Characteristic Initial Value Problem

Characteristics have traditionally played an important role in the analysis of hyperbolic partial differential equations. However, the use of characteristic hypersurfaces to supply the foliation underlying an evolution scheme has been mainly restricted to relativity. This is perhaps natural because in curved spacetime there is no longer a preferred Cauchy foliation provided by the Euclidean 3-spaces allowed in Galilean or special relativity. The method of shooting along characteristics is a standard technique in many areas of computational physics, but evolution based upon characteristic hypersurfaces is quite uniquely limited to relativity.

Bondi’s initial use of null coordinates to describe radiation fields [45] was followed by a rapid development of other null formalisms. These were distinguished either as metric based approaches, as developed for axisymmetry by Bondi, Metzner and van der Burg [46] and generalized to 3 dimensions by Sachs [184], or as null tetrad approaches in which the Bianchi identities appear as part of the system of equations, as developed by Newman and Penrose [158].

At the outset, null formalisms were applied to construct asymptotic solutions at null infinity by means of 1/r expansions. Soon afterward, Penrose devised the conformal compactification of null infinity \({\mathcal I}\) (“scri”), thereby reducing to geometry the asymptotic quantities describing the physical properties of the radiation zone, most notably the Bondi mass and news function [166]. The characteristic initial value problem rapidly became an important tool for the clarification of fundamental conceptual issues regarding gravitational radiation and its energy content. It laid bare and geometrised the gravitational far field.

The initial focus on asymptotic solutions clarified the kinematic properties of radiation fields but could not supply the waveform from a specific source. It was soon realized that instead of carrying out a 1/r expansion, one could reformulate the approach in terms of the integration of ordinary differential equations along the characteristics (null rays) [209]. The integration constants supplied on some inner boundary then determined the specific waveforms obtained at infinity. In the double-null initial value problem of Sachs [185], the integration constants are supplied at the intersection of outgoing and ingoing null hypersurfaces. In the worldtube-nullcone formalism, the sources were represented by integration constants on a timelike worldtube [209]. These early formalisms have gone through much subsequent revamping. Some have been reformulated to fit the changing styles of modern differential geometry. Some have been reformulated in preparation for implementation as computational algorithms. The articles in [72] give a representative sample of formalisms. Rather than including a review of the extensive literature on characteristic formalisms in general relativity, I concentrate here on those approaches which have been implemented as computational evolution schemes. The existence and uniqueness of solutions to the associated boundary value problems, which has obvious relevance to the success of numerical simulations, is treated in a separate Living Review in Relativity on “Theorems on Existence and Global Dynamics for the Einstein Equations” by Rendall [179].

All characteristic evolution schemes share the same skeletal form. The fundamental ingredient is a foliation by null hypersurfaces u = const. which are generated by a two-dimensional set of null rays, labeled by coordinates \(x^A\), with a coordinate λ varying along the rays. In (u, λ, \(x^A\)) null coordinates, the main set of Einstein equations takes the schematic form

\[F_{,\lambda} = H_F[F, G] \tag{1}\]

and

\[G_{,u\lambda} = H_G[F, G, G_{,u}]. \tag{2}\]

Here F represents a set of hypersurface variables, G a set of evolution variables, and \(H_F\) and \(H_G\) are nonlinear hypersurface operators, i.e. they operate locally on the values of F, G and \(G_{,u}\) intrinsic to a single null hypersurface. In the Bondi formalism, these hypersurface equations have a hierarchical structure in which the members of the set F can be integrated in turn in terms of the characteristic data for the evolution variables and prior members of the hierarchy. In addition to these main equations, there is a subset of four Einstein equations which are satisfied by virtue of the Bianchi identities, provided that they are satisfied on a hypersurface transverse to the characteristics. These equations have the physical interpretation as conservation laws. Mathematically they are analogous to the constraint equations of the canonical formalism. But they are not necessarily elliptic, since they may be intrinsic to null or timelike hypersurfaces, rather than spacelike Cauchy hypersurfaces.

Computational implementation of characteristic evolution may be based upon different versions of the formalism (i.e. metric or tetrad) and different versions of the initial value problem (i.e. double null or worldtube-nullcone). The performance and computational requirements of the resulting evolution codes can vary drastically. However, most characteristic evolution codes share certain common advantages:

-

The initial data is free. There are no elliptic constraints on the data, which eliminates the need for time consuming iterative constraint solvers and artificial boundary conditions. This flexibility and control in prescribing initial data has the trade-off of limited experience with prescribing physically realistic characteristic initial data.

-

The coordinates are very “rigid”, i.e. there is very little remaining gauge freedom.

-

The constraints satisfy ordinary differential equations along the characteristics which force any constraint violation to fall off asymptotically as \(1/{r^2}\).

-

No second time derivatives appear so that the number of basic variables is at most half the number for the corresponding version of the Cauchy problem.

-

The main Einstein equations form a system of coupled ordinary differential equations with respect to the parameter λ varying along the characteristics. This allows construction of an evolution algorithm in terms of a simple march along the characteristics.

-

In problems with isolated sources, the radiation zone can be compactified into a finite grid boundary with the metric rescaled by \(1/{r^2}\) as an implementation of Penrose’s conformal method. Because the Penrose boundary is a null hypersurface, no extraneous outgoing radiation condition or other artificial boundary condition is required. The analogous treatment in the Cauchy problem requires the use of hyperboloidal spacelike hypersurfaces asymptoting to null infinity [85]. For reviews of the hyperboloidal approach and its status in treating the associated three-dimensional computational problem, see [131, 81].

-

The grid domain is exactly the region in which waves propagate, which is ideally efficient for radiation studies. Since each null hypersurface of the foliation extends to infinity, the radiation is calculated immediately (in retarded time).

-

In black hole spacetimes, a large redshift at null infinity relative to internal sources is an indication of the formation of an event horizon and can be used to limit the evolution to the exterior region of spacetime. While this can be disadvantageous for late time accuracy, it allows the possibility of identifying the event horizon “on the fly”, as opposed to Cauchy evolution where the event horizon can only be located after the evolution has been completed.

Perhaps most important from a practical view, characteristic evolution codes have shown remarkably robust stability and were the first to carry out long term evolutions of moving black holes [102].

Characteristic schemes also share as a common disadvantage the necessity either to deal with caustics or to avoid them altogether. The scheme to tackle the caustics head on by including their development as part of the evolution is perhaps a great idea still ahead of its time, but one that should not be forgotten. There are only a handful of structurally stable caustics, and they have well known algebraic properties. Their structural stability should in principle make it possible to model their singular structure in terms of Padé approximants, and algorithms to evolve the elementary caustics have been proposed [69, 202]. In the axisymmetric case, cusps and folds are the only structurally stable caustics, and they have already been identified in the horizon formation occurring in simulations of head-on collisions of black holes and in the temporarily toroidal horizons occurring in collapse of rotating matter [151, 189]. In a generic binary black hole horizon, where axisymmetry is broken, there is a closed curve of cusps which bounds the two-dimensional region on the horizon where the black holes initially form and merge [144, 133].

3 Prototype Characteristic Evolution Codes

Limited computer power, as well as the instabilities arising from non-hyperbolic formulations of Einstein’s equations, necessitated that the early code development in general relativity be restricted to spacetimes with symmetry. Characteristic codes were first developed for spacetimes with spherical symmetry. The techniques for relativistic fields which propagate on null characteristics are similar to the gravitational case. Such fields are included in this section. We postpone treatment of relativistic fluids, whose characteristics are timelike, until Section 5.

3.1 {1 + 1}-dimensional codes

It is often said that the solution of the general ordinary differential equation is essentially known, in light of the success of computational algorithms and present day computing power. Perhaps this is an overstatement because investigating singular behavior is still an art. But, in this spirit, it is fair to say that the general system of hyperbolic partial differential equations in one spatial dimension seems to be a solved problem in general relativity. Codes have been successful in revealing important new phenomena underlying singularity formation in cosmology [29] and in dealing with unstable spacetimes to discover critical phenomena [111]. As described below, characteristic evolution has contributed to a rich variety of such results.

One of the earliest characteristic evolution codes, constructed by Corkill and Stewart [69, 201], treated spacetimes with two Killing vectors using a grid based upon double null coordinates, with the null hypersurfaces intersecting in the surfaces spanned by the Killing vectors. They simulated colliding plane waves and evolved the Khan-Penrose [141] collision of impulsive (δ-function curvature) plane waves to within a few numerical zones from the final singularity, with extremely close agreement with the analytic results. Their simulations of collisions with more general waveforms, for which exact solutions are not known, provided input to the understanding of singularity formation which was unforeseen in the analytic treatments of this problem.

Many {1 + 1}-dimensional characteristic codes have been developed for spherically symmetric systems. Here matter must be included in order to make the system non-Schwarzschild. Initially the characteristic evolution of matter was restricted to simple cases, such as massless Klein-Gordon fields, which allowed simulation of gravitational collapse and radiation effects in the simple context of spherical symmetry. Now, characteristic evolution of matter is progressing to more complicated systems. Its application to hydrodynamics has made significant contributions to general relativistic astrophysics, as reviewed in Section 5.

The synergy between analytic and computational approaches has already led to dramatic results in the massless Klein-Gordon case. On the analytic side, working in a characteristic initial value formulation based upon outgoing null cones, Christodoulou made a penetrating study of the spherically symmetric problem [59, 60, 61, 62, 63, 64]. In a suitable function space, he showed the existence of an open ball about Minkowski space data whose evolution is a complete regular spacetime; he showed that an evolution with a nonzero final Bondi mass forms a black hole; he proved a version of cosmic censorship for generic data; and he established the existence of naked singularities for non-generic data. What this analytic tour-de-force did not reveal was the remarkable critical behavior in the transition to the black hole regime, which was discovered by Choptuik [57, 58] by computational simulation based upon Cauchy evolution. This phenomenon has now been understood in terms of the methods of renormalization group theory and intermediate asymptotics, and has spawned a new subfield in general relativity, which is covered in the Living Review in Relativity on “Critical Phenomena in Gravitational Collapse” by Gundlach [111].

The characteristic evolution algorithm for the spherically symmetric Einstein-Klein-Gordon problem provides a simple illustration of the techniques used in the general case. It centers about the evolution scheme for the scalar field, which constitutes the only dynamical field. Given the scalar field, all gravitational quantities can be determined by integration along the characteristics of the null foliation. This is a coupled problem, since the scalar wave equation involves the curved space metric. It illustrates how null algorithms lead to a hierarchy of equations which can be integrated along the characteristics to effectively decouple the hypersurface and dynamical variables.

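In a Bondi coordinate system based upon outgoing null hypersurfaces u = const. and a surface area coordinate r, the metric is

\[ds^2 = -e^{2\beta}\frac{V}{r}\,du^2 - 2e^{2\beta}\,du\,dr + r^2\left(d\theta^2 + \sin^2\theta\,d\phi^2\right). \tag{3}\]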

Smoothness at r = 0 allows imposition of the coordinate conditions

\[V(u, r) = r + {\mathcal O}(r^3), \qquad \beta(u, r) = {\mathcal O}(r^2). \tag{4}\]

The field equations consist of the curved space wave equation □Φ = 0 for the scalar field and two hypersurface equations for the metric functions:

\[\beta_{,r} = 2\pi r\,(\Phi_{,r})^2, \tag{5}\]

\[V_{,r} = e^{2\beta}. \tag{6}\]

The wave equation can be expressed in the form

\[\square^{(2)} g - \left(\frac{V}{r}\right)_{,r}\frac{e^{-2\beta}\,g}{r} = 0, \tag{7}\]

where g = rΦ and \({\square ^{(2)}}\) is the d’Alembertian associated with the two-dimensional submanifold spanned by the ingoing and outgoing null geodesics. Initial null data for evolution consists of Φ(u0, r) at initial retarded time u0.
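To illustrate the hierarchy, the following Python sketch takes null data Φ on a single cone and integrates the hypersurface equations \(\beta_{,r} = 2\pi r(\Phi_{,r})^2\) and \(V_{,r} = e^{2\beta}\) outward from the vertex: β first, since its equation involves only Φ, and then V, since its equation involves only β. It assumes geometric units with G = 1, a radial grid starting at r = 0, and trapezoidal quadrature with the startup values β = V = 0 implied by the smoothness conditions at the vertex. The function name is hypothetical.

```python
import math


def integrate_hypersurface(r, phi):
    """Integrate beta_{,r} = 2 pi r (Phi_{,r})^2 and V_{,r} = exp(2 beta)
    outward along one outgoing null cone.

    r   : increasing radial grid with r[0] == 0 (the vertex of the cone)
    phi : null data Phi sampled on r
    Returns (beta, V) on the same grid. beta is integrated first because its
    equation needs only Phi; V then needs only beta (the hypersurface hierarchy).
    """
    n = len(r)
    if n < 2 or r[0] != 0.0 or len(phi) != n:
        raise ValueError("need matching grids starting at r = 0")
    # Phi_{,r}: centred differences inside, one-sided at the two ends.
    dphi = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        dphi.append((phi[hi] - phi[lo]) / (r[hi] - r[lo]))
    # Startup from smoothness: beta = O(r^2), V = r + O(r^3), so both vanish at r = 0.
    beta, V = [0.0], [0.0]
    for i in range(1, n):
        dr = r[i] - r[i - 1]
        # Trapezoidal rule for beta_{,r} = 2 pi r (Phi_{,r})^2.
        src_prev = 2.0 * math.pi * r[i - 1] * dphi[i - 1] ** 2
        src_here = 2.0 * math.pi * r[i] * dphi[i] ** 2
        beta.append(beta[-1] + 0.5 * dr * (src_prev + src_here))
        # Trapezoidal rule for V_{,r} = exp(2 beta), using the beta just found.
        V.append(V[-1] + 0.5 * dr * (math.exp(2.0 * beta[-2]) + math.exp(2.0 * beta[-1])))
    return beta, V
```

With Φ = 0 the sketch returns β = 0 and V = r (Minkowski space). With the linear profile Φ = cr it returns \(\beta = \pi c^2 r^2\), which the trapezoidal rule reproduces exactly up to rounding because the integrand is linear in r.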

Because any two-dimensional geometry is conformally flat, the surface integral of \({\square ^{(2)}}g\) over a null parallelogram Σ gives exactly the same result as in a flat 2-space, and leads to an integral identity upon which a simple evolution algorithm can be based [108]. Let the vertices of the null parallelogram be labeled by N, E, S, and W corresponding, respectively, to their relative locations (North, East, South, and West) in the 2-space, as shown in Figure 2. Upon integration of Equation (7), curvature introduces an integral correction to the flat space null parallelogram relation between the values of g at the vertices:

\[g_N - g_W - g_E + g_S = -\frac{1}{2}\int_\Sigma du\,dr\,\left(\frac{V}{r}\right)_{,r}\frac{g}{r}. \tag{8}\]

This identity, in one form or another, lies behind all of the null evolution algorithms that have been applied to this system. The prime distinction between the different algorithms is whether they are based upon double null coordinates or Bondi coordinates as in Equation (3). When a double null coordinate system is adopted, the points N, E, S, and W can be located in each computational cell at grid points, so that evaluation of the left hand side of Equation (8) requires no interpolation. As a result, in flat space, where the right hand side of Equation (8) vanishes, it is possible to formulate an exact evolution algorithm. In curved space, of course, there is a truncation error arising from the approximation of the integral, e.g., by evaluating the integrand at the center of Σ.
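The exactness of the flat-space algorithm can be checked with a short Python sketch. It assumes a uniform double null grid with u = iΔ and v = jΔ, where v = u + 2r labels the ingoing null rays of Minkowski space, so that the origin r = 0 is the diagonal v = u. Each new value follows from \(g_N = g_W + g_E - g_S\), i.e. the parallelogram identity with a vanishing curvature integral, and regularity supplies g = 0 at the origin. The function names are hypothetical.

```python
import math


def march_flat_double_null(g_initial, n_u):
    """March g = r*Phi across a flat-space double null grid.

    g_initial[j] holds g(u = 0, v = j*delta), with g_initial[0] = 0 at the
    origin. Row i of the result holds g(u = i*delta, v = j*delta) for j >= i;
    entries with j < i would lie at r < 0 and are left as None.
    """
    n_v = len(g_initial)
    if n_u > n_v:
        raise ValueError("the grid must satisfy n_u <= n_v")
    rows = [list(g_initial)]
    for i in range(1, n_u):
        prev = rows[-1]           # cone u_{i-1}: holds the points S and E
        row = [None] * n_v        # cone u_i: holds the points W and N
        row[i] = 0.0              # regularity at the origin r = 0 (v = u)
        for j in range(i + 1, n_v):
            # Null parallelogram rule g_N = g_W + g_E - g_S (flat space).
            row[j] = row[j - 1] + prev[j] - prev[j - 1]
        rows.append(row)
    return rows


def profile(s):
    """Arbitrary smooth function B used to build a test solution."""
    return math.exp(-(s - 3.0) ** 2)


if __name__ == "__main__":
    delta, n_v, n_u = 0.1, 60, 40
    g0 = [profile(j * delta) - profile(0.0) for j in range(n_v)]
    g = march_flat_double_null(g0, n_u)
    err = max(abs(g[i][j] - (profile(j * delta) - profile(i * delta)))
              for i in range(n_u) for j in range(i, n_v))
    print(f"max deviation from B(v) - B(u): {err:.1e}")
```

Since any regular flat-space solution has the form g = B(v) − B(u), starting the march from g(0, v) = B(v) − B(0) reproduces that solution at every grid point up to rounding error, which is what the usage block checks.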

The identity (8) gives rise to the following explicit marching algorithm, indicated in Figure 2. Let the null parallelogram lie at some fixed θ and ϕ and span adjacent retarded time levels u0 and u0 + Δu. Imagine for now that the points N, E, S, and W lie on the spatial grid, with \(r_N - r_W = r_E - r_S = \Delta r\). If g has been determined on the entire initial cone u0, which contains the points E and S, and g has been determined radially outward from the origin to the point W on the next cone u0 + Δu, then Equation (8) determines g at the next radial grid point N in terms of an integral over Σ. The integrand can be approximated to second order, i.e. to \({\mathcal O}(\Delta r\Delta u)\), by evaluating it at the center of Σ. To this same accuracy, the value of g at the center equals its average between the points E and W, at which g has already been determined. Similarly, the value of \((V/r)_{,r}\) at the center of Σ can be approximated to second order in terms of values of V at points where it can be determined by integrating the hypersurface equations (5, 6) radially outward from r = 0.

After carrying out this procedure to evaluate g at the point N, the procedure can then be iterated to determine g at the next radially outward grid point on the u0 + Δu level, i.e. point n in Figure 2. Upon completing this radial march to null infinity, in terms of a compactified radial coordinate such as x = r/(1 + r), the field g is then evaluated on the next null cone at u0 + 2Δu, beginning at the vertex where smoothness gives the startup condition that g(u, 0) = 0.
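A single cell update of this march can be written in a few lines. The Python sketch below assumes that g is already known at W, E, and S, and applies \(g_N = g_W + g_E - g_S - \frac{1}{2}\int_\Sigma du\,dr\,(V/r)_{,r}\,g/r\) with the integral approximated by its midpoint value times the coordinate area ΔuΔr of Σ (exact only if the cell edges are straight in the (u, r) plane), taking g at the center as the average of \(g_E\) and \(g_W\). The value of \((V/r)_{,r}\) at the center is treated as an input; for illustration, the second helper supplies it for the Schwarzschild exterior, where β = 0 and V = r − 2M, so that \((V/r)_{,r} = 2M/r^2\). Both function names are hypothetical.

```python
def parallelogram_step(g_w, g_e, g_s, du, dr, r_c, dvr_dr_c):
    """Advance g to the vertex N of one null parallelogram.

    The curvature integral is approximated by its midpoint value times the
    coordinate area du*dr of the cell, with g at the centre taken as the
    average of g_E and g_W and dvr_dr_c = (V/r)_{,r} at the centre r_c.
    """
    g_c = 0.5 * (g_e + g_w)
    curvature_integral = du * dr * dvr_dr_c * g_c / r_c
    return g_w + g_e - g_s - 0.5 * curvature_integral


def schwarzschild_dvr_dr(r, mass):
    """(V/r)_{,r} for beta = 0, V = r - 2M, i.e. V/r = 1 - 2M/r."""
    return 2.0 * mass / r ** 2
```

With `dvr_dr_c = 0.0` the step reduces to the flat-space rule; in a Schwarzschild background one would pass `schwarzschild_dvr_dr(r_c, M)` as the last argument.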

In the compactified Bondi formalism, the vertices N, E, S, and W of the null parallelogram Σ cannot be chosen to lie exactly on the grid because, even in Minkowski space, the velocity of light in terms of a compactified radial coordinate x is not constant. As a consequence, the fields g, β, and V at the vertices of Σ are approximated to second order accuracy by interpolating between grid points. However, cancellations arise between these four interpolations so that Equation (8) is satisfied to fourth order accuracy. The net result is that the finite difference version of Equation (8) steps g radially outward one zone with an error of fourth order in grid size, \({\mathcal O}({(\Delta u)^2}{(\Delta x)^2})\). In addition, the smoothness conditions (4) can be incorporated into the startup for the numerical integrations for V and β to ensure no loss of accuracy in starting up the march at r = 0. The resulting global error in g, after evolving a finite retarded time, is then \({\mathcal O}(\Delta u\Delta x)\), after compounding errors over the \(1/(\Delta u\Delta x)\) zones.
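The error count above can be checked in practice with a standard three-level convergence test, which is a generic technique rather than something specific to the codes reviewed here. A local error of \({\mathcal O}({(\Delta u)^2}{(\Delta x)^2})\) per zone, accumulated over \(1/(\Delta u\Delta x)\) zones, gives a global error \({\mathcal O}(\Delta u\Delta x)\); with Δu refined in proportion to Δx this is second order, so halving both steps should cut the error by roughly a factor of four. The Python sketch below estimates the observed order p from a quantity computed at three resolutions; the function name and the default refinement factor of 2 are assumptions.

```python
import math


def observed_order(coarse, medium, fine, refinement=2.0):
    """Estimate the convergence order p from a quantity computed with steps
    h, h/refinement and h/refinement**2, assuming error ~ C * h**p.

    The ratio of successive differences is refinement**p, independent of
    the unknown exact value and of the constant C.
    """
    diff_coarse = abs(coarse - medium)
    diff_fine = abs(medium - fine)
    if diff_fine == 0.0:
        raise ValueError("finest two results agree; order cannot be estimated")
    return math.log(diff_coarse / diff_fine) / math.log(refinement)
```

For a second order scheme such as the one described above, values of p close to 2 are expected once the grid resolves the solution.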

When implemented on a grid based upon the (u, r) coordinates, the stability of this algorithm is subject to a Courant-Friedrichs-Lewy (CFL) condition requiring that the physical domain of dependence be contained in the numerical domain of dependence. In the spherically symmetric case, this condition requires that the ratio of the time step to radial step be limited by \((V/r)\Delta u \leq 2\Delta r\), where Δr = Δ[x/(1−x)]. This condition can be built into the code using the value \(V/r = {e^{2H}}\), corresponding to the maximum of V/r at \({{\mathcal I}^ +}\). The strongest restriction on the time step then arises just before the formation of a horizon, where V/r → ∞ at \({{\mathcal I}^ +}\). This infinite redshift provides a mechanism for locating the true event horizon “on the fly” and restricting the evolution to the exterior spacetime. Points near \({{\mathcal I}^ +}\) must be dropped in order to evolve across the horizon due to the lack of a nonsingular compactified version of future time infinity \({I^ +}\).
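The bound can be evaluated directly on a compactified grid. The Python sketch below assumes a uniform grid \(x_i = i/n_x\) on [0, 1), maps it to \(r_i = x_i/(1 - x_i)\), and applies the CFL condition \((V/r)\Delta u \leq 2\Delta r\) conservatively, pairing the smallest radial step (next to the origin) with the largest value of V/r; this is stricter than imposing the condition zone by zone. Because \(V_{,r} = e^{2\beta}\), V/r tends to \(e^{2H}\) at \({{\mathcal I}^ +}\) when H denotes the limiting value of β there; here H is simply an input. The function names are hypothetical.

```python
import math


def compactified_radii(n_x):
    """r_i = x_i / (1 - x_i) on x_i = i / n_x, excluding x = 1 (scri, r -> infinity)."""
    dx = 1.0 / n_x
    return [i * dx / (1.0 - i * dx) for i in range(n_x)]


def max_stable_du(n_x, H):
    """Largest du with (V/r) du <= 2 dr, using min(dr) and max(V/r) = exp(2H)."""
    r = compactified_radii(n_x)
    dr_min = min(b - a for a, b in zip(r, r[1:]))  # smallest step, at the origin
    v_over_r_max = math.exp(2.0 * H)
    return 2.0 * dr_min / v_over_r_max
```

As H grows, the allowed step shrinks like \(e^{-2H}\), which is the mechanism behind the increasingly severe restriction as a horizon forms.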

The situation is quite different in a double null coordinate system, in which the vertices of the null parallelogram can be placed exactly on grid points so that the CFL condition is automatically satisfied. A characteristic code based upon double null coordinates was developed by Goldwirth and Piran in a study of cosmic censorship [95] based upon the spherically symmetric gravitational collapse of a massless scalar field. Their early study lacked the sensitivity of adaptive mesh refinement (AMR) which later enabled Choptuik to discover the critical phenomena appearing in this problem. Subsequent work by Marsa and Choptuik [150] combined the use of the null related ingoing Eddington-Finkelstein coordinates with Unruh’s strategy of singularity excision to construct a 1D code that “runs forever”. Later, Garfinkle [90] constructed an improved version of the Goldwirth-Piran double null code which was able to simulate critical phenomena without using adaptive mesh refinement. In this treatment, as the evolution proceeds from one outgoing null cone to the next, the grid points follow the ingoing null cones and must be dropped as they cross the origin at r = 0. However, after half the grid points are lost they are then “recycled” at new positions midway between the remaining grid points. This technique is crucial for resolving the critical phenomena associated with an r → 0 size horizon. An extension of the code [91] was later used to verify that scalar field collapse in six dimensions continues to display critical phenomena.
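The recycling strategy can be sketched in Python for the positions of the grid points alone. This is a simplified illustration, not Garfinkle’s code: it assumes flat space, where ingoing null rays obey dr/du = −V/(2r) = −1/2, drops grid points once they reach r = 0, and, once half of the original points have been lost, inserts a new point midway between each pair of neighbors (including the origin and the innermost point). A working code would also have to assign field values at the new points, for example by interpolation; that step is omitted. The function name is hypothetical.

```python
def advance_and_recycle(r_points, du, n_initial):
    """Move ingoing-ray grid points one retarded-time step and recycle them.

    Hypothetical flat-space sketch: positions only, no field values.
    """
    # Flat space: ingoing null rays satisfy dr/du = -V/(2r) = -1/2.
    moved = [r - 0.5 * du for r in r_points]
    # Drop grid points that have reached (or passed) the origin r = 0.
    kept = [r for r in moved if r > 0.0]
    if kept and len(kept) <= n_initial // 2:
        anchors = [0.0] + kept
        refined = []
        for a, b in zip(anchors, anchors[1:]):
            refined.append(0.5 * (a + b))  # new point midway between neighbours
            refined.append(b)
        kept = refined                     # restores the original point count
    return kept
```

Because the refilled points cluster wherever the surviving rays are, the resolution near r = 0 keeps improving as the evolution proceeds, which is what allows a collapsing configuration with an ever smaller horizon scale to stay resolved.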

Hamadé and Stewart [118] also applied a double null code to study critical phenomena. In order to obtain the accuracy necessary to confirm Choptuik’s results they developed the first example of a characteristic grid with AMR. They did this with both the standard Berger and Oliger algorithm and their own simplified version, with both versions giving indistinguishable results. Their simulations of critical collapse of a massless scalar field agreed with Choptuik’s values for the universal parameters governing mass scaling and displayed the echoing associated with discrete self-similarity. Hamadé, Horne, and Stewart [117] extended this study to the spherical collapse of an axion/dilaton system and found in this case that self-similarity was a continuous symmetry of the critical solution.

Brady, Chambers, and Goncalves [47] used Garfinkle’s [90] double null algorithm to investigate the effect of a massive scalar field on critical phenomena. The introduction of a mass term in the scalar wave equation introduces a scale to the problem, which suggests that the critical point behavior might differ from the massless case. They found that there are two different regimes depending on the ratio of the Compton wavelength 1/m of the scalar mass to the radial size λ of the scalar pulse used to induce collapse. When λm ≪ 1, the critical solution is the one found by Choptuik in the m = 0 case, corresponding to a type II phase transition. However, when λm ≫ 1, the critical solution is an unstable soliton star (see [188]), corresponding to a type I phase transition where black hole formation turns on at a finite mass.

A code based upon Bondi coordinates, developed by Husa and his collaborators [132], has been successfully applied to spherically symmetric critical collapse of a nonlinear σ-model coupled to gravity. Critical phenomena cannot be resolved on a static grid based upon the Bondi r-coordinate. Instead, the numerical techniques of Garfinkle were adopted by using a dynamic grid following the ingoing null rays and by recycling radial grid points. They studied how coupling to gravity affects the critical behavior previously observed by Bizoń [43] and others in the Minkowski space version of the model. For a wide range of the coupling constant, they observe discrete self-similarity and typical mass scaling near the critical solution. The code is shown to be second order accurate and to give second order convergence for the value of the critical parameter.

The first characteristic code in Bondi coordinates for the self-gravitating scalar wave problem was constructed by Gómez and Winicour [108]. They introduced a numerical compactification of \({{\mathcal I}^ +}\) for the purpose of studying effects of self-gravity on the scalar radiation, particularly in the high amplitude limit of the rescaling Φ → αΦ. As α → ∞, the redshift creates an effective boundary layer at \({{\mathcal I}^ +}\) which causes the Bondi mass \(M_B\) and the scalar field monopole moment \(Q\) to be related by \({M_B} \sim \pi \vert Q\vert/\sqrt 2\), rather than the quadratic relation of the weak field limit [108]. This could also be established analytically so that the high amplitude limit provided a check on the code’s ability to handle strongly nonlinear fields. In the small amplitude case, this work incorrectly reported that the radiation tails from black hole formation had an exponential decay characteristic of quasinormal modes rather than the polynomial \(1/t\) or \(1/t^2\) falloff expected from Price’s [175] work on perturbations of Schwarzschild black holes. In hindsight, the error here was a lack of confidence to run the code sufficiently long to see the proper late time behavior.

Gundlach, Price, and Pullin [113, 114] subsequently reexamined the issue of power law tails using a double null code similar to that developed by Goldwirth and Piran. Their numerical simulations verified the existence of power law tails in the full nonlinear case, thus establishing consistency with analytic perturbative theory. They also found normal mode ringing at intermediate time, which provided reassuring consistency with perturbation theory and showed that there is a region of spacetime where the results of linearized theory are remarkably reliable even though highly nonlinear behavior is taking place elsewhere. These results have led to a methodology that has application beyond the confines of spherically symmetric problems, most notably in the “close approximation” for the binary black hole problem [176]. Power law tails and quasinormal ringing have also been confirmed using Cauchy evolution [150].

The study of the radiation tail decay of a scalar field was subsequently extended by Gómez, Schmidt, and Winicour [107] using a characteristic code. They showed that the Newman-Penrose constant [160] for the scalar field determines the exponent of the power law (and not the static monopole moment as often stated). When this constant is non-zero, the tail decays as \(1/t\) on \({{\mathcal I}^ +}\), as opposed to the \(1/t^2\) decay for the vanishing case. (They also found \(t^{-n}\log t\) corrections, in addition to the exponentially decaying contributions of the quasinormal modes.) This code was also used to study the instability of a topological kink in the configuration of the scalar field [17]. The kink instability provides the simplest example of the turning point instability [135, 197] which underlies gravitational collapse of static equilibria.

Brady and Smith [49] have demonstrated that characteristic evolution is especially well adapted to explore properties of Cauchy horizons. They examined the stability of the Reissner-Nordström Cauchy horizon using an Einstein-Klein-Gordon code based upon advanced Bondi coordinates (v, r) (where the hypersurfaces v = const are ingoing null hypersurfaces). They study the effect of a spherically symmetric scalar pulse on the spacetime structure as it propagates across the event horizon. Their numerical methods are patterned after the work of Goldwirth and Piran [95], with modifications of the radial grid structure that allow deep penetration inside the black hole. In accord with expectations from analytic studies, they find that the pulse first induces a weak null singularity on the Cauchy horizon, which then leads to a crushing spacelike singularity as r → 0. The null singularity is weak in the sense that an infalling observer experiences a finite tidal force, although the Newman-Penrose Weyl component Ψ2 diverges, a phenomenon known as mass inflation [171]. These results confirm the earlier result of Gnedin and Gnedin [94] that a central spacelike singularity would be created by the interaction of a charged black hole with a scalar field, in accord with a physical argument by Penrose [167] that a small perturbation undergoes an infinite redshift as it approaches the Cauchy horizon.

Burko [51] has confirmed and extended these results, using a code based upon double null coordinates which was developed with Ori [52] in a study of tail decay. He found that in the early stages the perturbation of the Cauchy horizon is weak and in agreement with the behavior calculated by perturbation theory.

Brady, Chambers, Krivan, and Laguna [48] have found interesting effects of a non-zero cosmological constant Λ on tail decay by using a characteristic Einstein-Klein-Gordon code to study the effect of a massless scalar pulse on Schwarzschild-de Sitter and Reissner-Nordström-de Sitter spacetimes. First, by constructing a linearized scalar evolution code, they show that scalar test fields with ℓ ≠ 0 have exponentially decaying tails, in contrast to the standard power law tails for asymptotically flat spacetimes. Rather than decaying, the monopole mode asymptotes at late time to a constant, which scales linearly with Λ, in contrast to the standard no-hair result. This unusual behavior for the ℓ = 0 case was then independently confirmed with a nonlinear spherical characteristic code.

Using a combination of numerical and analytic techniques based upon null coordinates, Hod and Piran have made an extensive series of investigations of the spherically symmetric charged Einstein-Klein-Gordon system dealing with the effect of charge on critical gravitational collapse [125] and the late time tail decay of a charged scalar field on a Reissner-Nordström black hole [126, 129, 127, 128]. These studies culminated in a full nonlinear investigation of horizon formation by the collapse of a charged massless scalar pulse [130]. They track the formation of an apparent horizon which is followed by a weakly singular Cauchy horizon which develops a strong spacelike singularity at r = 0. This is in complete accord with prior perturbative results and nonlinear simulations involving a pre-existing black hole. Oren and Piran [161] increased the late time accuracy of this study by incorporating an adaptive grid for the retarded time coordinate u, with a refinement criterion to maintain Δr/r = const. The accuracy of this scheme is confirmed through convergence tests as well as charge and constraint conservation. They were able to observe the physical mechanism which prohibits black hole formation with charge-to-mass ratio Q/M > 1. Electrostatic repulsion of the outer parts of the scalar pulse increases relative to the gravitational attraction and causes the outer portion of the charge to disperse to larger radii before the black hole is formed. Inside the black hole, they confirm the formation of a weakly singular Cauchy horizon which turns into a strong spacelike singularity, in accord with other studies.
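The refinement criterion Δr/r = const can be illustrated with a toy step-size rule along a single ingoing null ray. The sketch below is hypothetical and is not Oren and Piran’s implementation: it assumes a prescribed rate dr/du along the ray (the flat-space value −1/2 by default, and always negative) and picks each Δu so that the fractional change in r per step is a fixed ε. The function name `adaptive_u_steps` is illustrative.

```python
def adaptive_u_steps(r0, eps, r_min, drdu=lambda r: -0.5):
    """Step along one ingoing null ray, choosing du so that |dr|/r = eps.

    drdu : rate of change of r along the ray (assumed negative); the
           default -1/2 is the flat-space value.
    Returns the lists of retarded times u and radii r visited.
    """
    u, r = 0.0, r0
    us, rs = [u], [r]
    while r > r_min:
        rate = drdu(r)
        du = eps * r / abs(rate)   # refinement criterion: |delta r| = eps * r
        r += rate * du             # forward-Euler step along the ray
        u += du
        us.append(u)
        rs.append(r)
    return us, rs

us, rs = adaptive_u_steps(r0=1.0, eps=0.05, r_min=1e-3)
```

The radii shrink geometrically, r → (1 − ε) r per step, so the u-grid refines automatically as r → 0 while the relative resolution in r stays fixed.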

Hod extended this combined numerical-analytical double null approach to investigate higher order corrections to the dominant power law tail [123], as well as corrections due to a general spherically symmetric scattering potential [122] and due to a time dependent potential [124]. He found (log t)/t modifications to the leading order tail behavior for a Schwarzschild black hole, in accord with earlier results of Gómez et al. [107]. These modifications fall off at a slow rate so that a very long numerical evolution (t ≈ 3000 M) is necessary to cleanly identify the leading order power law decay.
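The practical consequence of a (log t)/t correction can be seen by computing the local power index n(t) = −d ln|ψ|/d ln t of a model tail. The sketch assumes a hypothetical tail ψ ∝ t⁻³[1 + c (log t)/t]; the leading exponent 3 and the constant c are illustrative choices, not results taken from [123]. For this model the index is n(t) = 3 + c(ln t − 1)/(t + c ln t), which approaches 3 only slowly.

```python
import numpy as np

def local_power_index(t, psi):
    """Local power index n(t) = -d ln|psi| / d ln t of a sampled tail."""
    return -np.gradient(np.log(np.abs(psi)), np.log(t))

# Hypothetical model tail: leading t^-3 decay with a (log t)/t correction.
c = 1.0
t = np.geomspace(10.0, 5000.0, 2000)
psi = t ** -3 * (1.0 + c * np.log(t) / t)
n = local_power_index(t, psi)
```

For c = 1 the index still differs from 3 by a few percent near t = 100 but by only about 0.2 percent near t = 3000, which illustrates why long evolutions are needed before a fitted exponent can be trusted.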

The foregoing numerical-analytical work based upon characteristic evolution has contributed to a very comprehensive classical treatment of spherically symmetric gravitational collapse. Sorkin and Piran [196] have investigated the question of quantum corrections due to pair creation on the gravitational collapse of a charged scalar field. For observers outside the black hole, several analytic studies have indicated that such pair-production can rapidly diminish the charge of the black hole. Sorkin and Piran apply the same double-null characteristic code used in studying the classical problem [130] to evolve across the event horizon and observe the quantum effects on the Cauchy horizon. The quantum electrodynamic effects are modeled in a rudimentary way by a nonlinear dielectric constant ϵ that limits the electric field to the critical value necessary for pair creation. The back-reaction of the pairs on the stress-energy and the electric current are ignored. They found that quantum effects leave the classical picture of the Cauchy horizon qualitatively intact but that they shorten its “lifetime” by hastening the conversion of the weak null singularity into a strong spacelike singularity.

The Southampton group has constructed a {1 + 1}-dimensional characteristic code for spacetimes with cylindrical symmetry [67, 76]. The original motivation was to use it as the exterior characteristic code in a test case of CCM (see Section 4.4.1 for the application to matching). Subsequently, Sperhake, Sjödin, and Vickers [198, 199] modified the code into a global characteristic version for the purpose of studying cosmic strings, represented by massive scalar and vector fields coupled to gravity. Using a Geroch decomposition [92] with respect to the translational Killing vector, they reduced the global problem to a {2 + 1}-dimensional asymptotically flat spacetime, so that \({{\mathcal I}^ +}\) can be compactified and included in the numerical grid. Rather than the explicit scheme used in CCM, the new version employs an implicit Crank-Nicolson evolution scheme, second order in space and time. The code showed long term stability and second order convergence in vacuum tests based upon exact Weber-Wheeler waves [221] and Xanthopoulos’ rotating solution [225], and in tests of wave scattering by a string. The results show damped ringing of the string after an incoming Weber-Wheeler pulse has excited it and then scattered to \({{\mathcal I}^ +}\). The ringing frequencies are independent of the details of the pulse but are inversely proportional to the masses of the scalar and vector fields.
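To illustrate what an implicit Crank-Nicolson scheme buys, the following sketch applies it to the model equation u_t + c u_x = 0 on a periodic grid with second order centered spatial differences. This is a generic textbook example, not the Southampton cylindrical code, and `crank_nicolson_advection` is a hypothetical name. Because the centered difference matrix is skew-symmetric, the Crank-Nicolson update preserves the discrete L² norm for any time step, so the sketch stays stable even at a Courant number of 5.

```python
import numpy as np

def crank_nicolson_advection(u0, c, dx, dt, nsteps):
    """Evolve u_t + c u_x = 0 on a periodic grid using Crank-Nicolson in time
    and second-order centered differences in space."""
    n = len(u0)
    eye = np.eye(n)
    # (D u)_i = (u_{i+1} - u_{i-1}) / (2 dx), with periodic wrap-around.
    D = (np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)) / (2.0 * dx)
    A = eye + 0.5 * c * dt * D      # implicit half (new time level)
    B = eye - 0.5 * c * dt * D      # explicit half (old time level)
    u = np.asarray(u0, dtype=float)
    for _ in range(nsteps):
        u = np.linalg.solve(A, B @ u)   # one implicit step: A u_new = B u_old
    return u

x = np.linspace(0.0, 1.0, 64, endpoint=False)
u0 = np.exp(-100.0 * (x - 0.5) ** 2)
dx = x[1] - x[0]
u = crank_nicolson_advection(u0, c=1.0, dx=dx, dt=5.0 * dx, nsteps=50)  # Courant number 5
```

The price of unconditional stability is a linear solve per step; a production code would exploit the banded structure rather than calling a dense solver.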

3.1.1 Adaptive mesh refinement

The goal of computing waveforms from relativistic binaries, such as a neutron star spiraling into a black hole, requires more than a stable convergent code. It is a delicate task to extract a waveform in a spacetime in which there are multiple length scales: the size of the black hole, the size of the star, the wavelength of the radiation. It is commonly agreed that some form of mesh refinement is essential to attack this problem. Mesh refinement was first applied in characteristic evolution to solve specific spherically symmetric problems regarding critical phenomena and singularity structure [90, 118, 51].

Pretorius and Lehner [174] have presented a general approach for applying AMR to a generic characteristic code. Although the method is designed to treat 3D simulations, the implementation has so far been restricted to the Einstein-Klein-Gordon system in spherical symmetry. The 3D approach is modeled after the Berger and Oliger AMR algorithm for hyperbolic Cauchy problems, which is reformulated in terms of null coordinates. The resulting characteristic AMR algorithm can be applied to any unigrid characteristic code and is amenable to parallelization. They applied it to the problem of a massive Klein-Gordon field propagating outward from a black hole. The non-zero rest mass restricts the Klein-Gordon field from propagating to infinity. Instead it diffuses into higher frequency components which Pretorius and Lehner show can be resolved using AMR but not with a comparison unigrid code.

3.2 {2 + 1}-dimensional codes

One-dimensional characteristic codes enjoy a very special simplicity due to the two preferred sets (ingoing and outgoing) of characteristic null hypersurfaces. This eliminates a source of gauge freedom that otherwise exists in either two- or three-dimensional characteristic codes. However, the manner in which the characteristics of a hyperbolic system determine domains of dependence and lead to propagation equations for shock waves is the same as in the one-dimensional case. This makes it desirable for the purpose of numerical evolution to enforce propagation along characteristics as extensively as possible. In basing a Cauchy algorithm upon shooting along characteristics, the infinity of characteristic rays (technically, bicharacteristics) at each point leads to an arbitrariness which, for a practical numerical scheme, makes it necessary either to average the propagation equations over the sphere of characteristic directions or to select out some preferred subset of propagation equations. The latter approach was successfully applied by Butler [53] to the Cauchy evolution of two-dimensional fluid flow, but there seems to have been very little follow-up along these lines.

The formal ideas behind the construction of two- or three-dimensional characteristic codes are similar, although there are various technical options for treating the angular coordinates which label the null rays. Historically, most characteristic work graduated first from 1D to 2D because of the available computing power.

3.3 The Bondi problem

The first characteristic code based upon the original Bondi equations for a twist-free axisymmetric spacetime was constructed by Isaacson, Welling, and Winicour [137] at Pittsburgh. The spacetime was foliated by a family of null cones, complete with point vertices at which regularity conditions were imposed. The code accurately integrated the hypersurface and evolution equations out to compactified null infinity. This allowed studies of the Bondi mass and radiation flux on the initial null cone, but it could not be used as a practical evolution code because of instabilities.

These instabilities came as a rude shock and led to a retreat to the simpler problem of axisymmetric scalar waves propagating in Minkowski space, with the metric
\(d{s^2} = - d{u^2} - 2du\,dr + {r^2}(d{\theta ^2} + {\sin ^2}\theta \,d{\phi ^2})\)
in outgoing null cone coordinates. A null cone code for this problem was constructed using an algorithm based upon Equation (8), with the angular part of the flat space Laplacian replacing the curvature terms in the integrand on the right hand side. This simple setting allowed one source of instability to be traced to a subtle violation of the CFL condition near the vertices of the cones. In terms of the grid spacing Δxα, the CFL condition in this coordinate system takes the explicit form

where the coefficient K, which is of order 1, depends on the particular startup procedure adopted for the outward integration. Far from the vertex, the condition (10) on the time step Δu is quantitatively similar to the CFL condition for a standard Cauchy evolution algorithm in spherical coordinates. But condition (10) is strongest near the vertex of the cone where (at the equator θ = π/2) it implies that

This is in contrast to the analogous requirement

for stable Cauchy evolution near the origin of a spherical coordinate system. The extra power of Δθ is the price that must be paid near the vertex for the simplicity of a characteristic code. Nevertheless, the enforcement of this condition allowed efficient global simulation of axisymmetric scalar waves. Global studies of backscattering, radiative tail decay, and solitons were carried out for nonlinear axisymmetric waves [137], but three-dimensional simulations extending to the vertices of the cones were impractical at the time on existing machines.

Aware now of the subtleties of the CFL condition near the vertices, the Pittsburgh group returned to the Bondi problem, i.e. to evolve the Bondi metric [46]
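\(d{s^2} = - \left({{V \over r}{e^{2\beta}} - {U^2}{r^2}{e^{2\gamma}}} \right)d{u^2} - 2{e^{2\beta}}du\,dr - 2U{r^2}{e^{2\gamma}}du\,d\theta + {r^2}({e^{2\gamma}}d{\theta ^2} + {e^{- 2\gamma}}{\sin ^2}\theta \,d{\phi ^2})\)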
by means of the three hypersurface equations

and the evolution equation

The beauty of the Bondi equations is that they form a clean hierarchy. Given γ on an initial null hypersurface, the equations can be integrated radially to determine β, U, V, and γ,u on the hypersurface (in that order) in terms of integration constants determined by boundary conditions, or smoothness if extended to the vertex of a null cone. The initial data γ is unconstrained except by smoothness conditions. Because γ represents an axisymmetric spin-2 field, it must be \({\mathcal O}({\sin ^2}\theta)\) near the poles of the spherical coordinates and must consist of ℓ ≥ 2 spin-2 multipoles.
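The first rung of this hierarchy can be sketched numerically. In the axisymmetric vacuum case, Bondi’s β equation reads β,r = ½ r (γ,r)², so β follows from γ on a single outgoing ray by quadrature. The sketch below works along one angular direction, assumes β = 0 at the innermost grid point (a gauge and regularity choice appropriate at a vertex r = 0), uses the trapezoid rule, and omits the subsequent U, V, and γ,u integrations; `integrate_beta` is a hypothetical name.

```python
import numpy as np

def integrate_beta(r, gamma):
    """Integrate beta_{,r} = (1/2) r (gamma_{,r})^2 outward along one ray,
    starting from beta = 0 at r[0] (assumed vertex/gauge condition)."""
    gamma_r = np.gradient(gamma, r)                   # radial derivative of the free data
    rhs = 0.5 * r * gamma_r ** 2
    steps = 0.5 * (rhs[1:] + rhs[:-1]) * np.diff(r)   # trapezoid rule on each cell
    return np.concatenate(([0.0], np.cumsum(steps)))

# Example: gamma = a r^2 on one ray gives beta = a^2 r^4 / 2 exactly.
a = 0.3
r = np.linspace(0.0, 1.0, 401)
beta = integrate_beta(r, a * r ** 2)
```

Each later equation in the hierarchy is likewise a radial ODE whose source uses only quantities already computed, which is what makes the outward march so simple.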

In the computational implementation of this system by the Pittsburgh group [106], the null hypersurfaces were chosen to be complete null cones with nonsingular vertices, which (for simplicity) trace out a geodesic worldline r = 0. The smoothness conditions at the vertices were formulated in local Minkowski coordinates.

The vertices of the cones were not the chief source of difficulty. A null parallelogram marching algorithm, similar to that used in the scalar case, gave rise to another instability that sprang up throughout the grid. In order to reveal the source of this instability, physical considerations suggested looking at the linearized version of the Bondi equations, where they can be related to the wave equation. If this relationship were sufficiently simple, then the scalar wave algorithm could be used as a guide in stabilizing the evolution of γ. A scheme for relating γ to solutions Φ of the wave equation had been formulated in the original paper by Bondi, Metzner, and van der Burg [46]. However, in that scheme, the relationship of the scalar wave to γ was nonlocal in the angular directions and was not useful for the stability analysis.

A local relationship between γ and solutions of the wave equation was found [106]. This provided a test bed for the null evolution algorithm similar to the Cauchy test bed provided by Teukolsky waves [211]. More critically, it allowed a simple von Neumann linear stability analysis of the finite difference equations, which revealed that the evolution would be unstable if the metric quantity U were evaluated at the same grid points as γ, β, and V. For a stable algorithm, the grid points for U must be staggered between the grid points for γ, β, and V. This unexpected feature emphasizes the value of linear stability analysis in formulating stable finite difference approximations.
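The mechanics of a von Neumann analysis can be illustrated on a simpler model than the Bondi equations. For u_t + c u_x = 0 with λ = cΔt/Δx, each Fourier mode e^{ikx} is multiplied per step by an amplification factor g(kΔx), and the scheme is stable only if |g| ≤ 1 for all modes. The sketch below uses standard textbook schemes, not the Pittsburgh algorithm, and its function names are hypothetical. It checks this condition numerically: first-order upwinding is stable for 0 ≤ λ ≤ 1, while forward-time centered-space differencing is unstable for every λ > 0, a reminder that how variables are placed on the grid can decide stability.

```python
import numpy as np

def max_amplification(g, lam, n_modes=512):
    """Largest |g| over Fourier modes theta = k*dx in [0, pi] (von Neumann analysis)."""
    theta = np.linspace(0.0, np.pi, n_modes)
    return np.max(np.abs(g(theta, lam)))

# Amplification factors for u_t + c u_x = 0, with lam = c*dt/dx.
def upwind(theta, lam):
    """First-order upwind differencing (c > 0)."""
    return 1.0 - lam * (1.0 - np.exp(-1j * theta))

def ftcs(theta, lam):
    """Forward-time, centered-space differencing."""
    return 1.0 - 1j * lam * np.sin(theta)

stable = max_amplification(upwind, 0.8)      # equals 1: no mode grows
too_large = max_amplification(upwind, 1.2)   # exceeds 1: CFL violated
centered = max_amplification(ftcs, 0.1)      # exceeds 1 for any lam > 0
```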

It led to an axisymmetric code [165, 106] for the global Bondi problem which ran stably, subject to a CFL condition, throughout the regime in which caustics and horizons did not form. Stability in this regime was verified experimentally by running arbitrary initial data until it radiated away to \({{\mathcal I}^ +}\). Also, new exact solutions as well as the linearized null solutions were used to perform extensive convergence tests that established second order accuracy. The code generated a large complement of highly accurate numerical solutions for the class of asymptotically flat, axisymmetric vacuum spacetimes, a class for which no analytic solutions are known. All results of numerical evolutions in this regime were consistent with the theorem of Christodoulou and Klainerman [65] that weak initial data evolve asymptotically to Minkowski space at late time.

An additional global check on accuracy was performed using Bondi’s formula relating mass loss to the time integral of the square of the news function. The Bondi mass loss formula is not one of the equations used in the evolution algorithm but follows from those equations as a consequence of a global integration of the Bianchi identities. Thus it not only furnishes a valuable tool for physical interpretation but it also provides a very important calibration of numerical accuracy and consistency.
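The mass-loss check can be written as a residual that should vanish up to truncation error. The sketch assumes the axisymmetric case and a normalization in which \(dM_B/du = -(1/4\pi)\oint |N|^2 d\Omega\); other normalizations of N differ by constant factors. The function name and the synthetic news function used to exercise it are hypothetical.

```python
import math
import numpy as np

def mass_loss_residual(u, theta, M, N):
    """Residual of dM/du = -(1/4 pi) * (closed integral of |N|^2 dOmega), axisymmetric case.

    u: (nu,) retarded times; theta: (nt,) angles spanning [0, pi];
    M: (nu,) Bondi mass; N: (nu, nt) complex news function.
    Returns M(u) - M(u[0]) + (integrated mass loss), which should vanish.
    """
    f = np.abs(N) ** 2 * np.sin(theta)                    # |N|^2 times area element
    flux = 2.0 * np.pi * np.sum(0.5 * (f[:, 1:] + f[:, :-1]) * np.diff(theta), axis=1)
    rate = flux / (4.0 * np.pi)                           # equals -dM/du
    lost = np.concatenate(([0.0], np.cumsum(0.5 * (rate[1:] + rate[:-1]) * np.diff(u))))
    return M - M[0] + lost

# Synthetic check: N = A exp(-u^2) sin^2(theta) gives dM/du = -(8/15) A^2 exp(-2u^2).
A = 0.5
u = np.linspace(-4.0, 4.0, 2001)
theta = np.linspace(0.0, np.pi, 401)
N = A * np.exp(-u[:, None] ** 2) * np.sin(theta)[None, :] ** 2 + 0j
erf_term = np.array([math.erf(math.sqrt(2.0) * x) for x in u])
M = 1.0 - (8.0 / 15.0) * A ** 2 * math.sqrt(math.pi / 8.0) * erf_term
residual = mass_loss_residual(u, theta, M, N)
```

In a real evolution M and N come from independent parts of the code, so a residual that converges to zero with grid refinement is a nontrivial test of consistency.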

An interesting feature of the evolution arises in regard to compactification. By construction, the u-direction is timelike at the origin where it coincides with the worldline traced out by the vertices of the outgoing null cones. But even for weak fields, the u-direction generically becomes spacelike at large distances along an outgoing ray. Geometrically, this reflects the property that \({\mathcal I}\) is itself a null hypersurface so that all internal directions are spacelike, except for the null generator. For a flat spacetime, the u-direction picked out at the origin leads to a null evolution direction at \({\mathcal I}\), but this direction becomes spacelike under a slight deviation from spherical symmetry. Thus the evolution generically becomes “superluminal” near \({\mathcal I}\). Remarkably, this leads to no adverse numerical effects. This fortuitous property apparently arises from the natural way that causality is built into the marching algorithm so that no additional resort to numerical techniques, such as “causal differencing” [68], is necessary.

3.3.1 The conformal-null tetrad approach

Stewart has implemented a characteristic evolution code which handles the Bondi problem by a null tetrad, as opposed to metric, formalism [203]. The geometrical algorithm underlying the evolution scheme, as outlined in [205, 87], is Friedrich’s [83] conformal-null description of a compactified spacetime in terms of a first order system of partial differential equations. The variables include the metric, the connection, and the curvature, as in a Newman-Penrose formalism, but in addition the conformal factor (necessary for compactification of \({\mathcal I}\)) and its gradient. Without assuming any symmetry, there are more than 7 times as many variables as in a metric based null scheme, and the corresponding equations do not decompose into as clean a hierarchy. This disadvantage, compared to the metric approach, is balanced by several advantages:

- The equations form a symmetric hyperbolic system so that standard theorems can be used to establish that the system is well-posed.
- Standard evolution algorithms can be invoked to ensure numerical stability.
- The extra variables associated with the curvature tensor are not completely excess baggage, since they supply essential physical information.
- The regularization necessary to treat \({\mathcal I}\) is built in as part of the formalism so that no special numerical regularization techniques are necessary as in the metric case. (This last advantage is somewhat offset by the necessity of having to locate \({\mathcal I}\) by tracking the zeroes of the conformal factor.)

The code was intended to study gravitational waves from an axisymmetric star. Since only the vacuum equations are evolved, the outgoing radiation from the star is represented by data (Ψ4 in Newman-Penrose notation) on an ingoing null cone forming the inner boundary of the evolved domain. The inner boundary data is supplemented by Schwarzschild data on the initial outgoing null cone, which models an initially quiescent state of the star. This provides the necessary data for a double-null initial value problem. The evolution would normally break down where the ingoing null hypersurface develops caustics. But by choosing a scenario in which a black hole is formed, it is possible to evolve the entire region exterior to the horizon. An obvious test bed is the Schwarzschild spacetime for which a numerically satisfactory evolution was achieved (although convergence tests were not reported).

Physically interesting results were obtained by choosing data corresponding to an outgoing quadrupole pulse of radiation. By increasing the initial amplitude of the data Ψ4, it was possible to evolve into a regime where the energy loss due to radiation was large enough to drive the total Bondi mass negative. Although such data is too grossly exaggerated to be consistent with an astrophysically realistic source, the formation of a negative mass was an impressive test of the robustness of the code.

3.3.2 Axisymmetric mode coupling

Papadopoulos [162] has carried out an illuminating study of mode mixing by computing the evolution of a pulse emanating outward from an initially Schwarzschild white hole of mass M. The evolution proceeds along a family of ingoing null hypersurfaces with outer boundary at r = 60 M. The evolution is stopped before the pulse hits the outer boundary in order to avoid spurious effects from reflection and the radiation is inferred from data at r = 20 M. Although gauge ambiguities arise in reading off the waveform at a finite radius, the work reveals interesting nonlinear effects: (i) modification of the light cone structure governing the principal part of the equations and hence the propagation of signals; (ii) modulation of the Schwarzschild potential by the introduction of an angular dependent “mass aspect”; and (iii) quadratic and higher order terms in the evolution equations which couple the spherical harmonic modes. A compactified version of this study [229] was later carried out with the 3D PITT code, which confirms these effects as well as new effects which are not present in the axisymmetric case (see Section 3.8 for details).

3.3.3 Twisting axisymmetry

The Southampton group, as part of its goal of combining Cauchy and characteristic evolution, has developed a code [74, 75, 172] which extends the Bondi problem to full axisymmetry, as described by the general characteristic formalism of Sachs [184]. By dropping the requirement that the rotational Killing vector be twist-free, they were able to include rotational effects, including radiation in the “cross” polarization mode (only the “plus” mode is allowed by twist-free axisymmetry). The null equations and variables were recast into a suitably regularized form to allow compactification of null infinity. Regularization at the vertices or caustics of the null hypersurfaces was not necessary, since they anticipated matching to an interior Cauchy evolution across a finite worldtube.

The code was designed to ensure standard Bondi coordinate conditions at infinity, so that the metric has the asymptotically Minkowskian form corresponding to null-spherical coordinates. In order to achieve this, the hypersurface equation for the Bondi metric variable β must be integrated radially inward from infinity, where the integration constant is specified. The evolution of the dynamical variables proceeds radially outward as dictated by causality [172]. This differs from the Pittsburgh code in which all the equations are integrated radially outward, so that the coordinate conditions are determined at the inner boundary and the metric is asymptotically flat but not asymptotically Minkowskian. The Southampton scheme simplifies the formulae for the Bondi news function and mass in terms of the metric. It is anticipated that the inward integration of β causes no numerical problems because this is a gauge choice which does not propagate physical information. However, the code has not yet been subject to convergence and long term stability tests so that these issues cannot be properly assessed at the present time.

The matching of the Southampton axisymmetric code to a Cauchy interior is discussed in Section 4.5.

3.4 The Bondi mass

Numerical calculations of asymptotic quantities such as the Bondi mass must pick off non-leading terms in an asymptotic expansion about infinity. This is similar to the experimental task of determining the mass of an object by measuring its far field. For example, in an asymptotically inertial frame (called a standard Bondi frame at \({{\mathcal I}^ +}\)), the mass aspect \({\mathcal M}(u,\theta, \phi)\) is picked off from the asymptotic expansion of Bondi’s metric quantity V (see Equation (16)) of the form \(V = r - 2{\mathcal M} + {\mathcal O}(1/r)\). In gauges which incorporate some of the properties of an asymptotically inertial frame, such as the null quasi-spherical gauge [24] in which the angular metric is conformal to the unit sphere metric, this can be a straightforward computational problem. However, the job can be more difficult if the gauge does not correspond to a standard Bondi frame at \({{\mathcal I}^ +}\). One must then deal with an arbitrary coordinatization of \({{\mathcal I}^ +}\) which is determined by the details of the interior geometry. As a result, V has a more complicated asymptotic behavior, given in the axisymmetric case by

where L, H, and K are gauge dependent functions of (u, θ) which would vanish in a Bondi frame [209, 137]. The calculation of the Bondi mass requires regularization of this expression by numerical techniques so that the coefficient \({\mathcal M}\) can be picked off. The task is now similar to the experimental determination of the mass of an object by using non-inertial instruments in a far zone which contains \({\mathcal O}(1/r)\) radiation fields. But it has been done!
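In a standard Bondi frame, where the gauge terms L, H, and K vanish, picking off \({\mathcal M}\) from finite-radius data reduces to an extrapolation in 1/r. The sketch below assumes V has been sampled at several large radii along one angular direction and fits r − V as a low-order polynomial in x = 1/r, whose intercept at x = 0 is \(2{\mathcal M}\). The function name and the fit order are illustrative choices; in a non-Bondi gauge the L, H, and K terms would invalidate this simple fit, which is why the regularization described above is needed.

```python
import numpy as np

def mass_aspect_from_V(r, V, order=2):
    """Estimate the mass aspect from samples of V = r - 2M + O(1/r) at large r,
    assuming a standard Bondi frame: fit r - V as a polynomial in x = 1/r
    and read off the x -> 0 intercept, which equals 2M."""
    r = np.asarray(r, dtype=float)
    x = 1.0 / r
    coeffs = np.polyfit(x, r - np.asarray(V, dtype=float), order)
    return 0.5 * coeffs[-1]        # polyfit lists the constant term last

# Synthetic data with mass aspect 0.7 and O(1/r), O(1/r^2) corrections.
r = np.linspace(50.0, 400.0, 30)
V = r - 2.0 * 0.7 + 3.0 / r - 5.0 / r ** 2
M_est = mass_aspect_from_V(r, V)
```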

It was accomplished in Stewart’s code by re-expressing the formula for the Bondi mass in terms of the well-behaved fields of the conformal formalism [203]. In the Pittsburgh code, it was accomplished by re-expressing the Bondi mass in terms of renormalized metric variables which regularize all calculations at \({{\mathcal I}^ +}\) and make them second order accurate in grid size [100]. The calculation of the Bondi news function (which provides the waveforms of both polarization modes) is an easier numerical task than the Bondi mass. It has also been implemented in both of these codes, thus allowing the important check of the Bondi mass loss formula.

An alternative approach to computing the Bondi mass is to adopt a gauge which corresponds more closely to an inertial or Bondi frame at \({{\mathcal I}^ +}\) and simplifies the asymptotic limit. Such a choice is the null quasi-spherical gauge in which the angular part of the metric is proportional to the unit sphere metric, and as a result the gauge term K vanishes in Equation (18). This gauge was adopted by Bartnik and Norton at Canberra in their development of a 3D characteristic evolution code [24] (see Section 3.5 for further discussion). It allowed accurate computation of the Bondi mass as a limit as r → ∞ of the Hawking mass [21].

Mainstream astrophysics is couched in Newtonian concepts, some of which have no well defined extension to general relativity. In order to provide a sound basis for relativistic astrophysics, it is crucial to develop general relativistic concepts which have well defined and useful Newtonian limits. Mass and radiation flux are fundamental in this regard. The results of characteristic codes show that the energy of a radiating system can be evaluated rigorously and accurately according to the rules for asymptotically flat spacetimes, while avoiding the deficiencies that plagued the “pre-numerical” era of relativity: (i) the use of coordinate dependent concepts such as gravitational energy-momentum pseudotensors; (ii) a rather loose notion of asymptotic flatness, particularly for radiative spacetimes; (iii) the appearance of divergent integrals; and (iv) the use of approximation formalisms, such as weak field or slow motion expansions, whose errors have not been rigorously estimated.

Characteristic codes have extended the role of the Bondi mass from that of a geometrical construct in the theory of isolated systems to that of a highly accurate computational tool. The Bondi mass loss formula provides an important global check on the preservation of the Bianchi identities. The mass loss rates themselves have important astrophysical significance. The numerical results demonstrate that computational approaches, rigorously based upon the geometrical definition of mass in general relativity, can be used to calculate radiation losses in highly nonlinear processes where perturbation calculations would not be meaningful.

Numerical calculation of the Bondi mass has been used to explore both the Newtonian and the strong field limits of general relativity [100]. For a quasi-Newtonian system of radiating dust, the numerical calculation joins smoothly on to a post-Newtonian expansion of the energy in powers of 1/c, beginning with the Newtonian mass and mechanical energy as the leading terms. This comparison with perturbation theory has been carried out to \({\mathcal O}(1/{c^7})\), at which stage the computed Bondi mass peels away from the post-Newtonian expansion. It remains strictly positive, in contrast to the truncated post-Newtonian behavior which leads to negative values.

A subtle feature of the Bondi mass stems from its role as one component of the total energy-momentum 4-vector, whose calculation requires identification of the translation subgroup of the Bondi-Metzner-Sachs group [183]. This introduces boost freedom into the problem. Identifying the translation subgroup is tantamount to knowing the conformal transformation to a standard Bondi frame [209] in which the time slices of \({\mathcal I}\) have unit sphere geometry. Both Stewart’s code and the Pittsburgh code adapt the coordinates to simplify the description of the interior sources. This results in a non-standard foliation of \({\mathcal I}\). The determination of the conformal factor which relates the 2-metric hAB of a slice of \({\mathcal I}\) to the unit sphere metric is an elliptic problem equivalent to solving the second order partial differential equation for the conformal transformation of Gaussian curvature. In the axisymmetric case, the PDE reduces to an ODE with respect to the angle θ, which is straightforward to solve [100]. The integration constants determine the boost freedom along the axis of symmetry.
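For reference, the second order PDE mentioned above can be written out using the standard transformation law for the Gaussian curvature of a 2-metric under a conformal rescaling. The symbols are introduced here for illustration and are not taken from the text: \(\omega\) is the logarithm of the conformal factor, \(\kappa\) is the Gaussian curvature of \(h_{AB}\) (distinct from the gauge term K of Equation (18)), and \(a(\theta)\), \(b(\theta)\) are assumed axisymmetric profile functions.

```latex
\tilde h_{AB} = e^{2\omega} h_{AB}, \qquad
\tilde\kappa = e^{-2\omega}\left(\kappa - \Delta_h \omega\right)
\;\;\Longrightarrow\;\;
\Delta_h \omega = \kappa - e^{2\omega} \quad (\text{requiring } \tilde\kappa = 1),

h_{AB}\,dx^A dx^B = a(\theta)^2 d\theta^2 + b(\theta)^2 d\phi^2 :
\qquad
\kappa = -\frac{1}{ab}\frac{d}{d\theta}\!\left(\frac{1}{a}\frac{db}{d\theta}\right),
\qquad
\frac{1}{ab}\frac{d}{d\theta}\!\left(\frac{b}{a}\frac{d\omega}{d\theta}\right) = \kappa - e^{2\omega}.
```

As a check, for the unit sphere (\(a = 1\), \(b = \sin\theta\)) these give \(\kappa = 1\) and \(\omega = 0\). The axisymmetric equation is a second order ODE in \(\theta\), so its general solution carries two integration constants.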

The non-axisymmetric case is more complicated. Stewart [203] proposes an approach based upon the dyad decomposition \(h_{AB}\,dx^A dx^B = m_A dx^A\, \bar m_B dx^B\).

The desired conformal transformation is obtained by first relating \(h_{AB}\) conformally to the flat metric of the complex plane. Denoting the complex coordinate of the plane by ζ, this relationship can be expressed as \(d\zeta = e^{f} m_A\, dx^A\). The (complex) conformal factor f can then be determined from the integrability condition \(\partial_{[A}\left(e^{f} m_{B]}\right) = 0\), i.e. \(m_{[A}\partial_{B]} f = \partial_{[A} m_{B]}\).

This is equivalent to the classic Beltrami equation for finding isothermal coordinates. It would appear to be a more effective scheme than tackling the second order PDE directly, but numerical implementation has not yet been carried out.
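The integrability condition can be checked numerically on a case where the answer is known. The following is a minimal sketch under assumed choices that do not come from the text: the unit sphere, the dyad \(m_A dx^A = d\theta + i\sin\theta\, d\phi\), and the factor \(e^{f} = e^{i\phi}/(2\cos^2(\theta/2))\), which corresponds to the stereographic coordinate \(\zeta = \tan(\theta/2)e^{i\phi}\). The sketch verifies a known solution. It does not solve the Beltrami equation.

```python
import cmath
import math

# Illustrative choices (assumptions, not from the text): unit sphere with dyad
# m_A dx^A = d(theta) + i sin(theta) d(phi), so that m_A conj(m_B) dx^A dx^B
# = d(theta)^2 + sin^2(theta) d(phi)^2, and e^f = e^{i phi} / (2 cos^2(theta/2)).

def ef_m(theta: float, phi: float) -> tuple[complex, complex]:
    """Components (e^f m_theta, e^f m_phi) of the one-form e^f m_A dx^A."""
    ef = cmath.exp(1j * phi) / (2.0 * math.cos(theta / 2.0) ** 2)
    return ef, ef * 1j * math.sin(theta)

def curl(theta: float, phi: float, h: float = 1e-5) -> complex:
    """Centered-difference estimate of d_theta(e^f m_phi) - d_phi(e^f m_theta).

    Its vanishing is the integrability condition for d zeta = e^f m_A dx^A.
    """
    d_theta = (ef_m(theta + h, phi)[1] - ef_m(theta - h, phi)[1]) / (2.0 * h)
    d_phi = (ef_m(theta, phi + h)[0] - ef_m(theta, phi - h)[0]) / (2.0 * h)
    return d_theta - d_phi

def zeta(theta: float, phi: float) -> complex:
    """Stereographic coordinate whose differential the closed form should reproduce."""
    return math.tan(theta / 2.0) * cmath.exp(1j * phi)

theta0, phi0, h = 1.0, 0.3, 1e-5
print(abs(curl(theta0, phi0)))  # zero up to finite-difference error
dzeta_dtheta = (zeta(theta0 + h, phi0) - zeta(theta0 - h, phi0)) / (2.0 * h)
print(abs(dzeta_dtheta - ef_m(theta0, phi0)[0]))  # also zero up to finite-difference error
```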

3.5 3D characteristic evolution

There has been rapid progress in 3D characteristic evolution. There are now two independent 3D codes, one developed at Canberra and the other at Pittsburgh (the PITT code), which have the capability to study gravitational waves in single black hole spacetimes, at a level still not mastered by Cauchy codes. Several years ago the Pittsburgh group established robust stability and second order accuracy of a fully nonlinear 3D code which calculates waveforms at null infinity [42, 31] and also tracks a dynamical black hole and excises its internal singularity from the computational grid [105, 102]. The Canberra group has implemented an independent nonlinear 3D code which accurately evolves the exterior region of a Schwarzschild black hole. Both codes pose data on an initial null hypersurface and on a worldtube boundary, and evolve the exterior spacetime out to a compactified version of null infinity, where the waveform is computed. However, there are essential differences in the underlying geometrical formalisms and numerical techniques used in the two codes and in their success in evolving generic black hole spacetimes.

3.5.1 Geometrical formalism

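The PITT code uses a standard Bondi-Sachs null coordinate system,

\[ds^2 = -\left(e^{2\beta}\frac{V}{r} - r^2 h_{AB}U^A U^B\right)du^2 - 2e^{2\beta}du\,dr - 2r^2 h_{AB}U^B du\,dx^A + r^2 h_{AB}\,dx^A dx^B,\]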

where \(\det(h_{AB}) = \det(q_{AB})\) for some standard choice \(q_{AB}\) of the unit sphere metric. This generalizes Equation (13) to the three-dimensional case. The hypersurface equations derive from the \({G_\mu}^\nu \nabla_\nu u\) components of the Einstein tensor. They take the explicit form

where \(D_A\) is the covariant derivative and \({\mathcal R}\) the curvature scalar of the conformal 2-metric \(h_{AB}\) of the r = const. surfaces, and capital Latin indices are raised and lowered with \(h_{AB}\). Given the null data \(h_{AB}\) on an outgoing null hypersurface, this hierarchy of equations can be integrated radially in order to determine β, \(U^A\) and V on the hypersurface in terms of integration constants on an inner boundary. The evolution equations for the u-derivative of the null data derive from the trace-free part of the angular components of the Einstein tensor, i.e. the components \(m^A m^B G_{AB}\), where \(h^{AB} = 2m^{(A}\bar m^{B)}\). They take the explicit form

The Canberra code employs a null quasi-spherical (NQS) gauge (not to be confused with the quasi-spherical approximation in which quadratically aspherical terms are ignored [42]). The NQS gauge takes advantage of the possibility of mapping the angular part of the Bondi metric conformally onto a unit sphere metric, so that \(h_{AB} \to q_{AB}\). The required transformation \(x^A \to y^A(u, r, x^B)\) is in general dependent upon u and r, so that the NQS angular coordinates \(y^A\) are not constant along the outgoing null rays, unlike the Bondi-Sachs angular coordinates. Instead the coordinates \(y^A\) display the analogue of a shift on the null hypersurfaces u = const. In addition, the NQS spheres (u, r) = const. are not the same as the Bondi spheres. The radiation content of the metric is contained in a shear vector describing this shift. This results in the description of the radiation in terms of a spin-weight 1 field, rather than the spin-weight 2 field associated with \(h_{AB}\) in the Bondi-Sachs formalism. In both the Bondi-Sachs and NQS gauges, the independent gravitational data on a null hypersurface is the conformal part of its degenerate 3-metric. The Bondi-Sachs null data consist of \(h_{AB}\), which determines the intrinsic conformal metric of the null hypersurface. In the NQS case, \(h_{AB} = q_{AB}\) and the shear vector comprises the only non-trivial part of the conformal 3-metric. Both the Bondi-Sachs and NQS gauges can be arranged to coincide in the special case of shear-free Robinson-Trautman metrics [71, 20].

The formulation of Einstein’s equations in the NQS gauge is presented in [19], and the associated gauge freedom arising from (u, r)-dependent rotations and boosts of the unit sphere is discussed in [20]. As in the PITT code, the main equations involve integrating a hierarchy of hypersurface equations along the radial null geodesics extending from the inner boundary to null infinity. In the NQS gauge the source terms for these radial ODEs are rather simple when the unknowns are chosen to be the connection coefficients. However, as a price to pay for this simplicity, after the radial integrations are performed on each null hypersurface, a first order elliptic equation must be solved on each r = const. cross-section to reconstruct the underlying metric.
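Both codes rely on integrating hypersurface equations outward along radial null rays, starting from integration constants on the inner boundary. As a minimal sketch, the example below uses the simplest member of such a hierarchy: the β equation in Bondi's original twist-free axisymmetric form, \(\beta_{,r} = \tfrac{r}{2}(\gamma_{,r})^2\). It assumes the null data are given as \(h_{AB}dx^A dx^B = e^{2\gamma}d\theta^2 + e^{-2\gamma}\sin^2\theta\, d\phi^2\). The function name and the cumulative trapezoidal rule are illustrative choices and not the discretization used in either code.

```python
import numpy as np

def integrate_beta(r: np.ndarray, gamma: np.ndarray, beta_inner: float = 0.0) -> np.ndarray:
    """Radially integrate beta_{,r} = (r/2) (gamma_{,r})^2 outward from r[0] (hypothetical helper).

    beta_inner plays the role of the integration constant on the inner boundary.
    """
    gamma_r = np.gradient(gamma, r, edge_order=2)  # second-order radial derivative of the null data
    source = 0.5 * r * gamma_r ** 2
    beta = np.empty_like(r)
    beta[0] = beta_inner
    # cumulative trapezoidal rule, second order accurate in the radial spacing
    beta[1:] = beta_inner + np.cumsum(0.5 * (source[1:] + source[:-1]) * np.diff(r))
    return beta

# Usage: gamma = c / r has the closed-form solution
# beta(r) = beta(R) + (c^2 / 4) (1/R^2 - 1/r^2), which the sketch should reproduce.
c, R = 0.3, 2.0
r = np.linspace(R, 20.0, 2001)
beta = integrate_beta(r, c / r, beta_inner=0.1)
exact = 0.1 + 0.25 * c**2 * (1.0 / R**2 - 1.0 / r**2)
print(np.max(np.abs(beta - exact)))
```

In a full 3D code, the later members of the hierarchy (\(U^A\), then V) would be integrated in the same outward sweep, each using the previously computed fields as sources.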

3.5.2 Numerical methodology

The PITT code is an explicit second order finite difference evolution algorithm based upon retarded time steps on a uniform three-dimensional null coordinate grid. The straightforward numerical approach and the second order convergence of the finite difference equations have facilitated code development. The Canberra code uses an assortment of novel and elegant numerical methods. Most of these involve smoothing or filtering and have an obvious advantage in removing short wavelength noise, but would be unsuitable for modeling shocks.
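Second order convergence is typically confirmed by comparing results at three resolutions. The sketch below applies this to a centered difference, which stands in for a full evolution. It uses the generic three-grid estimate \(p = \log_2(|f_h - f_{h/2}| / |f_{h/2} - f_{h/4}|)\). This formula needs no exact solution, and it is not necessarily the specific procedure used in the cited testbeds.

```python
import math

def centered_derivative(f, x: float, h: float) -> float:
    """Second-order centered difference approximation to f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def observed_order(coarse: float, medium: float, fine: float) -> float:
    """Convergence order from results at spacings h, h/2, h/4.

    Uses only differences between resolutions, so no exact solution is needed:
    for an order-p scheme, |coarse - medium| / |medium - fine| -> 2**p.
    """
    return math.log2(abs(coarse - medium) / abs(medium - fine))

h = 0.1
results = [centered_derivative(math.sin, 1.0, h / 2**k) for k in range(3)]
print(observed_order(*results))  # close to 2 for a second order scheme
```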

Both codes require the ability to handle tensor fields and their derivatives on the sphere. Spherical coordinates and spherical harmonics are natural analytic tools for the description of radiation, but their implementation in computational work requires dealing with the impossibility of smoothly covering the sphere with a single coordinate grid. Polar coordinate singularities in axisymmetric systems can be regularized by standard tricks. In the absence of symmetry, these techniques do not generalize and would be especially prohibitive to develop for tensor fields.

A crucial ingredient of the PITT code is the eth-module [104] which incorporates a computational version of the Newman-Penrose eth-formalism [159]. The eth-module covers the sphere with two overlapping stereographic coordinate grids (North and South). It provides everywhere regular, second order accurate, finite difference expressions for tensor fields on the sphere and their covariant derivatives. The eth-calculus simplifies the underlying equations, avoids spurious coordinate singularities and allows accurate differentiation of tensor fields on the sphere in a computationally efficient and clean way. Its main weakness is the numerical noise introduced by interpolations (fourth order accurate) between the North and South patches. For parabolic or elliptic equations on the sphere, the finite difference approach of the eth-calculus would be less efficient than a spectral approach, but no parabolic or elliptic equations appear in the Bondi-Sachs evolution scheme.
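The two-patch idea can be illustrated with plain stereographic maps. In this sketch, the conventions \(\zeta_N = \tan(\theta/2)e^{i\phi}\) and \(\zeta_S = 1/\zeta_N\), the hemisphere-based patch selection, and all function names are assumptions for illustration. The sketch does not reproduce the eth-module's grids, overlap margins, or spin-weight transformation rules. It shows that each patch is regular where it is used, that the two coordinates are related by inversion in the overlap, and that the unit sphere metric takes the same conformally flat form \(4\,d\zeta\, d\bar\zeta/(1+\zeta\bar\zeta)^2\) in either patch.

```python
import cmath
import math

# Assumed convention (hypothetical): zeta_N = tan(theta/2) e^{i phi}, zeta_S = 1/zeta_N.

def zeta_north(theta: float, phi: float) -> complex:
    """North-patch stereographic coordinate (singular only at theta = pi)."""
    return math.tan(theta / 2.0) * cmath.exp(1j * phi)

def zeta_south(theta: float, phi: float) -> complex:
    """South-patch stereographic coordinate (singular only at theta = 0)."""
    # cot(theta/2) written as cos/sin so that theta = pi maps cleanly to 0
    return (math.cos(theta / 2.0) / math.sin(theta / 2.0)) * cmath.exp(-1j * phi)

def angles_from_north(z: complex) -> tuple[float, float]:
    return 2.0 * math.atan(abs(z)), cmath.phase(z)

def angles_from_south(z: complex) -> tuple[float, float]:
    return math.pi - 2.0 * math.atan(abs(z)), -cmath.phase(z)

def choose_patch(theta: float, phi: float) -> tuple[str, complex]:
    """Use the patch in which |zeta| <= 1, i.e. the hemisphere containing the point."""
    if theta <= math.pi / 2.0:
        return "N", zeta_north(theta, phi)
    return "S", zeta_south(theta, phi)

def sphere_conformal_factor(z: complex) -> float:
    """Unit-sphere metric is 4 dz dzbar / (1 + |z|^2)^2 in either patch."""
    return 4.0 / (1.0 + abs(z) ** 2) ** 2

patch, z = choose_patch(2.5, 1.0)
print(patch, abs(z), angles_from_south(z))  # "S", |z| < 1, angles (2.5, 1.0) recovered
```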

The Canberra code handles fields on the sphere by means of a 3-fold representation: (i) as discretized functions on a spherical grid uniformly spaced in standard (θ, ϕ) coordinates, (ii) as fast Fourier transforms with respect to (θ, ϕ) (based upon a smooth map of the torus onto the sphere), and (iii) as a spectral decomposition of scalar, vector, and tensor fields in terms of spin-weighted spherical harmonics. The grid values are used in carrying out nonlinear algebraic operations; the Fourier representation is used to calculate (θ, ϕ)-derivatives; and the spherical harmonic representation is used to solve global problems, such as the solution of the first order elliptic equation for the reconstruction of the metric, whose unique solution requires pinning down the ℓ = 1 gauge freedom. The sizes of the grid and of the Fourier and spherical harmonic representations are coordinated. In practice, the spherical harmonic expansion is carried out to 15th order in ℓ, but the resulting coefficients must then be projected into the ℓ ≤ 10 subspace in order to avoid inconsistencies between the spherical harmonic, grid, and Fourier representations.
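The use of a Fourier representation for angular derivatives can be illustrated in one periodic direction. The sketch below assumes a 2π-periodic function sampled at equally spaced points in ϕ. It differentiates spectrally and applies a sharp mode cutoff, which only loosely resembles the ℓ ≤ 10 projection described above; that projection acts on spin-weighted spherical harmonics, not on Fourier modes. The torus-to-sphere map and the spherical harmonic stage are not reproduced, and the function names are hypothetical.

```python
import numpy as np

def fourier_derivative(samples: np.ndarray) -> np.ndarray:
    """d/dphi of a 2*pi-periodic function sampled at n equally spaced points."""
    n = samples.size
    m = np.fft.fftfreq(n, d=1.0 / n)  # integer wavenumbers 0, 1, ..., -1
    coeffs = np.fft.fft(samples)
    if n % 2 == 0:
        coeffs[n // 2] = 0.0          # the unpaired Nyquist mode has no clean derivative
    return np.real(np.fft.ifft(1j * m * coeffs))

def truncate(samples: np.ndarray, m_max: int) -> np.ndarray:
    """Project onto Fourier modes with |m| <= m_max (a crude analogue of a spectral cutoff)."""
    n = samples.size
    m = np.fft.fftfreq(n, d=1.0 / n)
    coeffs = np.fft.fft(samples)
    coeffs[np.abs(m) > m_max] = 0.0
    return np.real(np.fft.ifft(coeffs))

phi = np.linspace(0.0, 2.0 * np.pi, 32, endpoint=False)
f = np.sin(3 * phi) + 0.5 * np.cos(5 * phi)
df = fourier_derivative(f)                   # spectrally accurate for resolved modes
g = truncate(np.sin(3 * phi) + np.sin(12 * phi), 10)  # leaves only sin(3 phi)
```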

The Canberra code solves the null hypersurface equations by combining an 8th order Runge-Kutta integration with a convolution spline to interpolate field values. The radial grid points are dynamically positioned to approximate ingoing null geodesics, a technique originally due to Goldwirth and Piran [95] to avoid the problems with a uniform r-grid near a horizon which arise from the degeneracy of an areal coordinate on a stationary horizon. The time evolution uses the method of lines with a fourth order Runge-Kutta integrator, which introduces further high frequency filtering.
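The method of lines with a fourth order Runge-Kutta integrator can be sketched on a toy problem, the periodic advection equation \(u_t + u_x = 0\). This is an assumption made for illustration: the sketch discretizes in space with second-order centered differences, whereas the Canberra code uses spectral and spline techniques. For purely imaginary eigenvalues \(\lambda\), the RK4 amplification factor satisfies \(|G(z)| < 1\) for \(0 < |z| < 2\sqrt{2}\), with \(z = \lambda\,\Delta t\). This slight damping is the kind of high frequency filtering the integrator introduces.

```python
import numpy as np

def rhs(u: np.ndarray, dx: float) -> np.ndarray:
    """Centered second-order approximation to -du/dx on a periodic grid."""
    return -(np.roll(u, -1) - np.roll(u, 1)) / (2.0 * dx)

def rk4_step(u: np.ndarray, dt: float, dx: float) -> np.ndarray:
    """One classical fourth order Runge-Kutta step of the semi-discrete system."""
    k1 = rhs(u, dx)
    k2 = rhs(u + 0.5 * dt * k1, dx)
    k3 = rhs(u + 0.5 * dt * k2, dx)
    k4 = rhs(u + dt * k3, dx)
    return u + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

def rk4_gain(z: float) -> float:
    """|G| for a purely imaginary eigenvalue times dt, i.e. argument i*z."""
    w = 1j * z
    return abs(1.0 + w + w**2 / 2.0 + w**3 / 6.0 + w**4 / 24.0)

n = 200
dx = 1.0 / n
x = np.arange(n) * dx
u = np.sin(2.0 * np.pi * x)
dt = 0.5 * dx
for _ in range(400):  # evolve to t = 1, one full period
    u = rk4_step(u, dt, dx)
err = np.max(np.abs(u - np.sin(2.0 * np.pi * x)))
print(err, rk4_gain(1.0))  # small phase error; gain slightly below 1
```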

3.5.3 Stability

3.5.3.1 PITT code

Analytic stability analysis of the finite difference equations has been crucial in the development of a stable evolution algorithm, subject to the standard Courant-Friedrichs-Lewy (CFL) condition for an explicit code. Linear stability analysis on Minkowski and Schwarzschild backgrounds showed that certain field variables must be represented on the half-grid [106, 42]. Nonlinear stability analysis was essential in revealing and curing a mode coupling instability that was not present in the original axisymmetric version of the code [31, 142]. This has led to a code whose stability persists even in the regime where the u-direction, along which the grid flows, becomes spacelike, such as outside the velocity of light cone in a rotating coordinate system. Severe tests were used to verify stability. In the linear regime, robust stability was established by imposing random initial data on the initial characteristic hypersurface and random constraint violating boundary data on an inner worldtube. (Robust stability was later adopted as one of the standardized tests for Cauchy codes [5].) The code ran stably for 10,000 grid crossing times under these conditions [42]. The PITT code was the first 3D general relativistic code to pass this robust stability test. The use of random data is only possible in sufficiently weak cases where effective energy terms quadratic in the field gradients are not dominant. Stability in the highly nonlinear regime was tested in two ways. Runs for a time of 60,000 M were carried out for a moving, distorted Schwarzschild black hole (of mass M), with the marginally trapped surface at the inner boundary tracked and its interior excised from the computational grid [102, 103]. This remains one of the longest simulations of a dynamic black hole carried out to date.
Furthermore, the scattering of a gravitational wave off a Schwarzschild black hole was successfully carried out in the extreme nonlinear regime where the backscattered Bondi news was as large as N = 400 (in dimensionless geometric units) [31], showing that the code can cope with the enormous power output \(N^2 c^5/G \approx 10^{60}\) W in conventional units. This exceeds the power that would be produced if, in 1 second, the entire galaxy were converted to gravitational radiation.
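The logic of a robust stability test (random data, then monitoring whether a norm grows) can be illustrated on a toy explicit scheme. This minimal sketch makes the following assumptions, none of which come from the text: first-order upwind advection on a periodic grid stands in for the PITT code, and only random initial data are used, with no random boundary data. Random data excite every mode, including the highest frequency one. Above the CFL limit (Courant number C > 1), that mode is amplified by a factor \(|1 - 2C|\) per step, so the instability shows up quickly, while for C ≤ 1 the norm cannot grow.

```python
import math
import random

def upwind_step(u: list[float], courant: float) -> list[float]:
    """First-order upwind update for u_t + u_x = 0 on a periodic grid (u[-1] wraps around)."""
    return [u[i] - courant * (u[i] - u[i - 1]) for i in range(len(u))]

def l2_norm(u: list[float]) -> float:
    return math.sqrt(sum(x * x for x in u))

def norm_growth(courant: float, steps: int = 200, n: int = 64, seed: int = 1) -> float:
    """Ratio of final to initial l2 norm, starting from random data (hypothetical test harness)."""
    rng = random.Random(seed)
    u = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    n0 = l2_norm(u)
    for _ in range(steps):
        u = upwind_step(u, courant)
    return l2_norm(u) / n0

print(norm_growth(0.8))  # at most 1: stable under the CFL condition
print(norm_growth(1.5))  # enormous: CFL condition violated
```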

3.5.3.2 Canberra code

Analytic stability analysis of the underlying finite difference equations is impractical because of the extensive mix of spectral techniques, higher order methods, and splines. Although there is no clear-cut CFL limit on the code, stability tests show that there is a limit on the time step. The damping of high frequency modes due to the implicit filtering would be expected to suppress numerical instability, but the stability of the Canberra code is nevertheless subject to two qualifications [22, 23, 24]: (i) After a relatively short time (less than 100 M), the evolution terminates as it approaches an event horizon, apparently because of a breakdown of the NQS gauge condition, although an analysis of how and why this should occur has not yet been given. (ii) Numerical instabilities arise from dynamic inner boundary conditions and restrict the inner boundary to a fixed Schwarzschild horizon. Tests in the extreme nonlinear regime were not reported.

3.5.4 Accuracy

3.5.4.1 PITT code

Second order accuracy has been verified in a large number of testbeds [42, 31, 102, 228, 229], including new exact solutions specifically constructed in null coordinates for the purpose of convergence tests:

-