Abstract

The article reviews the statistical theory of signal detection in application to analysis of deterministic gravitational-wave signals in the noise of a detector. Statistical foundations for the theory of signal detection and parameter estimation are presented. Several tools needed for both theoretical evaluation of the optimal data analysis methods and for their practical implementation are introduced. They include optimal signal-to-noise ratio, Fisher matrix, false alarm and detection probabilities, \({\mathcal F}\)-statistic, template placement, and fitting factor. These tools apply to the case of signals buried in a stationary and Gaussian noise. Algorithms to efficiently implement the optimal data analysis techniques are discussed. Formulas are given for a general gravitational-wave signal that includes as special cases most of the deterministic signals of interest.


1 Introduction

In this review we consider the problem of detection of deterministic gravitational-wave signals in the noise of a detector and the question of estimation of their parameters. The examples of deterministic signals are gravitational waves from rotating neutron stars, coalescing compact binaries, and supernova explosions. The case of detection of stochastic gravitational-wave signals in the noise of a detector is reviewed in [5]. A very powerful method to detect a signal in noise that is optimal by several criteria consists of correlating the data with the template that is matched to the expected signal. This matched-filtering technique is a special case of the maximum likelihood detection method. In this review we describe the theoretical foundation of the method and we show how it can be applied to the case of a very general deterministic gravitational-wave signal buried in a stationary and Gaussian noise.

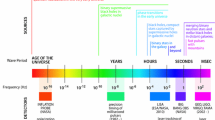

Early gravitational-wave data analysis was concerned with the detection of bursts originating from supernova explosions [99]. It involved analysis of the coincidences among the detectors [52]. With the growing interest in broadband laser interferometric gravitational-wave detectors, it was realized that sources other than supernovae can also be detectable [92] and that they can provide a wealth of astrophysical information [85, 59]. For example, the analytic form of the gravitational-wave signal from a binary system is known in terms of a few parameters to a good approximation. Consequently, one can detect such a signal by correlating the data with the waveform of the signal and maximizing the correlation with respect to the parameters of the waveform. Using this method one can pick up a weak signal from the noise by building a large signal-to-noise ratio over a wide bandwidth of the detector [92]. This observation has led to a rapid development of the theory of gravitational-wave data analysis. It became clear that the detectability of sources is determined by the optimal signal-to-noise ratio, Equation (24), whose square is proportional to the power spectrum of the signal divided by the power spectrum of the noise, integrated over the bandwidth of the detector.

An important landmark was a workshop entitled Gravitational Wave Data Analysis held in Dyffryn House and Gardens, St. Nicholas near Cardiff, in July 1987 [86]. The meeting acquainted physicists interested in analyzing gravitational-wave data with the basics of the statistical theory of signal detection and its application to the detection of gravitational-wave sources. As a result of subsequent studies the Fisher information matrix was introduced to the theory of the analysis of gravitational-wave data [40, 58]. The diagonal elements of the inverse of the Fisher matrix give lower bounds on the variances of the estimators of the parameters of the signal and can be used to assess the quality of astrophysical information that can be obtained from detections of gravitational-wave signals [32, 57, 18]. It was also realized that the application of matched filtering to some sources, notably to continuous sources originating from neutron stars, will require extraordinarily large computing resources. This gave a further stimulus to the development of optimal and efficient algorithms and data analysis methods [87].

A very important development was the work by Cutler et al. [31], where it was realized that for the case of coalescing binaries matched filtering is sensitive to very small post-Newtonian effects of the waveform. Thus these effects can be detected. This leads to a much better verification of Einstein’s theory of relativity and provides a wealth of astrophysical information that would make a laser interferometric gravitational-wave detector a true astronomical observatory complementary to those utilizing the electromagnetic spectrum. As further developments of the theory, methods were introduced to calculate the quality of suboptimal filters [9], to calculate the number of templates needed to do a search using matched filtering [74], to determine the accuracy of templates required [24], and to calculate the false alarm probability and thresholds [50]. An important point is the reduction of the number of parameters that one needs to search for in order to detect a signal. Namely, estimators of a certain type of parameters, called extrinsic parameters, can be found in a closed analytic form and consequently eliminated from the search. Thus a computationally intensive search need only be performed over a reduced set of intrinsic parameters [58, 50, 60].

Techniques reviewed in this paper have been used in the data analysis of prototypes of gravitational-wave detectors [73, 71, 6] and in the data analysis of presently working gravitational-wave detectors [90, 15, 3, 2, 1].

We use units such that the velocity of light c=1.

2 Response of a Detector to a Gravitational Wave

There are two main methods to detect gravitational waves which have been implemented in the currently working instruments. One method is to measure changes induced by gravitational waves on the distances between freely moving test masses using coherent trains of electromagnetic waves. The other method is to measure the deformation of large masses at their resonance frequencies induced by gravitational waves. The first idea is realized in laser interferometric detectors and Doppler tracking experiments [82, 65] whereas the second idea is implemented in resonant mass detectors [13].

Let us consider the response to a plane gravitational wave of a freely falling configuration of masses. It is enough to consider a configuration of three masses shown in Figure 1 to obtain the response for all currently working and planned detectors. Two masses model a Doppler tracking experiment where one mass is the Earth and the other one is a distant spacecraft. Three masses model a ground-based laser interferometer where the masses are suspended from seismically isolated supports or a space-borne interferometer where the three masses are shielded in satellites driven by drag-free control systems.

Figure 1: Schematic configuration of three freely falling masses as a detector of gravitational waves. The masses are labelled 1, 2, and 3; their positions with respect to the origin O of the coordinate system are given by the vectors \(\mathbf{x}_a\) (a = 1, 2, 3). The Euclidean separations between the masses are denoted by \(L_a\), where the index a corresponds to the opposite mass. The unit vectors \(\mathbf{n}_a\) point between pairs of masses, with the orientation indicated.

In Figure 1 we have introduced the following notation: O denotes the origin of the TT coordinate system related to the passing gravitational wave, \(\mathbf{x}_a\) (a = 1, 2, 3) are 3-vectors joining O and the masses, and \(\mathbf{n}_a\) and \(L_a\) (a = 1, 2, 3) are, respectively, 3-vectors of unit Euclidean length along the lines joining the masses and the coordinate Euclidean distances between the masses, where a is the label of the opposite mass. Let us also denote by \(\mathbf{k}\) the unit 3-vector directed from the origin O to the source of the gravitational wave. We first assume that the spatial coordinates of the masses do not change in time.

Let \(\nu_0\) be the frequency of the coherent beam used in the detector (laser light in the case of an interferometer and radio waves in the case of Doppler tracking). Let \(y_{21}\) be the relative change \(\Delta\nu/\nu_0\) of frequency induced by a transverse, traceless, plane gravitational wave on the coherent beam travelling from the mass 2 to the mass 1, and let \(y_{31}\) be the relative change of frequency induced on the beam travelling from the mass 3 to the mass 1. The frequency shifts \(y_{21}\) and \(y_{31}\) are given by [37, 10, 83]

\[ y_{21}(t) = \left(1 - \mathbf{k}\cdot\mathbf{n}_3\right)\left[\Psi_3(t + \mathbf{k}\cdot\mathbf{x}_2 - L_3) - \Psi_3(t + \mathbf{k}\cdot\mathbf{x}_1)\right], \tag{1} \]
\[ y_{31}(t) = \left(1 + \mathbf{k}\cdot\mathbf{n}_2\right)\left[\Psi_2(t + \mathbf{k}\cdot\mathbf{x}_3 - L_2) - \Psi_2(t + \mathbf{k}\cdot\mathbf{x}_1)\right], \tag{2} \]

where (here T denotes matrix transposition)

\[ \Psi_a(t) := \frac{\mathbf{n}_a^{\rm T}\cdot{\rm H}(t)\cdot\mathbf{n}_a}{2\left[1 - (\mathbf{k}\cdot\mathbf{n}_a)^2\right]}. \tag{3} \]

In Equation (3) H is the three-dimensional matrix of the spatial metric perturbation produced by the wave in the TT coordinate system. If one chooses spatial TT coordinates such that the wave is travelling in the +z direction, then the matrix H is given by

\[ {\rm H}(t) = \begin{pmatrix} h_+(t) & h_\times(t) & 0 \\ h_\times(t) & -h_+(t) & 0 \\ 0 & 0 & 0 \end{pmatrix}, \tag{4} \]

where h+ and h× are the two polarizations of the wave.

Real gravitational-wave detectors do not stay at rest with respect to the TT coordinate system related to the passing gravitational wave, because they also move in the gravitational field of the solar system bodies, as in the case of the LISA spacecraft, or are fixed to the surface of Earth, as in the case of Earth-based laser interferometers or resonant bar detectors. Let us choose the origin O of the TT coordinate system to coincide with the solar system barycenter (SSB). The motion of the detector with respect to the SSB will modulate the gravitational-wave signal registered by the detector. One can show that as long as the velocities of the masses (modelling the detector’s parts) with respect to the SSB are nonrelativistic, which is the case for all existing or planned detectors, Equations (1) and (2) can still be used, provided the 3-vectors \(\mathbf{x}_a\) and \(\mathbf{n}_a\) (a = 1, 2, 3) are interpreted as having time-dependent components that describe the motion of the masses with respect to the SSB.

It is often convenient to introduce the proper reference frame of the detector with coordinates \(({x^{\hat \alpha}})\). Because the motion of this frame with respect to the SSB is nonrelativistic, we can assume that the transformation between the SSB-related coordinates (xα) and the detector’s coordinates \(({x^{\hat \alpha}})\) has the form

\[ \hat t = t, \qquad x^{\hat i}(t, x^j) = x_{\hat O}^{\hat i}(t) + O_j^{\hat i}(t)\, x^j, \tag{5} \]

where the functions \(x_{\hat O}^{\hat i}(t)\) describe the motion of the origin Ô of the proper reference frame with respect to the SSB, and the functions \(O_j^{\hat i}(t)\) account for the different orientations of the spatial axes of the two reference frames. One can compute some of the quantities entering Equations (1) and (2) in the detector’s coordinates rather than in the TT coordinates. For instance, the matrix Ĥ of the wave-induced spatial metric perturbation in the detector’s coordinates is related to the matrix of the spatial metric perturbation produced by the wave in the TT coordinate system through equation

\[ \hat{\rm H}(t) = \left({\rm O}(t)^{-1}\right)^{\rm T}\cdot{\rm H}(t)\cdot{\rm O}(t)^{-1}, \tag{6} \]

where the matrix O has elements \({O^{\hat i}}_j\). If the transformation matrix O is orthogonal, then \({\rm O}^{-1} = {\rm O}^{\rm T}\), and Equation (6) simplifies to

\[ \hat{\rm H}(t) = {\rm O}(t)\cdot{\rm H}(t)\cdot{\rm O}(t)^{\rm T}. \tag{7} \]

See [23, 42, 50, 60] for more details.

For a standard Michelson, equal-arm interferometric configuration, Δν is given in terms of the one-way frequency changes \(y_{21}\) and \(y_{31}\) (see Equations (1) and (2) with \(L_2 = L_3 = L\), where we assume that the mass 1 corresponds to the corner station of the interferometer) by the expression [93]

\[ \frac{\Delta\nu(t)}{\nu_0} = \left[y_{31}(t) + y_{13}(t - L)\right] - \left[y_{21}(t) + y_{12}(t - L)\right]. \tag{8} \]

In the long-wavelength approximation Equation (8) reduces to

\[ \frac{\Delta\nu(t)}{\nu_0} = L\left(\mathbf{n}_3^{\rm T}\cdot\frac{{\rm d}{\rm H}(t)}{{\rm d}t}\cdot\mathbf{n}_3 - \mathbf{n}_2^{\rm T}\cdot\frac{{\rm d}{\rm H}(t)}{{\rm d}t}\cdot\mathbf{n}_2\right). \tag{9} \]

The difference of the phase fluctuations ΔΦ(t) measured, say, by a photo detector, is related to the corresponding relative frequency fluctuations Δν by

\[ \frac{\Delta\nu(t)}{\nu_0} = \frac{1}{2\pi\nu_0}\frac{{\rm d}\Delta\Phi(t)}{{\rm d}t}. \tag{10} \]

By virtue of Equation (9) the phase change can be written as

\[ \Delta\Phi(t) = 4\pi\nu_0 L\, h(t), \tag{11} \]

where the function h,

\[ h(t) := \frac{1}{2}\left(\mathbf{n}_3^{\rm T}\cdot{\rm H}(t)\cdot\mathbf{n}_3 - \mathbf{n}_2^{\rm T}\cdot{\rm H}(t)\cdot\mathbf{n}_2\right), \tag{12} \]

is the response of the interferometer to a gravitational wave in the long-wavelength approximation. In this approximation the response of a laser interferometer is usually derived from the equation of geodesic deviation (then the response is defined as the difference between the relative wave-induced changes of the proper lengths of the two arms, i.e., h(t):= ΔL(t)/L). There are important cases where the long-wavelength approximation is not valid. These include the space-borne LISA detector for gravitational-wave frequencies larger than a few mHz and satellite Doppler tracking measurements.
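
As a small numerical illustration of the long-wavelength response, the sketch below evaluates the quadratic forms that enter it. It assumes the standard TT form of the matrix H for a wave travelling in the +z direction and the response \(h = \frac{1}{2}(\mathbf{n}_3^{\rm T}\cdot{\rm H}\cdot\mathbf{n}_3 - \mathbf{n}_2^{\rm T}\cdot{\rm H}\cdot\mathbf{n}_2)\); the function names and the numerical values of the polarizations are hypothetical.

```python
import math


def tt_perturbation(h_plus, h_cross):
    """Matrix H of the spatial metric perturbation for a wave travelling along +z."""
    return [
        [h_plus, h_cross, 0.0],
        [h_cross, -h_plus, 0.0],
        [0.0, 0.0, 0.0],
    ]


def quadratic_form(n, H):
    """Evaluate n^T . H . n for a 3-vector n and a 3x3 matrix H."""
    return sum(n[i] * H[i][j] * n[j] for i in range(3) for j in range(3))


def interferometer_response(n2, n3, H):
    """Assumed long-wavelength response h = (n3^T H n3 - n2^T H n2) / 2."""
    return 0.5 * (quadratic_form(n3, H) - quadratic_form(n2, H))


def bar_response(n, H):
    """Assumed bar response h_B = (n^T H n) / 2, n along the bar's symmetry axis."""
    return 0.5 * quadratic_form(n, H)


# Illustrative instantaneous polarization amplitudes (arbitrary units).
H = tt_perturbation(h_plus=0.3, h_cross=0.1)

# Arms along x and y: only the plus polarization contributes.
h_aligned = interferometer_response(n2=(0.0, 1.0, 0.0), n3=(1.0, 0.0, 0.0), H=H)

# Arms rotated by 45 degrees about z: only the cross polarization contributes.
s = 1.0 / math.sqrt(2.0)
h_rotated = interferometer_response(n2=(-s, s, 0.0), n3=(s, s, 0.0), H=H)

# A bar along x responds to half of the plus polarization.
h_bar = bar_response((1.0, 0.0, 0.0), H)
```

For a moving detector the same functions would be called with time-dependent unit vectors, or equivalently with the matrix H transformed to the detector's proper reference frame.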

In the case of a bar detector the long-wavelength approximation is very accurate and the detector’s response is defined as hB(t):= ΔL(t)/L, where ΔL is the wave-induced change of the proper length L of the bar. The response hB is given by

\[ h_{\rm B}(t) = \frac{1}{2}\,\mathbf{n}^{\rm T}\cdot{\rm H}(t)\cdot\mathbf{n}, \tag{13} \]

where n is the unit vector along the symmetry axis of the bar.

In most cases of interest the response of the detector to a gravitational wave can be written as a linear combination of four constant amplitudes a(k),

\[ h(t) = \sum_{k=1}^{4} a^{(k)}\, h^{(k)}(t; \xi^\mu), \tag{14} \]

where the four functions h(k) depend on a set of parameters ξμ but are independent of the parameters a(k). The parameters a(k) are called extrinsic parameters whereas the parameters ξμ are called intrinsic. In the long-wavelength approximation the functions h(k) are given by

\[ \begin{aligned} h^{(1)}(t; \xi^\mu) &= u(t; \xi^\mu)\cos\Phi(t; \xi^\mu), & h^{(2)}(t; \xi^\mu) &= v(t; \xi^\mu)\cos\Phi(t; \xi^\mu), \\ h^{(3)}(t; \xi^\mu) &= u(t; \xi^\mu)\sin\Phi(t; \xi^\mu), & h^{(4)}(t; \xi^\mu) &= v(t; \xi^\mu)\sin\Phi(t; \xi^\mu), \end{aligned} \tag{15} \]

where Φ(t; ξμ) is the phase modulation of the signal and u(t;ξμ), v(t; ξμ) are slowly varying amplitude modulations.

Equation (14) is a model of the response of the space-based detector LISA to gravitational waves from a binary system [60], whereas Equation (15) is a model of the response of a ground-based detector to a continuous source of gravitational waves like a rotating neutron star [50]. The gravitational-wave signal from spinning neutron stars may consist of several components of the form (14). For short observation times over which the amplitude modulation functions are nearly constant, the response can be approximated by

\[ h(t) = A_0\, g(t; \xi^\mu)\cos\left(\Phi(t; \xi^\mu) - \Phi_0\right), \tag{16} \]

where A0 and Φ0 are constant amplitude and initial phase, respectively, and g(t; ξμ) is a slowly varying function of time. Equation (16) is a good model for a response of a detector to the gravitational wave from a coalescing binary system [92, 22]. We would like to stress that not all deterministic gravitational-wave signals may be cast into the general form (14).

3 Statistical Theory of Signal Detection

The gravitational-wave signal will be buried in the noise of the detector and the data from the detector will be a random process. Consequently the problem of extracting the signal from the noise is a statistical one. The basic idea behind the signal detection is that the presence of the signal changes the statistical characteristics of the data x, in particular its probability distribution. When the signal is absent the data have probability density function (pdf) p0(x), and when the signal is present the pdf is p1(x).

A full exposition of the statistical theory of signal detection that is outlined here can be found in the monographs [102, 56, 98, 96, 66, 44, 77]. A general introduction to stochastic processes is given in [100]. Advanced treatment of the subject can be found in [64, 101].

The problem of detecting the signal in noise can be posed as a statistical hypothesis testing problem. The null hypothesis H0 is that the signal is absent from the data and the alternative hypothesis H1 is that the signal is present. A hypothesis test (or decision rule) δ is a partition of the observation set into two sets, \({\mathcal R}\) and its complement \({\mathcal R}^\prime\). If the data are in \({\mathcal R}\) we accept the null hypothesis, otherwise we reject it. There are two kinds of errors that we can make. A type I error is choosing hypothesis H1 when H0 is true and a type II error is choosing H0 when H1 is true. In signal detection theory the probability of a type I error is called the false alarm probability, whereas the probability of a type II error is called the false dismissal probability. The probability of detection of the signal is 1 − (false dismissal probability). In hypothesis testing the probability of a type I error is called the significance of the test, whereas 1 − (probability of type II error) is called the power of the test.

The problem is to find a test that is in some way optimal. There are several approaches to find such a test. The subject is covered in detail in many books on statistics, for example see references [54, 41, 62].

3.1 Bayesian approach

In the Bayesian approach we assign costs to our decisions; in particular we introduce positive numbers Cij, i, j = 0, 1, where Cij is the cost incurred by choosing hypothesis Hi when hypothesis Hj is true. We define the conditional risk R of a decision rule δ for each hypothesis as

\[ R_j(\delta) := C_{1j}\, P_j({\mathcal R}^\prime) + C_{0j}\, P_j({\mathcal R}), \qquad j = 0, 1, \tag{17} \]

where Pj is the probability distribution of the data when hypothesis Hj is true. Next we assign probabilities π0 and π1 = 1 − π0 to the occurrences of hypothesis H0 and H1, respectively. These probabilities are called a priori probabilities or priors. We define the Bayes risk as the overall average cost incurred by the decision rule δ:

\[ r(\delta) := \pi_0 R_0(\delta) + \pi_1 R_1(\delta). \tag{18} \]

Finally we define the Bayes rule as the rule that minimizes the Bayes risk r(δ).

3.2 Minimax approach

Very often in practice we do not have control over or access to the mechanism generating the state of nature and we are not able to assign priors to the various hypotheses. In such a case one criterion is to seek a decision rule that minimizes, over all δ, the maximum of the conditional risks, R0(δ) and R1(δ). A decision rule that fulfills this criterion is called a minimax rule.

3.3 Neyman-Pearson approach

In many problems of practical interest the imposition of a specific cost structure on the decisions made is not possible or desirable. The Neyman-Pearson approach involves a trade-off between the two types of errors that one can make in choosing a particular hypothesis. The Neyman-Pearson design criterion is to maximize the power of the test (probability of detection) subject to a chosen significance of the test (false alarm probability).

3.4 Likelihood ratio test

It is remarkable that all three very different approaches — Bayesian, minimax, and Neyman-Pearson — lead to the same test called the likelihood ratio test [34]. The likelihood ratio Λ is the ratio of the pdf when the signal is present to the pdf when it is absent:

\[ \Lambda(x) := \frac{p_1(x)}{p_0(x)}. \tag{19} \]

We accept the hypothesis H1 if Λ > k, where k is the threshold that is calculated from the costs Cij, priors πi, or the significance of the test depending on what approach is being used.
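
To make the role of the threshold k concrete, here is a minimal sketch of a likelihood ratio test in the Neyman-Pearson setting. It assumes a toy problem that is not taken from the text: a single datum x that is N(0, 1) when the signal is absent and N(mu, 1), with mu > 0, when it is present. All function names are hypothetical.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def likelihood_ratio(x, mu):
    """Lambda(x) = p1(x) / p0(x) for p0 = N(0, 1) and p1 = N(mu, 1)."""
    return math.exp(mu * x - 0.5 * mu * mu)


def neyman_pearson_threshold(false_alarm, mu):
    """Threshold k on Lambda giving the requested false alarm probability (mu > 0)."""
    # Lambda is increasing in x, so Lambda > k is equivalent to x > x0.
    x0 = STANDARD_NORMAL.inv_cdf(1.0 - false_alarm)
    return likelihood_ratio(x0, mu)


def detection_probability(false_alarm, mu):
    """Power of the test: probability that x exceeds x0 when the signal is present."""
    x0 = STANDARD_NORMAL.inv_cdf(1.0 - false_alarm)
    return 1.0 - NormalDist(mu, 1.0).cdf(x0)


def accept_h1(x, mu, k):
    """Decision rule: accept H1 when the likelihood ratio exceeds the threshold."""
    return likelihood_ratio(x, mu) > k


k = neyman_pearson_threshold(false_alarm=0.01, mu=3.0)
power = detection_probability(false_alarm=0.01, mu=3.0)
```

In the Bayesian and minimax approaches the same decision rule applies; only the way k is computed changes.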

3.4.1 Gaussian case — The matched filter

Let h be the gravitational-wave signal and let n be the detector noise. For convenience we assume that the signal h is a continuous function of time t and that the noise n is a continuous random process. Results for the discrete time data that we have in practice can then be obtained by a suitable sampling of the continuous-in-time expressions. Assuming that the noise is additive the data x can be written as

\[ x(t) = n(t) + h(t). \tag{20} \]

In addition, if the noise is a zero-mean, stationary, and Gaussian random process, the log likelihood function is given by

\[ \log\Lambda = (x|h) - \frac{1}{2}(h|h), \tag{21} \]

where the scalar product (· | ·) is defined by

\[ (x|y) := 4\,\Re\int_0^\infty \frac{\tilde x(f)\,\tilde y^*(f)}{\tilde S(f)}\,{\rm d}f. \tag{22} \]

In Equation (22) ℜ denotes the real part of a complex expression, the tilde denotes the Fourier transform, the asterisk is complex conjugation, and \({\tilde S}\) is the one-sided spectral density of the noise in the detector, which is defined through equation

\[ E\left[\tilde n(f)\,\tilde n^*(f^\prime)\right] = \frac{1}{2}\,\delta(f - f^\prime)\,\tilde S(f), \tag{23} \]

where E denotes the expectation value.

From the expression (21) we see immediately that the likelihood ratio test consists of correlating the data x with the signal h that is present in the noise and comparing the correlation to a threshold. Such a correlation is called the matched filter. The matched filter is a linear operation on the data.

An important quantity is the optimal signal-to-noise ratio ρ defined by

\[ \rho := \sqrt{(h|h)}. \tag{24} \]

We see in the following that ρ determines the probability of detection of the signal. The higher the signal-to-noise ratio the higher the probability of detection.

An interesting property of the matched filter is that it maximizes the signal-to-noise ratio over all linear filters [34]. This property is independent of the probability distribution of the noise.
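
The matched filter and the optimal signal-to-noise ratio can be sketched numerically as follows. The example assumes white noise with a constant one-sided spectral density s0, for which the scalar product reduces to \((x|y) = (2/S_0)\int x(t)\,y(t)\,{\rm d}t\), and it evaluates the integral as a plain sum over samples. The sinusoidal signal, the sampling parameters, and all function names are illustrative assumptions.

```python
import math
import random


def scalar_product(x, y, dt, s0):
    """(x|y) for white noise with one-sided spectral density s0 (time-domain form)."""
    return 2.0 / s0 * dt * sum(a * b for a, b in zip(x, y))


def optimal_snr(h, dt, s0):
    """rho = sqrt((h|h))."""
    return math.sqrt(scalar_product(h, h, dt, s0))


def log_likelihood_ratio(x, h, dt, s0):
    """log Lambda = (x|h) - (h|h)/2; the first term is the matched filter."""
    return scalar_product(x, h, dt, s0) - 0.5 * scalar_product(h, h, dt, s0)


def white_noise(n_samples, dt, s0, rng):
    """Sampled white Gaussian noise; the per-sample variance is s0 / (2 dt)."""
    sigma = math.sqrt(s0 / (2.0 * dt))
    return [rng.gauss(0.0, sigma) for _ in range(n_samples)]


dt, s0, n_samples = 0.01, 1.0, 10_000          # 100 s of data sampled at 100 Hz
h = [0.5 * math.sin(2.0 * math.pi * 1.0 * i * dt) for i in range(n_samples)]
rho = optimal_snr(h, dt, s0)                   # amplitude^2 * T / s0 = 25, so rho = 5

rng = random.Random(0)
noise = white_noise(n_samples, dt, s0, rng)
data = [n + s for n, s in zip(noise, h)]

stat_with_signal = log_likelihood_ratio(data, h, dt, s0)
stat_noise_only = log_likelihood_ratio(noise, h, dt, s0)
```

Because the filter is linear in the data, adding the signal shifts the statistic by exactly (h|h) = ρ², while its standard deviation under noise alone is ρ; this is why ρ controls the detectability. For coloured noise the sum would be replaced by the frequency-domain integral weighted by the spectral density.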

4 Parameter Estimation

Very often we know the waveform of the signal that we are searching for in the data in terms of a finite number of unknown parameters. We would like to find optimal procedures for estimating these parameters. An estimator of a parameter θ is a function \(\hat \theta (x)\) that assigns to each data set the “best” guess of the true value of θ. Note that because \(\hat \theta (x)\) depends on the random data it is a random variable. Ideally we would like our estimator to be (i) unbiased, i.e., its expectation value to be equal to the true value of the parameter, and (ii) of minimum variance. Such estimators are rare and in general difficult to find. As in signal detection, there are several approaches to the parameter estimation problem. The subject is treated in detail in reference [63]. See also [103] for a concise account.

4.1 Bayesian estimation

We assign a cost function C(θ′, θ) of estimating the true value of θ as θ′. We then associate with an estimator \({\hat \theta}\) a conditional risk or cost averaged over all realizations of data x for each value of the parameter θ:

\[ R_\theta(\hat\theta) := \int_X C\left(\hat\theta(x), \theta\right) p(x, \theta)\,{\rm d}x, \tag{25} \]

where X is the set of observations and p(x, θ) is the joint probability distribution of data x and parameter θ. We further assume that there is a certain a priori probability distribution π(θ) of the parameter θ. We then define the Bayes estimator as the estimator that minimizes the average risk defined as

\[ r(\hat\theta) := E\left[R_\theta(\hat\theta)\right] = \int_X \int_\Theta C\left(\hat\theta(x), \theta\right) p(x, \theta)\,\pi(\theta)\,{\rm d}\theta\,{\rm d}x, \tag{26} \]

where E is the expectation value with respect to the a priori distribution π, and Θ is the set of possible values of the parameter θ. It is not difficult to show that for the commonly used quadratic cost function

\[ C(\theta^\prime, \theta) := (\theta^\prime - \theta)^2 \tag{27} \]

the Bayesian estimator is the conditional mean of the parameter θ given data x, i.e.,

\[ \hat\theta(x) = E[\theta|x] = \int_\Theta \theta\, p(\theta|x)\,{\rm d}\theta, \tag{28} \]

where p(θ|x) is the conditional probability density of parameter θ given the data x.
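
The statement that the conditional mean minimizes the average quadratic cost can be checked numerically. The sketch below assumes a hypothetical skewed posterior density tabulated on a grid of parameter values; neither the density nor the function names come from the text.

```python
import math


def normalize(weights):
    """Turn non-negative weights on a grid into probabilities that sum to one."""
    total = sum(weights)
    return [w / total for w in weights]


def posterior_mean(thetas, posterior):
    """Conditional mean of theta given the data, evaluated on the grid."""
    return sum(t * p for t, p in zip(thetas, posterior))


def expected_quadratic_cost(estimate, thetas, posterior):
    """Posterior average of the cost (estimate - theta)^2."""
    return sum(p * (estimate - t) ** 2 for t, p in zip(thetas, posterior))


# Hypothetical posterior proportional to theta * exp(-2 theta) on [0, 5].
thetas = [0.01 * i for i in range(501)]
posterior = normalize([t * math.exp(-2.0 * t) for t in thetas])

mean = posterior_mean(thetas, posterior)

# Brute-force search for the grid point with the smallest expected cost.
best_on_grid = min(thetas, key=lambda e: expected_quadratic_cost(e, thetas, posterior))
```

The brute-force minimizer lands on the grid point closest to the conditional mean. For other cost functions the minimizer is in general different; an absolute-value cost, for example, leads to the posterior median.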

4.2 Maximum a posteriori probability estimation

Suppose that in a given estimation problem we are not able to assign a particular cost function C(θ′, θ). Then a natural choice is a uniform cost function equal to 0 over a certain interval Iθ of the parameter θ. From Bayes theorem [20] we have

\[ p(\theta|x) = \frac{p(x, \theta)\,\pi(\theta)}{p(x)}, \tag{29} \]

where p(x) is the probability distribution of data x. Then from Equation (26) one can deduce that for each data x the Bayes estimate is any value of θ that maximizes the conditional probability p(θ|x). The density p(θ|x) is also called the a posteriori probability density of parameter θ and the estimator that maximizes p(θ|x) is called the maximum a posteriori (MAP) estimator. It is denoted by \({{\hat \theta}_{{\rm{MAP}}}}\). We find that the MAP estimators are solutions of the following equation

\[ \frac{\partial \log p(x, \theta)}{\partial\theta} + \frac{\partial \log \pi(\theta)}{\partial\theta} = 0, \tag{30} \]

which is called the MAP equation.

4.3 Maximum likelihood estimation

Often we do not know the a priori probability density of a given parameter and we simply assign to it a uniform probability. In such a case maximization of the a posteriori probability is equivalent to maximization of the probability density p(x, θ) treated as a function of θ. We call the function l(θ, x):= p(x, θ) the likelihood function and the value of the parameter θ that maximizes l(θ, x) the maximum likelihood (ML) estimator. Instead of the function l we can use the function Λ(θ, x) = l(θ, x)/p(x) (assuming that p(x) > 0). Λ is then equivalent to the likelihood ratio [see Equation (19)] when the parameters of the signal are known. Then the ML estimators are obtained by solving the equation

\[ \frac{\partial \Lambda(\theta, x)}{\partial\theta} = 0, \tag{31} \]

which is called the ML equation.

4.3.1 Gaussian case

For the general gravitational-wave signal defined in Equation (14) the log likelihood function is given by

\[ \log\Lambda = {\rm a}^{\rm T}\cdot{\rm N} - \frac{1}{2}\,{\rm a}^{\rm T}\cdot{\rm M}\cdot{\rm a}, \tag{32} \]

where the components of the column vector N and the matrix M are given by

\[ N^{(k)} := \left(x\,|\,h^{(k)}\right), \qquad M^{(k)(l)} := \left(h^{(k)}\,|\,h^{(l)}\right), \tag{33} \]

with x(t) = n(t) + h(t), and where n(t) is a zero-mean Gaussian random process. The ML equations for the extrinsic parameters a can be solved explicitly and their ML estimators â are given by

\[ \hat{\rm a} = {\rm M}^{-1}\cdot{\rm N}. \tag{34} \]

Substituting â into log Λ we obtain a function

\[ {\mathcal F} := \frac{1}{2}\,{\rm N}^{\rm T}\cdot{\rm M}^{-1}\cdot{\rm N}, \tag{35} \]

that we call the \({\mathcal F}\)-statistic. The \({\mathcal F}\)-statistic depends (nonlinearly) only on the intrinsic parameters \({\xi ^\mu}\).

Thus the procedure to detect the signal and estimate its parameters consists of two parts. The first part is to find the (local) maxima of the \({\mathcal F}\)-statistic in the intrinsic parameter space. The ML estimators of the intrinsic parameters are those for which the \({\mathcal F}\)-statistic attains a maximum. The second part is to calculate the estimators of the extrinsic parameters from the analytic formula (34), where the matrix M and the correlations N are calculated for the intrinsic parameters equal to their ML estimators obtained from the first part of the analysis. We call this procedure the maximum likelihood detection. See Section 4.8 for a discussion of the algorithms to find the (local) maxima of the \({\mathcal F}\)-statistic.
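
The two-part procedure described above can be sketched on a toy problem. The example assumes white noise (so that the scalar product is a time-domain sum), noise-free data, a single intrinsic parameter (the frequency), and hypothetical amplitude modulations u = 1 and v = cos(2πt/T). It uses â = M⁻¹·N and F = ½ Nᵀ·M⁻¹·N; every name in the code is an illustrative assumption.

```python
import math


def scalar_product(x, y, dt, s0):
    """(x|y) for white noise with one-sided spectral density s0 (an assumption)."""
    return 2.0 / s0 * dt * sum(a * b for a, b in zip(x, y))


def solve(matrix, rhs):
    """Solve matrix . a = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    solution = [0.0] * n
    for row in reversed(range(n)):
        tail = sum(aug[row][k] * solution[k] for k in range(row + 1, n))
        solution[row] = (aug[row][n] - tail) / aug[row][row]
    return solution


def templates(freq, times, duration):
    """Hypothetical h^(k): u cos(Phi), v cos(Phi), u sin(Phi), v sin(Phi)."""
    v = [math.cos(2.0 * math.pi * t / duration) for t in times]
    cos_phi = [math.cos(2.0 * math.pi * freq * t) for t in times]
    sin_phi = [math.sin(2.0 * math.pi * freq * t) for t in times]
    return [cos_phi, [a * c for a, c in zip(v, cos_phi)],
            sin_phi, [a * s for a, s in zip(v, sin_phi)]]


def f_statistic(data, basis, dt, s0):
    """Return (F, a_hat) with a_hat = M^-1 . N and F = N^T . M^-1 . N / 2."""
    n_vec = [scalar_product(data, h, dt, s0) for h in basis]
    m_mat = [[scalar_product(hk, hl, dt, s0) for hl in basis] for hk in basis]
    a_hat = solve(m_mat, n_vec)
    return 0.5 * sum(n * a for n, a in zip(n_vec, a_hat)), a_hat


dt, s0, duration = 0.005, 1.0, 10.0
times = [i * dt for i in range(int(round(duration / dt)))]
true_amplitudes = [0.4, -0.2, 0.1, 0.3]
true_basis = templates(5.0, times, duration)
signal = [sum(a * h[i] for a, h in zip(true_amplitudes, true_basis))
          for i in range(len(times))]

# First part: maximize F over a (tiny) grid of the intrinsic parameter.
results = {f: f_statistic(signal, templates(f, times, duration), dt, s0)
           for f in (4.9, 5.0, 5.1)}
best_freq = max(results, key=lambda f: results[f][0])

# Second part: read off the extrinsic-parameter estimates at the maximum.
best_f, a_hat = results[best_freq]
```

With noise-free data the maximum of F equals half the squared signal-to-noise ratio and the amplitude estimates reproduce the injected values; with noise added they would scatter around them. A realistic search replaces the three-point grid by a template bank over the full intrinsic parameter space.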

4.4 Fisher information

It is important to know how good our estimators are. We would like our estimator to have as small a variance as possible. There is a useful lower bound on the variances of the parameter estimators called the Cramér-Rao bound. Let us first introduce the Fisher information matrix Γ with the components defined by

\[ \Gamma^{ij} := E\left[\frac{\partial \log\Lambda}{\partial\theta_i}\frac{\partial \log\Lambda}{\partial\theta_j}\right] = -E\left[\frac{\partial^2 \log\Lambda}{\partial\theta_i\,\partial\theta_j}\right]. \tag{36} \]

The Cramér-Rao bound states that for unbiased estimators the covariance matrix of the estimators satisfies \(C \geq \Gamma^{-1}\). (The inequality A ≥ B for matrices means that the matrix A − B is nonnegative definite.)

A very important property of the ML estimators is that asymptotically (i.e., for a signal-to-noise ratio tending to infinity) they are (i) unbiased, and (ii) they have a Gaussian distribution with covariance matrix equal to the inverse of the Fisher information matrix.
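
The bound is easy to evaluate numerically in a simple setting. The sketch below assumes Gaussian white noise, for which the Fisher matrix takes the form \(\Gamma^{ij} = (\partial h/\partial\theta_i\,|\,\partial h/\partial\theta_j)\), and a hypothetical monochromatic signal A cos(2πft − φ); derivatives are taken by central differences. The signal model, step sizes, and function names are illustrative assumptions.

```python
import math


def scalar_product(x, y, dt, s0):
    """(x|y) for white noise with one-sided spectral density s0 (an assumption)."""
    return 2.0 / s0 * dt * sum(a * b for a, b in zip(x, y))


def signal(params, times):
    """Hypothetical signal A cos(2 pi f t - phi); params = [A, phi, f]."""
    amplitude, phase, freq = params
    return [amplitude * math.cos(2.0 * math.pi * freq * t - phase) for t in times]


def fisher_matrix(params, times, dt, s0, steps):
    """Gamma_ij = (dh/dtheta_i | dh/dtheta_j), derivatives by central differences."""
    derivs = []
    for i, step in enumerate(steps):
        up, down = list(params), list(params)
        up[i] += step
        down[i] -= step
        h_up, h_down = signal(up, times), signal(down, times)
        derivs.append([(a - b) / (2.0 * step) for a, b in zip(h_up, h_down)])
    return [[scalar_product(di, dj, dt, s0) for dj in derivs] for di in derivs]


def invert(matrix):
    """Gauss-Jordan inverse of a small, well-conditioned matrix."""
    n = len(matrix)
    aug = [list(row) + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for row in range(n):
            if row != col:
                factor = aug[row][col]
                aug[row] = [v - factor * p for v, p in zip(aug[row], aug[col])]
    return [row[n:] for row in aug]


dt, s0 = 0.005, 1.0
times = [i * dt for i in range(2000)]          # 10 s of data
params = [0.5, 0.3, 5.0]                       # amplitude, phase (rad), frequency (Hz)
gamma = fisher_matrix(params, times, dt, s0, steps=[1e-6, 1e-6, 1e-6])

# Cramer-Rao: the diagonal of the inverse bounds the variances from below.
covariance_bound = invert(gamma)
sigma_lower = [math.sqrt(covariance_bound[i][i]) for i in range(3)]

# Naive bounds that ignore correlations between the parameters.
sigma_naive = [1.0 / math.sqrt(gamma[i][i]) for i in range(3)]
```

The frequency and phase are strongly correlated here, so the bound on the frequency error obtained from the inverse matrix is about twice the naive value 1/√Γ for that parameter. This is why the bounds must be read off the inverse of the Fisher matrix rather than from its diagonal.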

4.4.1 Gaussian case

In the case of Gaussian noise the components of the Fisher matrix are given by

\[ \Gamma^{ij} = \left(\frac{\partial h}{\partial\theta_i}\,\Big|\,\frac{\partial h}{\partial\theta_j}\right). \tag{37} \]

For the case of the general gravitational-wave signal defined in Equation (14) the set of the signal parameters θ splits naturally into extrinsic and intrinsic parameters: θ = (a(k), ξμ). Then the Fisher matrix can be written in terms of block matrices for these two sets of parameters as

\[ \Gamma = \begin{pmatrix} {\rm M} & {\rm F}\cdot{\rm a} \\ {\rm a}^{\rm T}\cdot{\rm F}^{\rm T} & {\rm a}^{\rm T}\cdot{\rm S}\cdot{\rm a} \end{pmatrix}, \tag{38} \]

where the top left block corresponds to the extrinsic parameters, the bottom right block corresponds to the intrinsic parameters, the superscript T denotes here transposition over the extrinsic parameter indices, and the dot stands for the matrix multiplication with respect to these parameters. Matrix M is given by Equation (33), and the matrices F and S are defined as follows:

\[ F^{(k)(l)}_{\mu} := \left(h^{(k)}\,\Big|\,\frac{\partial h^{(l)}}{\partial\xi^\mu}\right), \qquad S^{(k)(l)}_{\mu\nu} := \left(\frac{\partial h^{(k)}}{\partial\xi^\mu}\,\Big|\,\frac{\partial h^{(l)}}{\partial\xi^\nu}\right). \tag{39} \]

The covariance matrix C, which approximates the expected covariances of the ML parameter estimators, is defined as \(\Gamma^{-1}\). Using the standard formula for the inverse of a block matrix [67] we have

\[ C = \begin{pmatrix} {\rm M}^{-1} + {\rm M}^{-1}\cdot({\rm F}\cdot{\rm a})\cdot\bar\Gamma^{-1}\cdot({\rm F}\cdot{\rm a})^{\rm T}\cdot{\rm M}^{-1} & -{\rm M}^{-1}\cdot({\rm F}\cdot{\rm a})\cdot\bar\Gamma^{-1} \\ -\bar\Gamma^{-1}\cdot({\rm F}\cdot{\rm a})^{\rm T}\cdot{\rm M}^{-1} & \bar\Gamma^{-1} \end{pmatrix}, \tag{40} \]

where

\[ \bar\Gamma^{\mu\nu} := {\rm a}^{\rm T}\cdot\left({\rm S}_{\mu\nu} - {\rm F}_\mu^{\rm T}\cdot{\rm M}^{-1}\cdot{\rm F}_\nu\right)\cdot{\rm a}. \tag{41} \]

We call \({{\bar \Gamma}^{\mu \nu}}\) (the Schur complement of M) the projected Fisher matrix (onto the space of intrinsic parameters). Because the projected Fisher matrix is the inverse of the intrinsic-parameter submatrix of the covariance matrix C, it expresses the information available about the intrinsic parameters that takes into account the correlations with the extrinsic parameters. Note that \({{\bar \Gamma}^{\mu \nu}}\) is still a function of the putative extrinsic parameters. We next define the normalized projected Fisher matrix

\[ \bar\Gamma_n^{\mu\nu} := \frac{\bar\Gamma^{\mu\nu}}{\rho^2}, \tag{42} \]

where \(\rho = \sqrt {{{\rm{a}}^{\rm{T}}} \cdot {\rm{M}} \cdot {\rm{a}}}\) is the signal-to-noise ratio. From the Rayleigh principle [67] it follows that the minimum value of the component \(\bar \Gamma _n^{\mu \nu}\) is given by the smallest eigenvalue (taken with respect to the extrinsic parameters) of the matrix \((({\rm S}_{\mu\nu} - {\rm F}_\mu^{\rm T} \cdot {\rm M}^{-1} \cdot {\rm F}_\nu) \cdot {\rm M}^{-1})\). Similarly, the maximum value of the component \(\bar \Gamma _n^{\mu \nu}\) is given by the largest eigenvalue of that matrix. Because the trace of a matrix is equal to the sum of its eigenvalues, the matrix

\[ \tilde\Gamma^{\mu\nu} := \frac{1}{4}\,{\rm tr}\left[\left({\rm S}_{\mu\nu} - {\rm F}_\mu^{\rm T}\cdot{\rm M}^{-1}\cdot{\rm F}_\nu\right)\cdot{\rm M}^{-1}\right], \tag{43} \]

where the trace is taken over the extrinsic-parameter indices, expresses the information available about the intrinsic parameters, averaged over the possible values of the extrinsic parameters. Note that the factor 1/4 is specific to the case of four extrinsic parameters. We call \({{\tilde \Gamma}^{\mu \nu}}\) the reduced Fisher matrix. This matrix is a function of the intrinsic parameters alone. We see that the reduced Fisher matrix plays a key role in the signal processing theory that we review here. It is used in the calculation of the threshold for statistically significant detection and in the formula for the number of templates needed to do a given search.
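
The statement above that the projected Fisher matrix is the inverse of the intrinsic-parameter block of the covariance matrix can be verified on a small example. The sketch assumes a hypothetical 3 × 3 Fisher matrix with two extrinsic parameters and one intrinsic parameter; the numbers are arbitrary and only the block structure matters.

```python
def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve2(m, rhs):
    """Solve a 2x2 linear system by Cramer's rule."""
    d = det2(m)
    return [(rhs[0] * m[1][1] - m[0][1] * rhs[1]) / d,
            (m[0][0] * rhs[1] - rhs[0] * m[1][0]) / d]


# Hypothetical blocks of the Fisher matrix.
M = [[4.0, 1.0], [1.0, 3.0]]   # extrinsic-extrinsic block
b = [0.5, -1.0]                # extrinsic-intrinsic block, in the role of F . a
s = 2.0                        # intrinsic-intrinsic block, in the role of a^T . S . a

# Projected Fisher "matrix" (a single number here): Schur complement of M.
projected = s - sum(bi * xi for bi, xi in zip(b, solve2(M, b)))

# Intrinsic-intrinsic element of C = Gamma^-1, from the cofactor formula.
gamma = [[4.0, 1.0, 0.5],
         [1.0, 3.0, -1.0],
         [0.5, -1.0, 2.0]]
c_intrinsic = det2(M) / det3(gamma)

# Variance bound one would get by ignoring the extrinsic parameters altogether.
c_naive = 1.0 / s
```

The product of `projected` and `c_intrinsic` equals one, and `c_intrinsic` exceeds `c_naive`: correlations with the extrinsic parameters reduce the information available about the intrinsic one.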

For the case of the signal

\[ h(t; A_0, \Phi_0, \xi^\mu) = A_0\, g(t; \xi^\mu)\cos\left(\Phi(t; \xi^\mu) - \Phi_0\right), \tag{44} \]

the normalized projected Fisher matrix \({{\bar \Gamma}_n}\) is independent of the extrinsic parameters A0 and Φ0, and it is equal to the reduced matrix \({\tilde \Gamma}\) [74]. The components of \({\tilde \Gamma}\) are given by

\[ \tilde\Gamma^{ij} = \Gamma_0^{ij} - \frac{\Gamma_0^{\Phi_0 i}\,\Gamma_0^{\Phi_0 j}}{\Gamma_0^{\Phi_0\Phi_0}}, \tag{45} \]

where \(\Gamma _0^{ij}\) is the Fisher matrix for the signal g(t; ξμ) cos(Φ(t; ξμ) − Φ0).

The Fisher matrix has been extensively used to assess the accuracy of estimation of astrophysically interesting parameters of gravitational-wave signals. The first calculations of the Fisher matrix concerned gravitational-wave signals from inspiralling binaries in the quadrupole approximation [40, 58] and from quasi-normal modes of a Kerr black hole [38]. Cutler and Flanagan [32] initiated the study of the implications of higher PN order phasing formulas as applied to the parameter estimation of inspiralling binaries. They used the 1.5PN phasing formula to investigate the problem of parameter estimation, both for spinning and non-spinning binaries, and examined the effect of the spin-orbit coupling on the estimation of parameters. The effect of the 2PN phasing formula was analyzed independently by Poisson and Will [76] and Królak, Kokkotas and Schäfer [57]. In both of these works the focus was to understand the new spin-spin coupling term appearing at the 2PN order when the spins were aligned perpendicular to the orbital plane. Compared to [57], [76] also included a priori information about the magnitude of the spin parameters, which then leads to a reduction in the rms errors in the estimation of mass parameters. The case of the 3.5PN phasing formula was studied in detail by Arun et al. [12]. Inclusion of 3.5PN effects leads to an improved estimate of the binary parameters. The improvements are relatively smaller for lighter binaries.

Various authors have investigated the accuracy with which the LISA detector can determine binary parameters including spin effects. Cutler [30] determined LISA’s angular resolution and evaluated the errors of the binary masses and distance considering spins aligned or anti-aligned with the orbital angular momentum. Hughes [46] investigated the accuracy with which the redshift can be estimated (if the cosmological parameters are derived independently), and considered the black-hole ring-down phase in addition to the inspiralling signal. Seto [89] included the effect of finite armlength (going beyond the long-wavelength approximation) and found that the accuracy of the distance determination and angular resolution improve. This happens because the response of the instrument when the armlength is finite depends strongly on the location of the source, which is tightly correlated with the distance and the direction of the orbital angular momentum. Vecchio [97] provided the first estimate of parameters for precessing binaries when only one of the two supermassive black holes carries spin. He showed that modulational effects decorrelate the binary parameters to some extent, resulting in a better estimation of the parameters compared to the case when spins are aligned or anti-aligned with the orbital angular momentum. Hughes and Menou [47] studied a class of binaries, which they called “golden binaries,” for which the inspiral and ring-down phases could be observed with good enough precision to carry out valuable tests of strong-field gravity. Berti, Buonanno and Will [21] have shown that inclusion of non-precessing spin-orbit and spin-spin terms in the gravitational-wave phasing generally reduces the accuracy with which the parameters of the binary can be estimated. This is not surprising, since the parameters are highly correlated, and adding parameters effectively dilutes the available information.

4.5 False alarm and detection probabilities — Gaussian case

4.5.1 Statistical properties of the \({\mathcal F}\)-statistic

We first present the false alarm and detection pdfs when the intrinsic parameters of the signal are known. In this case the statistic \({\mathcal F}\) is a quadratic form of the random variables that are correlations of the data. As we assume that the noise in the data is Gaussian and the correlations are linear functions of the data, \({\mathcal F}\) is a quadratic form of Gaussian random variables. Consequently the \({\mathcal F}\)-statistic has a distribution related to the \(\chi^2\) distribution. One can show (see Section III B in [49]) that for the signal given by Equation (14), \(2{\mathcal F}\) has a \(\chi^2\) distribution with 4 degrees of freedom when the signal is absent and a noncentral \(\chi^2\) distribution with 4 degrees of freedom and non-centrality parameter equal to the square of the optimal signal-to-noise ratio, \(\rho^2 = (h|h)\), when the signal is present.

As a result the pdfs p0 and p1 of \({\mathcal F}\) when the intrinsic parameters are known and when respectively the signal is absent and present are given by

where n is the number of degrees of freedom of the \(\chi^2\) distributions and \(I_{n/2-1}\) is the modified Bessel function of the first kind and order \(n/2-1\). The false alarm probability \(P_{\rm F}\) is the probability that \({\mathcal F}\) exceeds a certain threshold \({{\mathcal F}_0}\) when there is no signal. In our case we have

The probability of detection PD is the probability that \({\mathcal F}\) exceeds the threshold \({{\mathcal F}_0}\) when the signal-to-noise ratio is equal to ρ:

The integral in the above formula can be expressed in terms of the generalized Marcum Q-function [94, 44], \(Q(\alpha, \beta) = P_{\rm D}(\alpha, \beta^2/2)\). We see that when the noise in the detector is Gaussian and the intrinsic parameters are known, the probability of detection of the signal depends on a single quantity: the optimal signal-to-noise ratio ρ.
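As a rough numerical illustration of the two probabilities above, the following sketch assumes only what is stated in the text: \(2{\mathcal F}\) is \(\chi^2\)-distributed with n degrees of freedom in pure noise and noncentral \(\chi^2\)-distributed with non-centrality \(\rho^2\) when the signal is present. For even n the central tail probability is a finite sum, and the noncentral one can be written as a Poisson-weighted sum of central tails. The function names are ours and purely illustrative, and the loop is only suitable for moderate ρ (below roughly 30) because of floating-point underflow.

```python
import math


def chi2_sf_even(x, dof):
    """P(X > x) for a central chi-square variable with an even number of dof."""
    if dof <= 0 or dof % 2:
        raise ValueError("dof must be a positive even integer")
    half = 0.5 * x
    term, total = 1.0, 0.0
    for k in range(dof // 2):
        total += term              # adds (x/2)^k / k!
        term *= half / (k + 1)
    return math.exp(-half) * total


def false_alarm_probability(f0, n=4):
    """P_F: probability that F exceeds f0 in pure Gaussian noise."""
    return chi2_sf_even(2.0 * f0, n)


def detection_probability(rho, f0, n=4, tol=1e-12, max_terms=2000):
    """P_D: probability that F exceeds f0 when the optimal SNR is rho.

    Assumes 2F is noncentral chi-square with noncentrality rho**2 and uses
    its representation as a Poisson mixture of central chi-squares.
    """
    half_lam = 0.5 * rho * rho
    weight = math.exp(-half_lam)   # Poisson weight for j = 0
    total, accumulated = 0.0, 0.0
    for j in range(max_terms):
        total += weight * chi2_sf_even(2.0 * f0, n + 2 * j)
        accumulated += weight
        if 1.0 - accumulated < tol:
            break                  # remaining Poisson weights are negligible
        weight *= half_lam / (j + 1)
    return total
```

For n = 4 the false alarm probability reduces to \((1 + {\mathcal F}_0)\exp(-{\mathcal F}_0)\), and setting ρ = 0 in `detection_probability` recovers it, which is a convenient consistency check.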

4.5.2 False alarm probability

Next we return to the case when the intrinsic parameters ξ are not known. Then the statistic \({\mathcal F}(\xi)\) given by Equation (35) is a certain generalized multiparameter random process called the random field (see Adler’s monograph [4] for a comprehensive discussion of random fields). If the vector ξ has one component the random field is simply a random process. For random fields we can define the autocovariance function \({\mathcal C}\) just in the same way as we define such a function for a random process:

where ξ and ξ′ are two values of the intrinsic parameter set, and E0 is the expectation value when the signal is absent. One can show that for the signal (14) the autocovariance function \({\mathcal C}\) is given by

where

We have \({\mathcal C}(\xi, \xi) = 1\).

One can estimate the false alarm probability in the following way [50]. The autocovariance function \({\mathcal C}\) tends to zero as the displacement Δξ = ξ′ − ξ increases (it is maximal for Δξ = 0). Thus we can divide the parameter space into elementary cells such that in each cell the autocovariance function \({\mathcal C}\) is appreciably different from zero. The realizations of the random field within a cell will be correlated (dependent), whereas realizations of the random field within each cell and outside the cell are almost uncorrelated (independent). Thus the number of cells covering the parameter space gives an estimate of the number of independent realizations of the random field. The correlation hypersurface is a closed surface defined by the requirement that at the boundary of the hypersurface the correlation \({\mathcal C}\) equals half of its maximum value. The elementary cell is defined by the equation

for ξ at the cell center and ξ′ on the cell boundary. To estimate the number of cells we perform the Taylor expansion of the autocovariance function up to the second-order terms:

As \({\mathcal C}\) attains its maximum value when ξ−ξ′ = 0, we have

Let us introduce the symmetric matrix

then the approximate equation for the elementary cell is given by

It is interesting to find a relation between the matrix G and the Fisher matrix. One can show (see [60], Appendix B) that the matrix G is precisely equal to the reduced Fisher matrix \({\tilde \Gamma}\) given by Equation (43).

Let K be the number of the intrinsic parameters. If the components of the matrix G are constant (independent of the values of the parameters of the signal) the above equation is an equation for a hyperellipse. The K-dimensional Euclidean volume Vcell of the elementary cell defined by Equation (57) equals

where Γ denotes the Gamma function. We estimate the number Nc of elementary cells by dividing the total Euclidean volume V of the K-dimensional parameter space by the volume Vcell of the elementary cell, i.e. we have

The components of the matrix G are constant for the signal \(h(t; A_0, \Phi_0, \xi^\mu) = A_0 \cos(\Phi(t; \xi^\mu) - \Phi_0)\) when the phase \(\Phi(t; \xi^\mu)\) is a linear function of the intrinsic parameters \(\xi^\mu\).
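The cell-counting argument for a constant matrix G can be sketched numerically. The code below assumes that the elementary cell is the hyperellipsoid \(\sum_{ij} G_{ij}\Delta\xi_i\Delta\xi_j = 1/2\) obtained from the second-order expansion with \({\mathcal C} = 1/2\) on the boundary, so that its Euclidean volume is that of a K-dimensional ball of squared radius 1/2 divided by \(\sqrt{\det G}\). The function names and the numbers in the usage note are hypothetical.

```python
import math


def elementary_cell_volume(det_g, k):
    """Euclidean volume of the hyperellipsoid sum_ij G_ij dxi_i dxi_j = 1/2."""
    ball = (math.pi / 2.0) ** (k / 2.0) / math.gamma(k / 2.0 + 1.0)
    return ball / math.sqrt(det_g)


def number_of_cells(total_volume, det_g, k):
    """N_c = V / V_cell for a matrix G with constant components."""
    return total_volume / elementary_cell_volume(det_g, k)
```

For example, `number_of_cells(1.0e-3, 1.0e12, 2)` estimates the number of cells for a two-parameter search over a Euclidean volume of 10⁻³ with det G = 10¹² (both values invented for illustration).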

To estimate the number of cells in the case when the components of the matrix G are not constant, i.e. when they depend on the values of the parameters, we write Equation (59) as

This procedure can be thought of as interpreting the matrix G as the metric on the parameter space. This interpretation appeared for the first time in the context of gravitational-wave data analysis in the work by Owen [74], where an analogous integral formula was proposed for the number of templates needed to perform a search for gravitational-wave signals from coalescing binaries.

The concept of number of cells was introduced in [50] and it is a generalization of the idea of an effective number of samples introduced in [36] for the case of a coalescing binary signal.

We approximate the probability distribution of \({\mathcal F}(\xi)\) in each cell by the probability \({p_0}({\mathcal F})\) when the parameters are known [in our case by probability given by Equation (46)]. The values of the statistic \({\mathcal F}\) in each cell can be considered as independent random variables. The probability that \({\mathcal F}\) does not exceed the threshold \({{\mathcal F}_0}\) in a given cell is \(1 - {P_{\rm{F}}}({{\mathcal F}_0})\), where \({P_{\rm{F}}}({{\mathcal F}_0})\) is given by Equation (48). Consequently the probability that \({\mathcal F}\) does not exceed the threshold \({{\mathcal F}_0}\) in all the Nc cells is \({[1 - {P_{\rm{F}}}({{\mathcal F}_0})]^{{N_{\rm{c}}}}}\). The probability \(P_F^T\) that \({\mathcal F}\) exceeds \({{\mathcal F}_0}\) in one or more cell is thus given by

This by definition is the false alarm probability when the phase parameters are unknown. The number of false alarms NF is given by

A different approach to the calculation of the number of false alarms using the Euler characteristic of level crossings of a random field is described in [49].

It was shown (see [29]) that for any finite \({{\mathcal F}_0}\) and Nc, Equation (61) provides an upper bound for the false alarm probability. Also in [29] a tighter upper bound for the false alarm probability was derived by modifying a formula obtained by Mohanty [68]. The formula amounts essentially to introducing a suitable coefficient multiplying the number of cells Nc.
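The relation between the single-cell and the total false alarm probability, and its inversion for the threshold, can be sketched as follows. We assume 4 degrees of freedom, for which the single-cell probability is \((1 + {\mathcal F}_0)\exp(-{\mathcal F}_0)\), and independent cells as in the argument above. The bisection bounds and function names are illustrative choices, not part of the cited works.

```python
import math


def single_cell_false_alarm(f0):
    """P_F for 2F ~ chi-square with 4 degrees of freedom."""
    return (1.0 + f0) * math.exp(-f0)


def total_false_alarm(f0, n_cells):
    """P_F^T = 1 - (1 - P_F)^Nc, evaluated stably when P_F is tiny."""
    p = single_cell_false_alarm(f0)
    if p >= 1.0:
        return 1.0
    return -math.expm1(n_cells * math.log1p(-p))


def expected_false_alarms(f0, n_cells):
    """N_F = Nc * P_F."""
    return n_cells * single_cell_false_alarm(f0)


def threshold_for(total_pf, n_cells, lo=0.0, hi=200.0, iterations=200):
    """Bisection for the threshold F0 that yields the requested P_F^T."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if total_false_alarm(mid, n_cells) > total_pf:
            lo = mid   # too many false alarms: raise the threshold
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Using `log1p` and `expm1` avoids the loss of precision that a direct evaluation of \(1 - (1 - P_{\rm F})^{N_{\rm c}}\) suffers when \(P_{\rm F}\) is many orders of magnitude below one.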

4.5.3 Detection probability

When the signal is present a precise calculation of the pdf of \({\mathcal F}\) is very difficult because the presence of the signal makes the data random process x(t) non-stationary. As a first approximation we can estimate the probability of detection of the signal when the parameters are unknown by the probability of detection when the parameters of the signal are known [given by Equation (49)]. This approximation assumes that when the signal is present the true values of the phase parameters fall within the cell where \({\mathcal F}\) has a maximum. This approximation will be the better the higher the signal-to-noise ratio ρ is.

4.6 Number of templates

To search for gravitational-wave signals we evaluate the \({\mathcal F}\)-statistic on a grid in parameter space. The grid has to be sufficiently fine such that the loss of signals is minimized. In order to estimate the number of points of the grid, or in other words the number of templates that we need to search for a signal, the natural quantity to study is the expectation value of the \({\mathcal F}\)-statistic when the signal is present. We have

The components of the matrix Q are given in Equation (52). Let us rewrite the expectation value (63) in the following form,

where ρ is the signal-to-noise ratio. Let us also define the normalized correlation function

From the Rayleigh principle [67] it follows that the minimum of the normalized correlation function is equal to the smallest eigenvalue of the normalized matrix \(Q^{\rm T} \cdot M'^{-1} \cdot Q \cdot M^{-1}\), whereas the maximum is given by its largest eigenvalue. We define the reduced correlation function as

As the trace of a matrix equals the sum of its eigenvalues, the reduced correlation function \({\mathcal C}\) is equal to the average of the eigenvalues of the normalized correlation function \({{\mathcal C}_{\rm{n}}}\). In this sense we can think of the reduced correlation function as an “average” of the normalized correlation function. The advantage of the reduced correlation function is that it depends only on the intrinsic parameters ξ, and thus it is suitable for studying the number of grid points on which the \({\mathcal F}\)-statistic needs to be evaluated. We also note that the reduced correlation function \({\mathcal C}\) precisely coincides with the autocovariance function \({\mathcal C}\) of the \({\mathcal F}\)-statistic given by Equation (51).

As in the calculation of the number of cells, to estimate the number of templates we perform a Taylor expansion of \({\mathcal C}\) up to second-order terms around the true values of the parameters, and we obtain an equation analogous to Equation (57),

where G is given by Equation (56). By arguments identical to those in deriving the formula for the number of cells we arrive at the following formula for the number of templates:

When C0 = 1/2 the above formula coincides with the formula for the number Nc of cells, Equation (60). Here we would like to place the templates sufficiently closely so that the loss of signals is minimized. Thus 1 − C0 needs to be chosen sufficiently small. The formula (68) for the number of templates assumes that the templates are placed in the centers of hyperspheres and that the hyperspheres fill the parameter space without holes. In order to have a tiling of the parameter space without holes we can place the templates in the centers of hypercubes which are inscribed in the hyperspheres. Then the formula for the number of templates reads

For the case of the signal given by Equation (16) our formula for number of templates is equivalent to the original formula derived by Owen [74]. Owen [74] has also introduced a geometric approach to the problem of template placement involving the identification of the Fisher matrix with a metric on the parameter space. An early study of the template placement for the case of coalescing binaries can be found in [84, 35, 19]. Applications of the geometric approach of Owen to the case of spinning neutron stars and supernova bursts are given in [24, 11].
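The two template counts discussed above (hyperspheres, and hypercubes inscribed in them) can be compared with a short sketch. It assumes that each template covers the region \(\sum_{ij} G_{ij}\Delta\xi_i\Delta\xi_j \leq 1 - C_0\), i.e. a ball of squared radius \(1 - C_0\) in coordinates where G is the metric, and that `proper_volume` stands for the integral of \(\sqrt{\det G}\) over the parameter space. Names and argument conventions are ours.

```python
import math


def templates_hyperspheres(proper_volume, k, c0):
    """Template count when balls of squared radius 1 - C0 are counted by volume."""
    ball = (math.pi * (1.0 - c0)) ** (k / 2.0) / math.gamma(k / 2.0 + 1.0)
    return proper_volume / ball


def templates_hypercubes(proper_volume, k, c0):
    """Template count for hypercubes inscribed in those balls (no holes)."""
    side = 2.0 * math.sqrt((1.0 - c0) / k)   # cube diagonal equals ball diameter
    return proper_volume / side ** k
```

With C0 = 1/2 the first function reproduces the cell count, and the hypercube count is never smaller than the hypersphere count, since an inscribed cube occupies less volume than the ball around it.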

The problem of how to cover the parameter space with the smallest possible number of templates, such that no point in the parameter space lies further away from a grid point than a certain distance, is known in the mathematical literature as the covering problem [28]. The maximum distance of any point to the nearest grid point is called the covering radius R. An important class of coverings are lattice coverings. We define a lattice in K-dimensional Euclidean space \({\mathbb R}^K\) to be the set of points including 0 such that if u and v are lattice points, then also u + v and u − v are lattice points. The basic building block of a lattice is called the fundamental region. A lattice covering is a covering of \({\mathbb R}^K\) by spheres of covering radius R, where the centers of the spheres form a lattice. The most important quantity of a covering is its thickness Θ defined as

In the case of a two-dimensional Euclidean space the best covering is the hexagonal covering and its thickness is ≃ 1.21. For dimensions higher than 2 the best covering is not known. We know however the best lattice covering for dimensions K ≤ 23. These are so-called \(A_K^\ast\) lattices which have a thickness \({\Theta _{A_K^\ast}}\) equal to

where VK is the volume of the K-dimensional sphere of unit radius.

For the case of spinning neutron stars a 3-dimensional grid was constructed consisting of prisms with hexagonal bases [16]. This grid has a thickness around 1.84 which is much better than the cubic grid which has thickness of approximately 2.72. It is worse than the best lattice covering which has the thickness around 1.46. The advantage of an \(A_K^\ast\) lattice over the hypercubic lattice grows exponentially with the number of dimensions.
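The thickness values quoted above can be checked with a few lines of code. The sketch assumes the standard expression for the thickness of the \(A_K^\ast\) lattice, \(\Theta_{A_K^\ast} = V_K \sqrt{K+1}\,[K(K+2)/(12(K+1))]^{K/2}\), and the thickness of the hypercubic lattice, \(V_K (\sqrt{K}/2)^K\), which follows from the covering radius of a unit cube. Function names are illustrative.

```python
import math


def unit_ball_volume(k):
    """Volume V_K of the K-dimensional ball of unit radius."""
    return math.pi ** (k / 2.0) / math.gamma(k / 2.0 + 1.0)


def thickness_a_star(k):
    """Covering thickness of the A_K^* lattice (assumed standard formula)."""
    return (unit_ball_volume(k) * math.sqrt(k + 1.0)
            * (k * (k + 2.0) / (12.0 * (k + 1.0))) ** (k / 2.0))


def thickness_hypercubic(k):
    """Covering thickness of the hypercubic lattice with unit cell side."""
    return unit_ball_volume(k) * (math.sqrt(k) / 2.0) ** k


if __name__ == "__main__":
    for k in range(1, 9):
        ratio = thickness_hypercubic(k) / thickness_a_star(k)
        print(k, round(thickness_a_star(k), 3), round(thickness_hypercubic(k), 3), round(ratio, 2))
```

For K = 2 and K = 3 these expressions give approximately 1.21 and 1.46 for \(A_K^\ast\) and approximately 2.72 for the cubic grid in three dimensions, in agreement with the numbers in the text. The printed ratio shows how quickly the advantage of \(A_K^\ast\) grows with K.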

4.7 Suboptimal filtering

To extract signals from the noise one very often uses filters that are not optimal. We may have to choose an approximate, suboptimal filter because we do not know the exact form of the signal (this is almost always the case in practice) or in order to reduce the computational cost and to simplify the analysis. The most natural and simplest way to proceed is to use as our statistic the \({\mathcal F}\)-statistic where the filters \(h_k^\prime(t;\zeta)\) are the approximate ones instead of the optimal ones matched to the signal. In general the functions \(h_k^\prime(t;\zeta)\) will be different from the functions hk(t; ξ) used in optimal filtering, and also the set of parameters ζ will be different from the set of parameters ξ in optimal filters. We call this procedure the suboptimal filtering and we denote the suboptimal statistic by \({{\mathcal F}_{\rm{s}}}\).

We need a measure of how well a given suboptimal filter performs. To find such a measure we calculate the expectation value of the suboptimal statistic. We get

where

Let us rewrite the expectation value \({\rm{E}}\left[ {{{\mathcal F}_{\rm{s}}}} \right]\) in the following form,

where ρ is the optimal signal-to-noise ratio. The expectation value \({\rm{E}}\left[ {{{\mathcal F}_{\rm{s}}}} \right]\) reaches its maximum equal to \(2 + \rho^2/2\) when the filter is perfectly matched to the signal. A natural measure of the performance of a suboptimal filter is the quantity FF defined by

We call the quantity FF the generalized fitting factor.

In the case of a signal given by

the generalized fitting factor defined above reduces to the fitting factor introduced by Apostolatos [9]:

The fitting factor is the ratio of the maximal signal-to-noise ratio that can be achieved with suboptimal filtering to the signal-to-noise ratio obtained when we use a perfectly matched, optimal filter. We note that for the signal given by Equation (76), FF is independent of the value of the amplitude \(A_0\). For the general signal with 4 constant amplitudes it follows from the Rayleigh principle that the fitting factor is the maximum of the largest eigenvalue of the matrix \(Q^{\rm T} \cdot M'^{-1} \cdot Q \cdot M^{-1}\) over the intrinsic parameters of the signal.

For the case of a signal of the form

where Φ0 is a constant phase, the maximum over Φ0 in Equation (77) can be obtained analytically. Moreover, assuming that over the bandwidth of the signal the spectral density of the noise is constant and that over the observation time cos(Φ(t; ξ)) oscillates rapidly, the fitting factor is approximately given by

In designing suboptimal filters one faces the issue of how small a fitting factor one can accept. A popular rule of thumb is accepting FF = 0.97. Assuming that the amplitude of the signal, and consequently the signal-to-noise ratio, decreases in inverse proportion to the distance from the source, the distance out to which a source can be detected scales with FF and the surveyed volume with FF³. For sources distributed uniformly in space this corresponds to a loss of 1 − 0.97³ ≈ 9%, i.e. roughly 10%, of the signals that would be detected by a matched filter.
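The approximate fitting factor for a phase-mismatched template and the associated loss of signals can be illustrated with a toy calculation. The sketch assumes that, under the conditions stated above (white noise over the signal band, rapidly oscillating phase), the fitting factor is the maximum over the template parameters ζ of the modulus of the time average of \(\exp\{i[\Phi(t;\xi) - \Phi'(t;\zeta)]\}\). The linear chirp signal, the monochromatic template family and all numerical values in the usage note are hypothetical.

```python
import cmath
import math


def fitting_factor(signal_phase, template_phase, parameter_grid, t_obs, n_samples=2048):
    """Approximate FF: max over zeta of |time average of exp(i [Phi - Phi'])|."""
    dt = t_obs / n_samples
    times = [(i + 0.5) * dt for i in range(n_samples)]   # midpoint rule
    best = 0.0
    for zeta in parameter_grid:
        mean = sum(cmath.exp(1j * (signal_phase(t) - template_phase(t, zeta)))
                   for t in times) / n_samples
        best = max(best, abs(mean))
    return best


def fraction_of_signals_lost(ff):
    """Loss for uniformly distributed sources: detectable volume scales as FF**3."""
    return 1.0 - ff ** 3
```

As a usage example, a signal with phase \(2\pi(f_0 t + \dot f t^2/2)\), with f0 = 10, ḟ = 0.02 and an observation time of 10 (arbitrary units), can be searched for with templates \(2\pi f t\) on a grid of frequencies f around f0. The resulting fitting factor lies below one because no monochromatic template follows the frequency drift.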

Proposals for good suboptimal (search) templates for the case of coalescing binaries are given in [26, 91] and for the case of spinning neutron stars in [49, 16].

4.8 Algorithms to calculate the \({\mathcal F}\)-statistic

4.8.1 The two-step procedure

In order to detect signals we search for threshold crossings of the \({\mathcal F}\)-statistic over the intrinsic parameter space. Once we have a threshold crossing we need to find the precise location of the maximum of \({\mathcal F}\) in order to estimate accurately the parameters of the signal. A satisfactory procedure is the two-step procedure. The first step is a coarse search where we evaluate \({\mathcal F}\) on a coarse grid in parameter space and locate threshold crossings. The second step, called fine search, is a refinement around the region of parameter space where the maximum identified by the coarse search is located.

There are two methods to perform the fine search. One is to refine the grid around the threshold crossing found by the coarse search [70, 68, 91, 88], and the other is to use an optimization routine to find the maximum of \({\mathcal F}\) [49, 60]. As initial value to the optimization routine we input the values of the parameters found by the coarse search. There are many maximization algorithms available. One useful method is the Nelder-Mead algorithm [61] which does not require computation of the derivatives of the function being maximized.
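The two-step procedure with grid refinement as the fine search can be sketched on a toy problem. The function `toy_statistic` below is a hypothetical stand-in for \({\mathcal F}\) with a single smooth peak and has nothing to do with a real detection statistic. The grid sizes, the number of refinement levels and the threshold are arbitrary choices made for illustration.

```python
import math


def toy_statistic(x, y):
    """Hypothetical stand-in for F: one smooth peak at (0.3217, -1.104)."""
    return 20.0 * math.exp(-((x - 0.3217) ** 2 + (y + 1.104) ** 2) / 0.02)


def grid_max(func, center, half_width, points):
    """Largest value of func on a square grid centered on `center`."""
    best = None
    for i in range(points):
        x = center[0] - half_width + 2.0 * half_width * i / (points - 1)
        for j in range(points):
            y = center[1] - half_width + 2.0 * half_width * j / (points - 1)
            value = func(x, y)
            if best is None or value > best[0]:
                best = (value, x, y)
    return best


def two_step_search(func, center, half_width, threshold,
                    coarse_points=21, fine_points=21, levels=4):
    """Coarse search for a threshold crossing, then repeated grid refinement."""
    value, x, y = grid_max(func, center, half_width, coarse_points)
    if value < threshold:
        return None                           # no candidate on the coarse grid
    spacing = 2.0 * half_width / (coarse_points - 1)
    for _ in range(levels):
        # Refine within one grid spacing of the current maximum.
        value, x, y = grid_max(func, (x, y), spacing, fine_points)
        spacing = 2.0 * spacing / (fine_points - 1)
    return value, x, y
```

Calling `two_step_search(toy_statistic, (0.0, 0.0), 2.0, threshold=5.0)` returns the value and location of the refined maximum. A derivative-free optimizer such as the Nelder-Mead algorithm mentioned above could replace the refinement loop, starting from the coarse-grid maximum.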

4.8.2 Evaluation of the \({\mathcal F}\)-statistic

Usually the grid in parameter space is very large and it is important to calculate the optimum statistic as efficiently as possible. In special cases the \({\mathcal F}\)-statistic given by Equation (35) can be further simplified. For example, in the case of coalescing binaries \({\mathcal F}\) can be expressed in terms of convolutions that depend on the difference between the time-of-arrival (TOA) of the signal and the TOA parameter of the filter. Such convolutions can be efficiently computed using Fast Fourier Transforms (FFTs). For continuous sources, like gravitational waves from rotating neutron stars observed by ground-based detectors [49] or gravitational waves from stellar mass binaries observed by space-borne detectors [60], the detection statistic \({\mathcal F}\) involves integrals of the general form

where \({{\tilde \xi}^\mu}\) are the intrinsic parameters excluding the frequency parameter ω, m is the amplitude modulation function, and \(\omega\Phi_{\rm mod}\) the phase modulation function. The amplitude modulation function is slowly varying compared to the exponential terms in the integral (80). We see that the integral (80) can be interpreted as a Fourier transform (and computed efficiently with an FFT), if \(\Phi_{\rm mod} = 0\) and if m does not depend on the frequency ω. In the long-wavelength approximation the amplitude function m does not depend on the frequency. In this case Equation (80) can be converted to a Fourier transform by introducing a new time variable \(t_{\rm b}\) [87],

Thus in order to compute the integral (80), for each set of the intrinsic parameters \({{\tilde \xi}^\mu}\) we multiply the data by the amplitude modulation function m, resample according to Equation (81), and perform the FFT. In the case of LISA detector data, when the amplitude modulation m depends on frequency, we can divide the data into several band-passed data sets, choosing the bandwidth for each set sufficiently small so that the change of \(m \exp(i\omega\Phi_{\rm mod})\) is small over the band. In the integral (80) we can then use as the value of the frequency in the amplitude and phase modulation function the maximum frequency of the band of the signal (see [60] for details).
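The multiply, resample and FFT steps can be sketched on synthetic data. The sketch assumes that the new time variable is \(t_{\rm b} = t + \Phi_{\rm mod}(t)\), uses linear interpolation for the resampling, and neglects the Jacobian \(dt/dt_{\rm b}\), which is close to one for the slow modulation chosen here. The test signal, the modulation functions and the small radix-2 FFT are all hypothetical choices made to keep the example self-contained.

```python
import cmath
import math


def fft(values):
    """Recursive radix-2 FFT; len(values) must be a power of two."""
    n = len(values)
    if n == 1:
        return list(values)
    even, odd = fft(values[0::2]), fft(values[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def resample(data, phi_mod):
    """Linearly interpolate data onto a uniform grid in t_b = t + phi_mod(t)."""
    n = len(data)
    out = []
    for tb in range(n):
        t = float(tb)
        for _ in range(20):               # fixed-point solve of t + phi_mod(t) = tb
            t = tb - phi_mod(t)
        i = min(max(int(math.floor(t)), 0), n - 2)
        frac = t - i
        out.append((1.0 - frac) * data[i] + frac * data[i + 1])
    return out


def peak_bin(spectrum):
    """Index and magnitude of the largest positive-frequency bin."""
    k = max(range(1, len(spectrum) // 2), key=lambda j: abs(spectrum[j]))
    return k, abs(spectrum[k])


# Hypothetical noise-free data with unit sample spacing: the carrier sits in
# bin 100 of the t_b axis and is phase modulated in the original time t.
N, K0 = 1024, 100
OMEGA = 2.0 * math.pi * K0 / N


def phi_mod(t):
    return 3.0 * math.sin(2.0 * math.pi * t / N)


def m(t):
    return 1.0 + 0.3 * math.cos(2.0 * math.pi * t / N)


data = [m(t) * math.cos(OMEGA * (t + phi_mod(t))) for t in range(N)]
weighted = [m(t) * x for t, x in enumerate(data)]         # multiply by m
raw = peak_bin(fft(weighted))                             # no resampling
demodulated = peak_bin(fft(resample(weighted, phi_mod)))  # resample, then FFT
```

Without resampling the phase modulation spreads the power over neighbouring bins. After resampling the power is concentrated in the carrier bin, so `demodulated` has a larger peak than `raw`.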

4.8.3 Comparison with the Cramér-Rao bound

In order to test the performance of the maximization method of the \({\mathcal F}\)-statistic it is useful to perform Monte Carlo simulations of the parameter estimation and compare the variances of the estimators with the variances calculated from the Fisher matrix. Such simulations were performed for various gravitational-wave signals [55, 19, 49]. In these simulations we observe that above a certain signal-to-noise ratio, which we call the threshold signal-to-noise ratio, the results of the Monte Carlo simulations agree very well with the calculations of the rms errors from the inverse of the Fisher matrix. However, below the threshold signal-to-noise ratio they differ by a large factor. This threshold effect is well-known in signal processing [96]. There exist more refined theoretical bounds on the rms errors that explain this effect, and they were also studied in the context of the gravitational-wave signal from a coalescing binary [72]. Use of the Fisher matrix in the assessment of the parameter estimators has been critically examined in [95], where a criterion has been established for the signal-to-noise ratio above which the inverse of the Fisher matrix approximates well the covariance of the estimators of the parameters.

Here we present a simple model that explains the deviations from the covariance matrix and reproduces well the results of the Monte Carlo simulations. The model makes use of the concept of the elementary cell of the parameter space that we introduced in Section 4.5.2. The calculation given below is a generalization of the calculation of the rms error for the case of a monochromatic signal given by Rife and Boorstyn [81].

When the values of parameters of the template that correspond to the maximum of the functional \({\mathcal F}\) fall within the cell in the parameter space where the signal is present, the rms error is satisfactorily approximated by the inverse of the Fisher matrix. However, sometimes as a result of noise the global maximum is in the cell where there is no signal. We then say that an outlier has occurred. In the simplest case we can assume that the probability density of the values of the outliers is uniform over the search interval of a parameter, and then the rms error is given by

where Δ is the length of the search interval for a given parameter. The probability that an outlier occurs will be the higher the lower the signal-to-noise ratio is. Let q be the probability that an outlier occurs. Then the total variance \(\sigma^2\) of the estimator of a parameter is the weighted sum of the two errors

where \(\sigma_{\rm CR}\) is the rms error calculated from the covariance matrix for a given parameter. One can show [49] that the probability q can be approximated by the following formula:

where p0 and p1 are the probability density functions of false alarm and detection given by Equations (46) and (47), respectively, and where Nc is the number of cells in the parameter space. Equation (84) is in good but not perfect agreement with the rms errors obtained from the Monte Carlo simulations (see [49]). There are clearly also other reasons for deviations from the Cramér-Rao bound. One important effect (see [72]) is that the functional \({\mathcal F}\) has many local subsidiary maxima close to the global one. Thus at low signal-to-noise ratio the noise may promote a subsidiary maximum to a global one.
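The outlier model can be explored numerically under explicit assumptions. The sketch below takes the variance of a uniform distribution over the search interval, \(\Delta^2/12\), for the outlier term, and assumes that q is the probability that at least one of the \(N_{\rm c} - 1\) noise-only cells exceeds the cell containing the signal, i.e. \(q = 1 - \int_0^\infty p_1(\rho, {\mathcal F})\,[\int_0^{\mathcal F} p_0(y)\,dy]^{N_{\rm c}-1}\,d{\mathcal F}\), with 4 degrees of freedom. The pdf p1 is evaluated as a Poisson mixture of gamma densities rather than through the Bessel function. The integration range and step count are ad hoc, and the code is only meant for ρ below roughly 30.

```python
import math


def p0_cdf(f):
    """Noise-only CDF of F for 4 degrees of freedom: 1 - (1 + F) exp(-F)."""
    return 1.0 - (1.0 + f) * math.exp(-f)


def p1_pdf(rho, f, max_terms=5000):
    """Signal-present pdf of F (4 dof) as a Poisson mixture of gamma densities."""
    half_lam = 0.5 * rho * rho
    weight = math.exp(-half_lam)      # Poisson weight for j = 0
    density = f * math.exp(-f)        # gamma density of shape 2 evaluated at f
    total = 0.0
    for j in range(max_terms):
        term = weight * density
        total += term
        if term <= 1e-17 * total:
            break                     # the series terms have become negligible
        weight *= half_lam / (j + 1)
        density *= f / (j + 2)        # shape j + 2 -> shape j + 3
    return total


def outlier_probability(rho, n_cells, steps=4000):
    """q = 1 - integral of p1(rho, F) * P0(F)**(Nc - 1) dF (trapezoidal rule)."""
    f_max = 0.5 * rho * rho + 12.0 * rho + 60.0
    h = f_max / steps
    integral = 0.0
    for i in range(steps + 1):
        f = i * h
        value = p1_pdf(rho, f) * p0_cdf(f) ** (n_cells - 1)
        integral += value * (0.5 if i in (0, steps) else 1.0)
    return 1.0 - integral * h


def total_rms_error(sigma_cr, search_interval, q):
    """Square root of sigma^2 = q * Delta^2 / 12 + (1 - q) * sigma_CR^2."""
    return math.sqrt(q * search_interval ** 2 / 12.0 + (1.0 - q) * sigma_cr ** 2)
```

A useful sanity check is ρ = 0, where all cells are statistically identical and the signal cell holds the maximum with probability \(1/N_{\rm c}\), so that \(q = 1 - 1/N_{\rm c}\).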

4.9 Upper limits

Detection of a signal is signified by a large value of the \({\mathcal F}\)-statistic that is unlikely to arise from the noise-only distribution. If instead the value of \({\mathcal F}\) is consistent with pure noise with high probability we can place an upper limit on the strength of the signal. One way of doing this is to take the loudest event obtained in the search and solve the equation

for signal-to-noise ratio ρUL, where PD is the detection probability given by Equation (49), \({\mathcal F_{\rm{L}}}\) is the value of the \({\mathcal F}\)-statistic corresponding to the loudest event, and β is a chosen confidence [15, 1]. Then ρUL is the desired upper limit with confidence β.
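The loudest-event equation can be solved numerically by bisection, since the detection probability increases monotonically with ρ at a fixed threshold. The sketch assumes 4 degrees of freedom and Gaussian noise, evaluates the detection probability as a Poisson mixture of gamma tail probabilities, and limits the search to ρ ≤ 30 to avoid floating-point underflow. Function names and default arguments are illustrative.

```python
import math


def detection_probability(rho, f0, max_terms=2000):
    """P_D for 4 degrees of freedom via a Poisson mixture (noncentrality rho**2)."""
    half_lam = 0.5 * rho * rho
    weight = math.exp(-half_lam)            # Poisson weight for j = 0
    tail = (1.0 + f0) * math.exp(-f0)       # P(Gamma(shape 2) > f0)
    term = f0 * f0 * math.exp(-f0) / 2.0    # next term of the tail sum, f0^2 e^{-f0} / 2!
    total, accumulated = 0.0, 0.0
    for j in range(max_terms):
        total += weight * tail
        accumulated += weight
        if 1.0 - accumulated < 1e-12:
            break
        weight *= half_lam / (j + 1)
        tail += term                         # tail probability for shape j + 3
        term *= f0 / (j + 3)
    return total


def upper_limit(f_loudest, confidence, rho_max=30.0, iterations=100):
    """Solve P_D(rho_UL, F_L) = beta for rho_UL by bisection."""
    lo, hi = 0.0, rho_max
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if detection_probability(mid, f_loudest) < confidence:
            lo = mid     # signal too weak to exceed F_L with probability beta
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

For instance, `upper_limit(12.0, 0.95)` returns the signal-to-noise ratio that would have produced a value of the statistic above 12 in 95% of cases (the value 12 is an arbitrary example of a loudest event).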

When gravitational-wave data do not conform to a Gaussian probability density assumed in Equation (49), a more accurate upper limit can be obtained by injecting the signals into the detector’s data and thereby estimating the probability of detection PD [3].

4.10 Network of detectors

Several gravitational-wave detectors can observe gravitational waves from the same source. For example a network of bar detectors can observe a gravitational-wave burst from the same supernova explosion, or a network of laser interferometers can detect the inspiral of the same compact binary system. The space-borne LISA detector can be considered as a network of three detectors that can make three independent measurements of the same gravitational-wave signal. Simultaneous observations are also possible among different types of detectors, for example a search for supernova bursts can be performed simultaneously by bar and laser detectors [17].

We consider the general case of a network of detectors. Let h be the signal vector and let n be the noise vector of the network of detectors, i.e., the vector component hk is the response of the gravitational-wave signal in the kth detector with noise nk. Let us also assume that each nk has zero mean. Assuming that the noise in all detectors is additive the data vector x can be written as

In addition if the noise is a stationary, Gaussian, and continuous random process the log likelihood function is given by

In Equation (87) the scalar product (· |·) is defined by

where \({{\rm{\tilde S}}}\) is the one-sided cross spectral density matrix of the noises of the detector network which is defined by (here E denotes the expectation value)

The analysis is greatly simplified if the cross spectrum matrix S is diagonal. This means that the noises in various detectors are uncorrelated. This is the case when the detectors of the network are in widely separated locations like for example the two LIGO detectors. However, this assumption is not always satisfied. An important case is the LISA detector where the noises of the three independent responses are correlated. Nevertheless for the case of LISA one can find a set of three combinations for which the noises are uncorrelated [78, 80]. When the cross spectrum matrix is diagonal the optimum \({\mathcal F}\)-statistic is just the sum of \({\mathcal F}\)-statistics in each detector.

A derivation of the likelihood function for an arbitrary network of detectors can be found in [39], and applications of optimal filtering for the special cases of observations of coalescing binaries by networks of ground-based detectors are given in [48, 32, 75], for the case of stellar mass binaries observed by LISA space-borne detector in [60], and for the case of spinning neutron stars observed by ground-based interferometers in [33]. The reduced Fisher matrix (Equation 43) for the case of a network of interferometers observing spinning neutron stars has been derived and studied in [79].

A least square fit solution for the estimation of the sky location of a source of gravitational waves by a network of detectors for the case of a broad band burst was obtained in [43].

There is also another important method for analyzing the data from a network of detectors: the search for coincidences of events among detectors. This analysis is particularly important when we search for supernova bursts, the waveforms of which are not very well known. Such signals can be easily mimicked by non-Gaussian behavior of the detector noise. The idea is to filter the data optimally in each of the detectors and obtain candidate events. Then one compares parameters of candidate events, for example the times of arrival of the bursts, among the detectors in the network. This method is widely used in the search for supernovae by networks of bar detectors [14].

4.11 Non-stationary, non-Gaussian, and non-linear data

Equations (34) and (35) provide maximum likelihood estimators only when the noise in which the signal is buried is Gaussian. There are general theorems in statistics indicating that the Gaussian noise is ubiquitous. One is the central limit theorem which states that the mean of any set of variates with any distribution having a finite mean and variance tends to the normal distribution. The other comes from the information theory and says that the probability distribution of a random variable with a given mean and variance which has the maximum entropy (minimum information) is the Gaussian distribution. Nevertheless, analysis of the data from gravitational-wave detectors shows that the noise in the detector may be non-Gaussian (see, e.g., Figure 6 in [13]). The noise in the detector may also be a non-linear and a non-stationary random process.

The maximum likelihood method does not require that the noise in the detector is Gaussian or stationary. However, in order to derive the optimum statistic and calculate the Fisher matrix we need to know the statistical properties of the data. The probability distribution of the data may be complicated, and the derivation of the optimum statistic, the calculation of the Fisher matrix components and the false alarm probabilities may be impractical. There is however one important result that we have already mentioned. The matched filter which is optimal for the Gaussian case is also a linear filter that gives maximum signal-to-noise ratio no matter what the distribution of the data is. Monte Carlo simulations performed by Finn [39] for the case of a network of detectors indicate that the performance of matched-filtering (i.e., the maximum likelihood method for Gaussian noise) is satisfactory for the case of non-Gaussian and stationary noise.

Allen et al. [7, 8] derived an optimal (in the Neyman-Pearson sense, for weak signals) signal processing strategy when the detector noise is non-Gaussian and exhibits tail terms. This strategy is robust, meaning that it is close to optimal for Gaussian noise but far less sensitive than conventional methods to the excess large events that form the tail of the distribution. This strategy is based on a locally optimal test [53] that amounts to comparing a first non-zero derivative \(\Lambda_n\),

of the likelihood ratio with respect to the amplitude of the signal with a threshold instead of the likelihood ratio itself.

In the remaining part of this section we review some statistical tests and methods to detect non-Gaussianity, non-stationarity, and non-linearity in the data. A classical test for a sequence of data to be Gaussian is the Kolmogorov-Smirnov test [27]. It calculates the maximum distance between the cumulative distribution of the data and that of a normal distribution, and assesses the significance of the distance. A similar test is the Lilliefors test [27], but it adjusts for the fact that the parameters of the normal distribution are estimated from the data rather than specified in advance. Another test is the Jarque-Bera test [51] which determines whether sample skewness and kurtosis are unusually different from their Gaussian values.
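As an illustration of the last of these tests, the Jarque-Bera statistic can be computed directly from the sample moments. The sketch uses the usual form \(JB = (n/6)\,[S^2 + (K - 3)^2/4]\), where S is the sample skewness and K the sample kurtosis, together with the large-sample approximation that JB follows a \(\chi^2\) distribution with 2 degrees of freedom under the Gaussian hypothesis. For short data sets this approximation is known to be poor, so the p-value should be read as indicative only.

```python
import math


def jarque_bera(samples):
    """Jarque-Bera statistic and its asymptotic chi-square(2) p-value."""
    n = len(samples)
    mean = sum(samples) / n
    m2 = sum((x - mean) ** 2 for x in samples) / n
    m3 = sum((x - mean) ** 3 for x in samples) / n
    m4 = sum((x - mean) ** 4 for x in samples) / n
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2
    statistic = n / 6.0 * (skewness ** 2 + 0.25 * (kurtosis - 3.0) ** 2)
    p_value = math.exp(-0.5 * statistic)   # tail of chi-square with 2 dof
    return statistic, p_value
```

A small p-value indicates that the skewness or kurtosis of the data is unlikely to have arisen from a Gaussian distribution.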

Let xk and ul be two discrete in time random processes (−∞ < k, l < ∞) and let ul be independent and identically distributed (i.i.d.). We call the process xk linear if it can be represented by

where \(a_l\) are constant coefficients. If \(u_l\) is Gaussian (non-Gaussian), we say that \(x_k\) is linear Gaussian (non-Gaussian). In order to test for linearity and Gaussianity we examine the third-order cumulants of the data. The third-order cumulant \(C_{kl}\) of a zero mean stationary process is defined by

The bispectrum S2(f1, f2) is the two-dimensional Fourier transform of Ckl. The bicoherence is defined as

where S(f) is the spectral density of the process xk. If the process is Gaussian then its bispectrum and consequently its bicoherence is zero. One can easily show that if the process is linear then its bicoherence is constant. Thus if the bispectrum is not zero, then the process is non-Gaussian; if the bicoherence is not constant then the process is also non-linear. Consequently we have the following hypothesis testing problems:

1. \({\rm{H}}_1\): The bispectrum of xk is nonzero.

2. \({\rm{H}}_0\): The bispectrum of xk is zero.

If Hypothesis 1 holds, we can test for linearity, that is, we have a second hypothesis testing problem:

3. \({\rm{H}}_1^\prime\): The bicoherence of xk is not constant.

4. \({\rm{H}}_1^{\prime\prime}\): The bicoherence of xk is a constant.

If Hypothesis 4 holds, the process is linear.
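The statement that a linear process has a constant bicoherence can be checked in a few lines. The sketch below assumes a zero-mean i.i.d. sequence \(u_l\) with variance \(\sigma^2\) and third moment \(\mu_3\), real coefficients \(a_l\), a unit sampling interval, and a bicoherence normalized by the square root of the product of the spectral densities. The symbols \(A(f)\), \(\sigma^2\), and \(\mu_3\) are introduced here for illustration only. Since the \(u_l\) are independent, only the terms in which all three indices coincide contribute to the third-order cumulant:

$$C_{kl} = \mu_3 \sum_{p} a_p\, a_{p+k}\, a_{p+l}, \qquad A(f) = \sum_{l} a_l\, e^{-2\pi i f l},$$

$$S(f) = \sigma^2\, |A(f)|^2, \qquad S_2(f_1, f_2) = \mu_3\, A(f_1)\, A(f_2)\, A^{*}(f_1 + f_2),$$

$$|B(f_1, f_2)|^2 = \frac{|S_2(f_1, f_2)|^2}{S(f_1 + f_2)\, S(f_1)\, S(f_2)} = \frac{\mu_3^2}{\sigma^6}.$$

Under these assumptions the ratio does not depend on the frequencies, and it vanishes when \(\mu_3 = 0\), which is the case for Gaussian \(u_l\).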

Using the above tests we can detect non-Gaussianity and, if the process is non-Gaussian, non-linearity of the process. The distribution of the test statistic \(B(f_1, f_2)\), Equation (93), can be calculated in terms of \(\chi^2\) distributions. For more details see [45].
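A rough numerical illustration of these ideas is sketched below. It estimates the squared magnitude of the bicoherence by averaging over non-overlapping data segments, assuming a normalization by the product of the estimated spectral densities. The function name `squared_bicoherence`, the segment length, and the test signals are assumptions of this example. It relies on NumPy and does not reproduce the test statistic or significance calculation of [45].

```python
import numpy as np


def squared_bicoherence(x, seg_len=32):
    """Segment-averaged estimate of |B(f1, f2)|^2.

    Returns a 1-D array with one value per pair of frequency bins (f1, f2)
    satisfying 1 <= f2 <= f1 and f1 + f2 < seg_len // 2.
    """
    x = np.asarray(x, dtype=float)
    n_seg = x.size // seg_len
    segs = x[: n_seg * seg_len].reshape(n_seg, seg_len)
    segs = segs - segs.mean(axis=1, keepdims=True)  # remove each segment's mean
    X = np.fft.fft(segs, axis=1)

    # Averaged periodogram: estimate of the spectral density S(f).
    power = np.mean(np.abs(X) ** 2, axis=0) / seg_len

    half = seg_len // 2
    bins = np.arange(1, half)
    f1, f2 = np.meshgrid(bins, bins, indexing="ij")
    keep = (f2 <= f1) & (f1 + f2 < half)
    f1, f2 = f1[keep], f2[keep]

    # Averaged triple product: estimate of the bispectrum S2(f1, f2).
    triple = X[:, f1] * X[:, f2] * np.conj(X[:, f1 + f2])
    bispec = triple.mean(axis=0) / seg_len

    return np.abs(bispec) ** 2 / (power[f1] * power[f2] * power[f1 + f2])


rng = np.random.default_rng(0)
n = 32 * 1024
gaussian = rng.normal(size=n)
skewed = rng.exponential(size=n) - 1.0         # i.i.d., zero mean, non-Gaussian
filtered = np.convolve(skewed, [1.0, 0.5])[:n]  # linear non-Gaussian process

b2_gauss = squared_bicoherence(gaussian)
b2_skew = squared_bicoherence(skewed)
b2_filt = squared_bicoherence(filtered)
print(b2_gauss.mean(), b2_skew.mean(), b2_filt.mean())
```

For the Gaussian sequence the estimate should scatter around a small value set by the finite number of segments. For the exponential noise and its filtered version it should stay roughly flat near the squared skewness of the driving noise, which equals 4 for the exponential distribution.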

Non-stationarity of the data is comparatively easy to examine. One can divide the data into short segments and, for each segment, calculate the mean and standard deviation and estimate the spectrum. One can then investigate the variation of these quantities from one segment of the data to the next. This simple analysis can be useful in identifying and eliminating bad data. Another quantity to examine is the autocorrelation function of the data. For a stationary process the autocorrelation function should decay to zero with increasing lag. A test to detect certain non-stationarities, used in the analysis of econometric time series, is the Dickey-Fuller test [25]. It models the data by an autoregressive process and tests whether the values of the parameters of the process deviate from those allowed by a stationary model. A robust test for detecting non-stationarity in data from gravitational-wave detectors has been developed by Mohanty [69]. The test involves applying Student’s t-test to Fourier coefficients of segments of the data.
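The segment-wise analysis described above can be sketched as follows. The example computes the mean and standard deviation of consecutive segments and flags those whose standard deviation departs strongly from the median value. The function names, the threshold `factor`, and the simulated data are hypothetical choices made for this illustration, and the spectrum estimate is left out for brevity.

```python
import random
import statistics


def segment_statistics(data, seg_len):
    """Mean and standard deviation of consecutive non-overlapping segments."""
    stats = []
    for start in range(0, len(data) - seg_len + 1, seg_len):
        seg = data[start:start + seg_len]
        stats.append((statistics.fmean(seg), statistics.pstdev(seg)))
    return stats


def flag_outlying_segments(stats, factor=1.5):
    """Indices of segments whose standard deviation differs from the median
    segment standard deviation by more than `factor` (hypothetical threshold)."""
    med = statistics.median([s for _, s in stats])
    return [i for i, (_, s) in enumerate(stats)
            if s > factor * med or s < med / factor]


rng = random.Random(42)
data = [rng.gauss(0.0, 1.0) for _ in range(8000)]
# Inject a non-stationary stretch: the noise level triples in samples 4000-4999.
for i in range(4000, 5000):
    data[i] *= 3.0

stats = segment_statistics(data, seg_len=500)
flagged = flag_outlying_segments(stats)
print(flagged)
```

With 500-sample segments the injected stretch covers the segments with indices 8 and 9, which are the ones expected to be flagged as candidates for bad data.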

References

Abbott, B. et al. (LIGO Scientific Collaboration), “Analysis of LIGO data for gravitational waves from binary neutron stars”, Phys. Rev. D, 69, 122001, 1–16, (2004). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/0308069.

Abbott, B. et al. (LIGO Scientific Collaboration), “First upper limits from LIGO on gravitational wave bursts”, Phys. Rev. D, 69, 102001, 1–21, (2004). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/0312056.

Abbott, B. et al. (LIGO Scientific Collaboration), “Setting upper limits on the strength of periodic gravitational waves from PSR J1939+2134 using the first science data from the GEO 600 and LIGO detectors”, Phys. Rev. D, 69, 082004, 1–16, (2004). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/0308050.

Adler, R.J., The Geometry of Random Fields, (Wiley, Chichester, U.K.; New York, U.S.A., 1981).

Allen, B., “The stochastic gravity-wave background: Sources and detection”, in Marck, J.-A., and Lasota, J.-P., eds., Relativistic gravitation and gravitational radiation, Proceedings of the Les Houches School of Physics, held in Les Houches, Haute Savoie, 26 September–6 October, 1995, Cambridge Contemporary Astrophysics, (Cambridge University Press, Cambridge, U.K., 1997). Related online version (cited on 8 January 2005): http://arXiv.org/abs/astro-ph/9604033.

Allen, B., Blackburn, J.K., Brady, P.R., Creighton, J.D., Creighton, T., Droz, S., Gillespie, A.D., Hughes, S.A., Kawamura, S., Lyons, T.T., Mason, J.E., Owen, B.J., Raab, F.J., Regehr, M.W., Sathyaprakash, B.S., Savage Jr, R.L., Whitcomb, S., and Wiseman, A.G., “Observational Limit on Gravitational Waves from Binary Neutron Stars in the Galaxy”, Phys. Rev. Lett., 83, 1498–1501, (1999). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/9903108.

Allen, B., Creighton, J.D.E., Flanagan, É.É., and Romano, J.D., “Robust statistics for deterministic and stochastic gravitational waves in non-Gaussian noise I: Frequentist analyses”, Phys. Rev. D, 65, 122002, (2002). Related online version (cited on 10 June 2007): http://arxiv.org/abs/gr-qc/0105100.

Allen, B., Creighton, J.D.E., Flanagan, É.É., and Romano, J.D., “Robust statistics for deterministic and stochastic gravitational waves in non-Gaussian noise II: Bayesian analyses”, Phys. Rev. D, 67, 122002, (2003). Related online version (cited on 10 June 2007): http://arxiv.org/abs/gr-qc/0205015.

Apostolatos, T.A., “Search templates for gravitational waves from precessing, inspiraling binaries”, Phys. Rev. D, 52, 605–620, (1995).

Armstrong, J.W., Estabrook, F.B., and Tinto, M., “Time-Delay Interferometry for Space-Based Gravitational Wave Searches”, Astrophys. J., 527, 814–826, (1999).

Arnaud, N., Barsuglia, M., Bizouard, M., Brisson, V., Cavalier, F., Davier, M., Hello, P., Kreckelbergh, S., and Porter, E.K., “Coincidence and coherent data analysis methods for gravitational wave bursts in a network of interferometric detectors”, Phys. Rev. D, 68, 102001, 1–18, (2003). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/0307100.

Arun, K.G., Iyer, B.R., Sathyaprakash, B.S., and Sundararajan, P.A., “Parameter estimation of inspiralling compact binaries using 3.5 post-Newtonian gravitational wave phasing: The non-spinning case”, Phys. Rev. D, 71, 084008, 1–16, (2005). Related online version (cited on 23 July 2007): http://arXiv.org/abs/gr-qc/0411146v4. Erratum: Phys. Rev. D, 72, 069903, (2005).

Astone, P., “Long-term operation of the Rome “Explorer” cryogenic gravitational wave detector”, Phys. Rev. D, 47, 362–375, (1993).

Astone, P., Babusci, D., Baggio, L., Bassan, M., Blair, D.G., Bonaldi, M., Bonifazi, P., Busby, D., Carelli, P., Cerdonio, M., Coccia, E., Conti, L., Cosmelli, C., D’Antonio, S., Fafone, V., Falferi, P., Fortini, P., Frasca, S., Giordano, G., Hamilton, W.O., Heng, I.S., Ivanov, E.N., Johnson, W.W., Marini, A., Mauceli, E., McHugh, M.P., Mezzena, R., Minenkov, Y., Modena, I., Modestino, G., Moleti, A., Ortolan, A., Pallottino, G.V., Pizzella, G., Prodi, G.A., Quintieri, L., Rocchi, A., Rocco, E., Ronga, F., Salemi, F., Santostasi, G., Taffarello, L., Terenzi, R., Tobar, M.E., Torrioli, G., Vedovato, G., Vinante, A., Visco, M., Vitale, S., and Zendri, J.P., “Methods and results of the IGEC search for burst gravitational waves in the years 1997–2000”, Phys. Rev. D, 68, 022001, 1–33, (2003).

Astone, P., Babusci, D., Bassan, M., Borkowski, K.M., Coccia, E., D’Antonio, S., Fafone, V., Giordano, G., Jaranowski, P., Królak, A., Marini, A., Minenkov, Y., Modena, I., Modestino, G., Moleti, A., Pallottino, G.V., Pietka, M., Pizzella, G., Quintieri, L., Rocchi, A., Ronga, F., Terenzi, R., and Visco, M., “All-sky upper limit for gravitational radiation from spinning neutron stars”, Class. Quantum Grav., 20, S665–S676, (2003). Paper from the 7th Gravitational Wave Data Analysis Workshop, Kyoto, Japan, 17–19 December 2002.

Astone, P., Borkowski, K.M., Jaranowski, P., and Królak, A., “Data analysis of gravitational-wave signals from spinning neutron stars. IV. An all-sky search”, Phys. Rev. D, 65, 042003, 1–18, (2002).

Astone, P., Lobo, J.A., and Schutz, B.F., “Coincidence experiments between interferometric and resonant bar detectors of gravitational waves”, Class. Quantum Grav., 11, 2093–2112, (1994).

Balasubramanian, R., and Dhurandhar, S.V., “Estimation of parameters of gravitational waves from coalescing binaries”, Phys. Rev. D, 57, 3408–3422, (1998).

Balasubramanian, R., Sathyaprakash, B.S., and Dhurandhar, S.V., “Gravitational waves from coalescing binaries: detection strategies and Monte Carlo estimation of parameters”, Phys. Rev. D, 53, 3033–3055, (1996). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/9508011. Erratum: Phys. Rev. D, 54, 1860, (1996).

Bayes, T., “An essay towards solving a problem in doctrine of chances”, Philos. Trans. R. Soc. London, 53, 293–315, (1763).

Berti, E., Buonanno, A., and Will, C.M., “Estimating spinning binary parameters and testing alternative theories of gravity with LISA”, Phys. Rev. D, 71, 084025, 1–24, (2005). Related online version (cited on 23 July 2007): http://arXiv.org/abs/gr-qc/0411129v2.

Blanchet, L., “Gravitational Radiation from Post-Newtonian Sources and Inspiralling Compact Binaries”, Living Rev. Relativity, 9, lrr-2006-4, (2006). URL (cited on 10 June 2007): http://www.livingreviews.org/lrr-2006-4.

Bonazzola, S., and Gourgoulhon, E., “Gravitational waves from pulsars: Emission by the magnetic field induced distortion”, Astron. Astrophys., 312, 675–690, (1996). Related online version (cited on 8 January 2005): http://arXiv.org/abs/astro-ph/9602107.

Brady, P.R., Creighton, T., Cutler, C., and Schutz, B.F., “Searching for periodic sources with LIGO”, Phys. Rev. D, 57, 2101–2116, (1998). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/9702050.

Brooks, C., Introductory econometrics for finance, (Cambridge University Press, Cambridge, U.K.; New York, U.S.A., 2002).

Buonanno, A., Chen, Y., and Vallisneri, M., “Detection template families for gravitational waves from the final stages of binary-black-hole inspirals: Nonspinning case”, Phys. Rev. D, 67, 024016, 1–50, (2003). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/0205122.

Conover, W.J., Practical Nonparametric Statistics, (Wiley, New York, U.S.A., 1998), 3rd edition.

Conway, J.H., and Sloane, N.J.A., Sphere Packings, Lattices and Groups, vol. 290 of Grundlehren der mathematischen Wissenschaften, (Springer, New York, U.S.A., 1999), 3rd edition.

Croce, R.P., Demma, T., Longo, M., Marano, S., Matta, V., Pierro, V., and Pinto, I.M., “Correlator bank detection of gravitational wave chirps—False-alarm probability, template density, and thresholds: Behind and beyond the minimal-match issue”, Phys. Rev. D, 70, 122001, 1–19, (2004). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/0405023.

Cutler, C., “Angular resolution of the LISA gravitational wave detector”, Phys. Rev. D, 57, 7089–7102, (1998). Related online version (cited on 23 July 2007): http://arXiv.org/abs/gr-qc/9703068v1.

Cutler, C., Apostolatos, T.A., Bildsten, L., Finn, L.S., Flanagan, É.É., Kennefick, D., Marković, D.M., Ori, A., Poisson, E., and Sussman, G.J., “The Last Three Minutes: Issues in Gravitational-Wave Measurements of Coalescing Compact Binaries”, Phys. Rev. Lett., 70, 2984–2987, (1993). Related online version (cited on 8 January 2005): http://arXiv.org/abs/astro-ph/9208005.

Cutler, C., and Flanagan, É.É., “Gravitational waves from merging compact binaries: How accurately can one extract the binary’s parameters from the inspiral waveform?”, Phys. Rev. D, 49, 2658–2697, (1994). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/9402014.

Cutler, C., and Schutz, B.F., “The generalized F-statistic: multiple detectors and multiple GW pulsars”, Phys. Rev. D, 72, 063006, (2005). Related online version (cited on 10 June 2007): http://arxiv.org/abs/gr-qc/0504011.

Davis, M.H.A., “A Review of Statistical Theory of Signal Detection”, in Schutz, B.F., ed., Gravitational Wave Data Analysis, Proceedings of the NATO Advanced Research Workshop, held at Dyffryn House, St. Nicholas, Cardiff, Wales, 6–9 July 1987, vol. 253 of NATO ASI Series C, 73–94, (Kluwer, Dordrecht, Netherlands; Boston, U.S.A., 1989).

Dhurandhar, S.V., and Sathyaprakash, B.S., “Choice of filters for the detection of gravitational waves from coalescing binaries. II. Detection in colored noise”, Phys. Rev. D, 49, 1707–1722, (1994).

Dhurandhar, S.V., and Schutz, B.F., “Filtering coalescing binary signals: Issues concerning narrow banding, thresholds, and optimal sampling”, Phys. Rev. D, 50, 2390–2405, (1994).

Estabrook, F.B., and Wahlquist, H.D., “Response of Doppler spacecraft tracking to gravitational radiation”, Gen. Relativ. Gravit., 6, 439–447, (1975).

Finn, L.S., “Detection, measurement, and gravitational radiation”, Phys. Rev. D, 46, 5236–5249, (1992). Related online version (cited on 23 July 2007): http://arXiv.org/abs/gr-qc/9209010v1.

Finn, L.S., “Aperture synthesis for gravitational-wave data analysis: Deterministic sources”, Phys. Rev. D, 63, 102001, 1–18, (2001). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/0010033.

Finn, L.S., and Chernoff, D.F., “Observing binary inspiral in gravitational radiation: One interferometer”, Phys. Rev. D, 47, 2198–2219, (1993). Related online version (cited on 8 January 2005): http://arXiv.org/abs/gr-qc/9301003.

Fisz, M., Probability Theory and Mathematical Statistics, (Wiley, New York, U.S.A., 1963).

Giampieri, G., “On the antenna pattern of an orbiting interferometer”, Mon. Not. R. Astron. Soc., 289, 185–195, (1997).

Gürsel, Y., and Tinto, M., “Nearly optimal solution to the inverse problem for gravitational-wave bursts”, Phys. Rev. D, 40, 3884–3938, (1989).

Helstrom, C.W., Statistical Theory of Signal Detection, vol. 9 of International Series of Monographs in Electronics and Instrumentation, (Pergamon Press, Oxford, U.K.; New York, U.S.A., 1968), 2nd edition.

Hinich, M.J., “Testing for Gaussianity and linearity of a stationary time series”, J. Time Series Anal., 3, 169–176, (1982).

Hughes, S.A., “Untangling the merger history of massive black holes with LISA”, Mon. Not. R. Astron. Soc., 331, 805–816, (2002). Related online version (cited on 23 July 2007): http://arXiv.org/abs/astro-ph/0108483v1.

Hughes, S.A., and Menou, K., “Golden binary gravitational-wave sources: Robust probes of strong-field gravity”, Astrophys. J., 623, 689–699, (2005). Related online version (cited on 23 July 2007): http://arXiv.org/abs/astro-ph/0410148v2.

Jaranowski, P., and Królak, A., “Optimal solution to the inverse problem for the gravitational wave signal of a coalescing binary”, Phys. Rev. D, 49, 1723–1739, (1994).

Jaranowski, P., and Królak, A., “Data analysis of gravitational-wave signals from spinning neutron stars. III. Detection statistics and computational requirements”, Phys. Rev. D, 61, 062001, 1–32, (2000).

Jaranowski, P., Królak, A., and Schutz, B.F., “Data analysis of gravitational-wave signals from spinning neutron stars: The signal and its detection”, Phys. Rev. D, 58, 063001, 1–24, (1998).

Judge, G.G., Hill, R.C., Griffiths, W.E., Lütkepohl, H., and Lee, T.-C., The Theory and Practice of Econometrics, (Wiley, New York, U.S.A., 1980).

Kafka, P., “Optimal Detection of Signals through Linear Devices with Thermal Noise Sources and Application to the Munich-Frascati Weber-Type Gravitational Wave Detectors”, in De Sabbata, V., and Weber, J., eds., Topics in Theoretical and Experimental Gravitation Physics, Proceedings of the International School of Cosmology and Gravitation held in Erice, Trapani, Sicily, March 13–25, 1975, vol. 27 of NATO ASI Series B, 161, (Plenum Press, New York, U.S.A., 1977).

Kassam, S.A., Signal Detection in Non-Gaussian Noise, (Springer, New York, U.S.A., 1988).

Kendall, M., and Stuart, A., The Advanced Theory of Statistics. Vol. 2: Inference and Relationship, number 2, (C. Griffin, London, 1979).