Abstract

Equations arising in general relativity are usually too complicated to be solved analytically and one must rely on numerical methods to solve sets of coupled partial differential equations. Among the possible choices, this paper focuses on a class called spectral methods in which, typically, the various functions are expanded in sets of orthogonal polynomials or functions. First, a theoretical introduction of spectral expansion is given with a particular emphasis on the fast convergence of the spectral approximation. We then present different approaches to solving partial differential equations, first limiting ourselves to the one-dimensional case, with one or more domains. Generalization to more dimensions is then discussed. In particular, the case of time evolutions is carefully studied and the stability of such evolutions investigated. We then present results obtained by various groups in the field of general relativity by means of spectral methods. Work that does not involve explicit time evolution is discussed, going from rapidly-rotating strange stars to the computation of black-hole-binary initial data. Finally, the evolution of various systems of astrophysical interest is presented, from supernovae core collapse to black-hole-binary mergers.

1 Introduction

Einstein’s equations represent a complicated set of nonlinear partial differential equations for which some exact [30] or approximate [31] analytical solutions are known. But these solutions are not always suitable for physically or astrophysically interesting systems, which require an accurate description of their relativistic gravitational field without any assumption on the symmetry or with the presence of matter fields, for instance. Therefore, many efforts have been undertaken to solve Einstein’s equations with the help of computers in order to model relativistic astrophysical objects. Within this field of numerical relativity, several numerical methods have been experimented with and a large variety are currently being used. Among them, spectral methods are now increasingly popular and the goal of this review is to give an overview (at the moment it is written or updated) of the methods themselves, the groups using them and the results obtained. Although some of the theoretical framework of spectral methods is given in Sections 2 to 4, more details can be found in the books by Gottlieb and Orszag [94], Canuto et al. [56, 57, 58], Fornberg [79], Boyd [48] and Hesthaven et al. [117]. While these references have, of course, been used for writing this review, they may also help the interested reader to get a deeper understanding of the subject. This review is organized as follows: hereafter in the introduction, we briefly introduce spectral methods, their usage in computational physics and give a simple example. Section 2 gives important notions concerning polynomial interpolation and the solution of ordinary differential equations (ODE) with spectral methods. Multidomain approach is also introduced there, whereas some multidimensional techniques are described in Section 3. The cases of time-dependent partial differential equations (PDE) are treated in Section 4. 
The last two sections then review results obtained using spectral methods: for stationary configurations and initial data (Section 5), and for the time evolution (Section 6) of stars, gravitational waves and black holes.

1.1 About spectral methods

When doing simulations and solving PDEs, one faces the problem of representing and differentiating functions on a computer, which deals only with (finite) integers. Let us take a simple example of a function f: [−1, 1] → ℝ. The most straightforward way to approximate its derivative is through finite-difference methods: first one must set up a grid

$$\{x_i\}_{i = 0 \ldots N} \subset [-1, 1]$$

of N + 1 points in the interval, and represent f by its N + 1 values on these grid points

$$\{f_i = f(x_i)\}_{i = 0 \ldots N}.$$

Then, the (approximate) representation of the derivative f′ shall be, for instance,

$$\forall i < N,\quad f_i^{\prime} = f^{\prime}({x_i}) \simeq {{{f_{i + 1}} - {f_i}} \over {{x_{i + 1}} - {x_i}}}.$$(1)

If we suppose an equidistant grid, so that ∀i < N, \(x_{i+1} - x_i = \Delta x = 2/N\), the error in the approximation (1) will decay as Δx (first-order scheme). One can imagine higher-order schemes, with more points involved for the computation of each derivative and, for a scheme of order n, the accuracy can vary as \((\Delta x)^n \propto 1/N^n\).
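The first-order behavior of such a scheme can be checked numerically. The following is a minimal sketch under stated assumptions: the test function (sin, with derivative cos) and the helper name `forward_difference_error` are chosen here for illustration and do not come from the text.

```python
import numpy as np

def forward_difference_error(N, f=np.sin, df=np.cos):
    """Max error of the one-sided difference (f_{i+1} - f_i) / (x_{i+1} - x_i) on [-1, 1]."""
    x = np.linspace(-1.0, 1.0, N + 1)                  # equidistant grid of N + 1 points
    fx = f(x)
    approx = (fx[1:] - fx[:-1]) / (x[1:] - x[:-1])     # defined for i < N only
    return np.max(np.abs(approx - df(x[:-1])))

# Doubling N should roughly halve the error for a first-order scheme.
errors = {N: forward_difference_error(N) for N in (50, 100, 200)}
ratios = (errors[50] / errors[100], errors[100] / errors[200])
```

Both entries of `ratios` should be close to 2, which is the signature of an error proportional to Δx.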

Spectral methods represent an alternate way: the function f is no longer represented through its values on a finite number of grid points, but using its coefficients (coordinates) \(\{c_i\}_{i = 0 \ldots N}\) in a finite basis of known functions \(\{\Phi_i\}_{i = 0 \ldots N}\)

$$f(x) \simeq \sum\limits_{i = 0}^N {{c_i}{\Phi _i}(x).}$$(2)

A relatively simple case is, for instance, when f(x) is a periodic function of period two, and the \(\Phi_{2i}(x) = \cos(\pi i x)\), \(\Phi_{2i+1}(x) = \sin(\pi i x)\) are trigonometric functions. Equation (2) is then nothing but the truncated Fourier decomposition of f. In general, derivatives can be computed from the \(c_i\)'s, with the knowledge of the expression for each derivative \(\Phi_i^{\prime}(x)\) as a function of \(\{\Phi_i\}_{i = 0 \ldots N}\). The decomposition (2) is approximate in the sense that \(\{\Phi_i\}_{i = 0 \ldots N}\) represent a complete basis of some finite-dimensional functional space, whereas f usually belongs to some other infinite-dimensional space. Moreover, the coefficients \(c_i\) are computed with finite accuracy. Among the major advantages of using spectral methods is the rapid decay of the error (faster than any power of 1/N, and in practice often exponential, \(e^{-N}\)) for well-behaved functions (see Section 2.4.4); one, therefore, has an infinite-order scheme.

In a more formal and mathematical way, it is useful to work within the methods of weighted residuals (MWR, see also Section 2.5). Let us consider the PDE

$$Lu(x) = s(x),\quad x \in U \subset {{\mathbb R}^d},$$(3)

$$Bu(x) = 0,\quad x \in \partial U,$$(4)

where L is a linear operator, B the operator defining the boundary conditions and s is a source term. A function ū is said to be a numerical solution of this PDE if it satisfies the boundary conditions (4) and makes “small” the residual

$$R = L\bar u - s.$$(5)

If the solution is searched for in a finite-dimensional subspace of some given Hilbert space (any relevant \(L_U^2\) space) in terms of the expansion (2), then the functions \(\{\Phi_i(x)\}_{i = 0 \ldots N}\) are called trial functions and, in addition, the choice of a set of test functions \(\{\xi_i(x)\}_{i = 0 \ldots N}\) defines the notion of smallness for the residual by means of the Hilbert space scalar product

$$\forall i = 0 \ldots N,\quad ({\xi _i},R) = 0.$$(6)

Within this framework, various numerical methods can be classified according to the choice of the trial functions:

- Finite differences: the trial functions are overlapping local polynomials of fixed order (lower than N).

- Finite elements: the trial functions are local smooth functions, which are nonzero only on subdomains of U.

- Spectral methods: the trial functions are global smooth functions on U.

Various choices of the test functions define different types of spectral methods, as detailed in Section 2.5. Usual choices for the trial functions are (truncated) Fourier series, spherical harmonics or orthogonal families of polynomials.

1.2 Spectral methods in physics

We do not list here all the fields of physics in which spectral methods are employed, but rather sketch the variety of equations and physical models that have been simulated with such techniques. Spectral methods originally appeared in numerical fluid dynamics, where large spectral hydrodynamic codes have been regularly used to study turbulence and the transition to turbulence since the seventies. For fully resolved, direct numerical calculations of Navier-Stokes equations, spectral methods were often preferred for their high accuracy. Historically, they also allowed for two or three-dimensional simulations of fluid flows, because of their reasonable computer memory requirements. Many applications of spectral methods in fluid dynamics have been discussed by Canuto et al. [56, 58], and the techniques developed in that field are of some interest to numerical relativity.

From pure fluid-dynamics simulations, spectral methods rapidly spread to connected fields of research: geophysics [189], meteorology and climate modeling [216]. In this last research category, global circulation models are used as boundary conditions to more specific (lower-scale) models, with improved micro-physics. In this way, spectral methods are only a part of the global numerical model, combined with other techniques to bring the highest accuracy, for a given computational power. A solution to the Maxwell equations can, of course, also be obtained with spectral methods and therefore, magneto-hydrodynamics (MHD) has been studied with these techniques (see, e.g., Hollerbach [119]). This has been the case in astrophysics too, where, for example, spectral three-dimensional numerical models of solar magnetic dynamo action realized by turbulent convection have been computed [52]. The Kompaneets equation, describing the evolution of the photon distribution function in a plasma bath at thermal equilibrium within the Fokker-Planck approximation, has been solved using spectral methods to model the X-ray emission of Her X-1 [33, 40]. In simulations of cosmological structure formation or galaxy evolution, many N-body codes rely on a spectral solver for the computation of the gravitational force by the particle-mesh algorithm. The mass corresponding to each particle is decomposed onto neighboring grid points, thus defining a density field. The Poisson equation giving the Newtonian gravitational potential is then usually solved in Fourier space for both fields [118].

To our knowledge, the first published result of the numerical solution of Einstein’s equations, using spectral methods, is the spherically-symmetric collapse of a neutron star to a black hole by Gourgoulhon in 1991 [95]. He used spectral methods as they were developed in the Meudon group by Bonazzola and Marck [44]. Later studies of quickly-rotating neutron stars [41] (stationary axisymmetric models), the collapse of a neutron star in tensor-scalar theory of gravity [156] (spherically-symmetric dynamic spacetime), and quasiequilibrium configurations of neutron star binaries [39] and of black holes [110] (three-dimensional and stationary spacetimes) have grown in complexity, up to the three-dimensional time-dependent numerical solution of Einstein’s equations [37]. On the other hand, the first fully three-dimensional evolution of the whole Einstein system was achieved in 2001 by Kidder et al. [127], where a single black hole was evolved to t ≃ 600 M − 1300 M using excision techniques. They used spectral methods as developed in the Cornell/Caltech group by Kidder et al. [125] and Pfeiffer et al. [171]. Since then, they have focused on the evolution of black-hole-binary systems, which has recently been simulated up to merger and ring down by Scheel et al. [185]. Other groups (for instance Ansorg et al. [10], Bartnik and Norton [21], Frauendiener [81] and Tichy [219]) have also used spectral methods to solve Einstein’s equations; Sections 5 and 6 are devoted to a more detailed review of these works.

1.3 A simple example

Before entering the details of spectral methods in Sections 2, 3 and 4, let us give here their spirit with the simple example of the Poisson equation in a spherical shell:

$$\Delta \phi (r,\theta ,\varphi) = \sigma (r,\theta ,\varphi),$$(7)

where Δ is the Laplace operator (93) expressed in spherical coordinates (r, θ, φ) (see also Section 3.2). We want to solve Equation (7) in the domain where 0 < Rmin ≤ r ≤ Rmax, θ ∈ [0, π], φ ∈ [0, 2π). This Poisson equation naturally arises in numerical relativity when, for example, solving for initial conditions or the Hamiltonian constraint in the 3+1 formalism [97]: the linear part of these equations can be cast in form (7), and the nonlinearities put into the source σ, with an iterative scheme on ϕ.

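First, the angular parts of both fields are decomposed into a (finite) set of spherical harmonics \(\{Y_\ell ^m\}\) (see Section 3.2.2):

$$\sigma (r,\theta ,\varphi) \simeq \sum\limits_{\ell = 0}^{{\ell _{\max}}} {\sum\limits_{m = - \ell}^\ell {{s_{\ell m}}(r)Y_\ell ^m(\theta ,\varphi),}}$$(8)

with a similar formula relating ϕ to the radial functions \(f_{\ell m}(r)\). Because spherical harmonics are eigenfunctions of the angular part of the Laplace operator, the Poisson equation can be equivalently solved as a set of ordinary differential equations for each couple (ℓ, m), in terms of the coordinate r:

$$\forall (\ell ,m),\quad {{{d^2}{f_{\ell m}}} \over {d{r^2}}} + {2 \over r}{{d{f_{\ell m}}} \over {dr}} - {{\ell (\ell + 1)} \over {{r^2}}}{f_{\ell m}} = {s_{\ell m}}(r).$$(9)

We then map

$$[{R_{\min}},{R_{\max}}] \to [-1,1],\quad r \mapsto \xi = {{2r - {R_{\max}} - {R_{\min}}} \over {{R_{\max}} - {R_{\min}}}},$$(10)

and decompose each field in a (finite) basis of Chebyshev polynomials \(\{T_i\}_{i = 0 \ldots N}\) (see Section 2.4.3):

$${f_{\ell m}}(\xi) = \sum\limits_{i = 0}^N {{a_{i\ell m}}{T_i}(\xi),} \quad {s_{\ell m}}(\xi) = \sum\limits_{i = 0}^N {{c_{i\ell m}}{T_i}(\xi).}$$(11)

Each function \(f_{\ell m}(r)\) can be regarded as a column-vector \(A_{\ell m}\) of its N + 1 coefficients \(a_{i\ell m}\) in this basis; the linear differential operator on the left-hand side of Equation (9) being, thus, a matrix \(L_{\ell m}\) acting on the vector:

$${L_{\ell m}}{A_{\ell m}} = {S_{\ell m}},$$(12)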

with Sℓm being the vector of the N +1 coefficients ciℓm of sℓm(r). This matrix can be computed from the recurrence relations fulfilled by the Chebyshev polynomials and their derivatives (see Section 2.4.3 for details).

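The matrix L is singular because problem (7) is ill posed. One must indeed specify boundary conditions at r = Rmin and r = Rmax. For simplicity, let us suppose

$$\forall (\theta ,\varphi),\quad \phi (r = {R_{\min}},\theta ,\varphi) = \phi (r = {R_{\max}},\theta ,\varphi) = 0.$$(13)

To impose these boundary conditions, we adopt the tau method (see Section 2.5.2): we build the matrix \({\bar L}\), taking L and replacing the last two lines by the boundary conditions, expressed in terms of the coefficients from the properties of Chebyshev polynomials, \(T_i(\pm 1) = (\pm 1)^i\):

$$\forall (\ell ,m),\quad \sum\limits_{i = 0}^N {{{(-1)}^i}{a_{i\ell m}} = 0} \quad {\rm{and}}\quad \sum\limits_{i = 0}^N {{a_{i\ell m}} = 0.}$$(14)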

Equations (14) are equivalent to boundary conditions (13), within the considered spectral approximation, and they represent the last two lines of \({\bar L}\), which can now be inverted and give the coefficients of the solution ϕ.

If one summarizes the steps:

1. Set up an adapted grid for the computation of spectral coefficients (e.g., equidistant in the angular directions and Chebyshev-Gauss-Lobatto collocation points; see Section 2.4.3).

2. Get the values of the source σ on these grid points.

3. Perform a spherical-harmonics transform (for example, using some available library [151]), followed by the Chebyshev transform (using a Fast Fourier Transform (FFT), or a Gauss-Lobatto quadrature) of the source σ.

4. For each couple of values (ℓ, m), build the corresponding matrix \({\bar L}\) with the boundary conditions, and invert the system (using any available linear-algebra package) with the coefficients of \(s_{\ell m}\) as the right-hand side.

5. Perform the inverse spectral transform to get the values of ϕ on the grid points from its coefficients.

A numerical implementation of this algorithm has been reported by Grandclément et al. [109], who have observed that the error decayed as \({e^{- {\ell _{\max}}}} \cdot {e^{- N}}\), provided that the source σ was smooth. Machine round-off accuracy can be reached with ℓmax ∼ N ∼ 30, which makes the matrix inversions of step 4 very cheap in terms of CPU and the whole method affordable in terms of memory usage. These are the main advantages of using spectral methods, as shall be shown in the following sections.
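The tau procedure of this section can be illustrated on a simpler model problem. The following is a minimal sketch and not the implementation reported in [109]: it assumes the one-dimensional equation u″ = s on [−1, 1] with u(±1) = 0 in place of the radial operator, fills the second-derivative matrix from the Chebyshev coefficient relation (49) of Section 2.4.3, and obtains the source coefficients with NumPy's `chebinterpolate`, which samples at Chebyshev-Gauss points rather than Gauss-Lobatto points. The function names `second_derivative_matrix` and `solve_tau` are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def second_derivative_matrix(N):
    """Matrix of d^2/dx^2 acting on the Chebyshev coefficients a_0 ... a_N."""
    D2 = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        for p in range(n + 2, N + 1, 2):            # p >= n + 2 with p + n even
            D2[n, p] = p * (p**2 - n**2) / (2.0 if n == 0 else 1.0)
    return D2

def solve_tau(source, N):
    """Solve u'' = source on [-1, 1] with u(-1) = u(1) = 0 by a tau method."""
    rhs = C.chebinterpolate(source, N)              # Chebyshev coefficients of the source
    L_bar = second_derivative_matrix(N)
    i = np.arange(N + 1)
    L_bar[N - 1, :] = (-1.0) ** i                   # u(-1) = sum_i (-1)^i a_i = 0
    L_bar[N, :] = 1.0                               # u(+1) = sum_i a_i = 0
    rhs[N - 1:] = 0.0                               # right-hand sides of the two conditions
    return np.linalg.solve(L_bar, rhs)

# Manufactured test: u = sin(pi x) satisfies u'' = -pi^2 sin(pi x) and u(+-1) = 0.
N = 24
a = solve_tau(lambda x: -np.pi**2 * np.sin(np.pi * x), N)
x = np.linspace(-1.0, 1.0, 201)
max_error = np.max(np.abs(C.chebval(x, a) - np.sin(np.pi * x)))
```

With a smooth source, `max_error` should already be many orders of magnitude below what a low-order finite-difference scheme reaches with the same number of unknowns.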

2 Concepts in One Dimension

In this section the basic concept of spectral methods in one spatial dimension is presented. Some general properties of the approximation of functions by polynomials are introduced. The main formulae of the spectral expansion are then given and two sets of polynomials are discussed (Legendre and Chebyshev polynomials). A particular emphasis is put on convergence properties (i.e., the way the spectral approximation converges to the real function).

In Section 2.5, three different methods of solving an ordinary differential equation (ODE) are exhibited and applied to a simple problem. Section 2.6 is concerned with multidomain techniques. After giving some motivations for the use of multidomain decomposition, four different implementations are discussed, as well as their respective merits. One simple example is given, which uses only two domains.

For problems in more than one dimension see Section 3.

2.1 Best polynomial approximation

Polynomials are the only functions that a computer can exactly evaluate and so it is natural to try to approximate any function by a polynomial. When considering spectral methods, we use global polynomials on a few domains. This is to be contrasted with finite difference schemes, for instance, where only local polynomials are considered.

In this particular section, real functions on [−1, 1] are considered. A theorem due to Weierstrass (see for instance [65]) states that the set ℙ of all polynomials is a dense subspace of all the continuous functions on [−1, 1], with the norm ∥·∥∞. This maximum norm is defined as

$${\left\Vert f \right\Vert _\infty} = \mathop {\max}\limits_{x \in [-1,1]} \left\vert {f(x)} \right\vert.$$(15)

This means that, for any continuous function f on [−1, 1], there exists a sequence of polynomials (pi), i ∈ ℕ that converges uniformly towards f:

$$\mathop {\lim}\limits_{i \to \infty} {\left\Vert {f - {p_i}} \right\Vert _\infty} = 0.$$(16)

This theorem shows that it is probably a good idea to approximate continuous functions by polynomials.

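Given a continuous function f, the best polynomial approximation of degree N is the polynomial \(p_N^{\ast}\) that minimizes the norm of the difference between f and itself:

$${\left\Vert {f - p_N^{\ast}} \right\Vert _\infty} = \min \left\{{{{\left\Vert {f - p} \right\Vert}_\infty},\;p \in {{\mathbb P}_N}} \right\}.$$(17)

The Chebyshev alternate theorem states that for any continuous function f, \(p_N^{\ast}\) is unique (theorem 9.1 of [178] and theorem 23 of [149]). There exist N + 2 points xi ∈ [−1, 1] such that the error is exactly attained at those points in an alternate manner:

$$f({x_i}) - p_N^{\ast}({x_i}) = {(-1)^{i + \delta}}{\left\Vert {f - p_N^{\ast}} \right\Vert _\infty},$$(18)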

where δ = 0 or δ = 1. An example of a function and its best polynomial approximation is shown in Figure 1.

2.2 Interpolation on a grid

A grid X on the interval [−1, 1] is a set of N + 1 points xi ∈ [−1, 1], 0 ≤ i ≤ N. These points are called the nodes of the grid X.

Let us consider a continuous function f and a family of grids X with N + 1 nodes xi. Then, there exists a unique polynomial of degree N, \(I_N^Xf\), that coincides with f at each node:

$$I_N^Xf({x_i}) = f({x_i}),\quad 0 \leq i \leq N.$$(19)

\(I_N^Xf\) is called the interpolant of f through the grid X. \(I_N^Xf\) can be expressed in terms of the Lagrange cardinal polynomials:

$$I_N^Xf = \sum\limits_{i = 0}^N {f({x_i})\ell _i^X(x),}$$(20)

where \(\ell _i^X\) are the Lagrange cardinal polynomials. By definition, \(\ell _i^X\) is the unique polynomial of degree N that vanishes at all nodes of the grid X, except at xi, where it is equal to one. It is easy to show that the Lagrange cardinal polynomials can be written as

$$\ell _i^X(x) = \prod\limits_{j = 0,j \neq i}^N {{{x - {x_j}} \over {{x_i} - {x_j}}}.}$$(21)

Figure 2 shows some examples of Lagrange cardinal polynomials. An example of a function and its interpolant on a uniform grid can be seen in Figure 3.

Thanks to the Chebyshev alternate theorem, one can see that the best approximation of degree N is an interpolant of the function at N + 1 nodes. However, in general, the associated grid is not known. The error made by interpolating on a given grid X can be compared to the smallest possible error, that of the best approximation. One can show that (see Prop. 7.1 of [178]):

$${\left\Vert {f - I_N^Xf} \right\Vert _\infty} \leq (1 + {\Lambda _N}(X)){\left\Vert {f - p_N^{\ast}} \right\Vert _\infty},$$(22)

where \(\Lambda_N(X)\) is the Lebesgue constant of the grid X and is defined as:

$${\Lambda _N}(X) = \mathop {\max}\limits_{x \in [-1,1]} \sum\limits_{i = 0}^N {\left\vert {\ell _i^X(x)} \right\vert.}$$(23)

A theorem by Erdős [72] states that, for any choice of grid X, there exists a constant C > 0 such that:

$${\Lambda _N}(X) > {2 \over \pi}\ln (N + 1) - C.$$(24)

It immediately follows that \(\Lambda_N \to \infty\) when N → ∞. This is related to a result from 1914 by Faber [73] that states that for any grid, there always exists at least one continuous function f, whose interpolant does not converge uniformly to f. An example of such failure of convergence is shown in Figure 4, where the convergence of the interpolant to the function \(f = {1 \over {1 + 16{x^2}}}\) is clearly nonuniform (see the behavior near the boundaries of the interval). This is known as the Runge phenomenon.
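The growth of the Lebesgue constant can be estimated directly from its definition. The sketch below is an illustration only: it approximates the maximum over [−1, 1] by dense sampling, and the helper name `lebesgue_constant`, the value N = 16 and the number of sample points are assumptions made here. It compares a uniform grid with a grid made of the zeros of a Chebyshev polynomial.

```python
import numpy as np

def lebesgue_constant(nodes, n_samples=5001):
    """Estimate max_x sum_i |l_i(x)| by sampling x densely on [-1, 1]."""
    x = np.linspace(-1.0, 1.0, n_samples)
    total = np.zeros_like(x)
    for i, xi in enumerate(nodes):
        others = np.delete(nodes, i)
        # Lagrange cardinal polynomial l_i(x) = prod_{j != i} (x - x_j) / (x_i - x_j)
        l_i = np.prod((x[:, None] - others) / (xi - others), axis=1)
        total += np.abs(l_i)
    return total.max()

N = 16
uniform = np.linspace(-1.0, 1.0, N + 1)
chebyshev = np.cos((2 * np.arange(N + 1) + 1) * np.pi / (2 * N + 2))   # zeros of T_{N+1}
lam_uniform = lebesgue_constant(uniform)
lam_chebyshev = lebesgue_constant(chebyshev)
```

For this N, `lam_chebyshev` stays of order unity whereas `lam_uniform` is larger by orders of magnitude, which is consistent with the failure of convergence seen on uniform grids.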

Moreover, a theorem by Cauchy (theorem 7.2 of [178]) states that, for all functions \(f \in {{\mathcal C}^{(N + 1)}}\), the interpolation error on a grid X of N + 1 nodes is given by

$$f(x) - I_N^Xf(x) = {{{f^{(N + 1)}}(\epsilon)} \over {(N + 1)!}}w_{N + 1}^X(x),$$(25)

where ϵ ∊ [−1, 1]. \(w_{N + 1}^X\) is the nodal polynomial of X, being the only polynomial of degree N + 1, with a leading coefficient of 1, and that vanishes on the nodes of X. It is then easy to show that

$${\left\Vert {f - I_N^Xf} \right\Vert _\infty} \leq {1 \over {(N + 1)!}}{\left\Vert {{f^{(N + 1)}}} \right\Vert _\infty}{\left\Vert {w_{N + 1}^X} \right\Vert _\infty}.$$(26)

In Equation (25), one has a priori no control on the term involving \(f^{(N+1)}\). For a given function, it can be rather large and this is indeed the case for the function f shown in Figure 4 (one can check, for instance, that \(\vert f^{(N+1)}(1)\vert\) becomes larger and larger). However, one can hope to minimize the interpolation error by choosing a grid such that the nodal polynomial is as small as possible. A theorem by Chebyshev states that this choice is unique and is given by a grid, whose nodes are the zeros of the Chebyshev polynomial \(T_{N+1}\) (see Section 2.3 for more details on Chebyshev polynomials). With such a grid, one can achieve

$${\left\Vert {w_{N + 1}^X} \right\Vert _\infty} = {1 \over {{2^N}}},$$(27)

which is the smallest possible value (see Equation (18), Section 4.2, Chapter 5 of [122]). So, a grid based on nodes of Chebyshev polynomials can be expected to perform better than a standard uniform one. This is what can be seen in Figure 5, which shows the same function and its interpolants as in Figure 4, but with a Chebyshev grid. Clearly, the Runge phenomenon is no longer present. One can check that, for this choice of function f, the uniform convergence of the interpolant to the function is recovered. This is because \(\Vert w_{N + 1}^X{\Vert _\infty}\) decreases faster than \(f^{(N+1)}/(N + 1)!\) increases. Of course, Faber’s result implies that this cannot be true for all the functions. There still must exist some functions for which the interpolant does not converge uniformly to the function itself (it is actually the class of functions that are not absolutely continuous, like the Cantor function).

Figure 5: Same as Figure 4 but using a grid based on the zeros of Chebyshev polynomials. The Runge phenomenon is no longer present.
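The contrast between the two grids can be reproduced with a few lines of code. This is a minimal sketch, assuming a direct evaluation of the Lagrange form of the interpolant (adequate for the small N used here, but not a numerically robust general-purpose method); the helper names `interpolate` and `max_error` and the chosen values of N are assumptions made for illustration.

```python
import numpy as np

def interpolate(nodes, values, x):
    """Evaluate the Lagrange interpolant through (nodes, values) at the points x."""
    result = np.zeros_like(x)
    for i, xi in enumerate(nodes):
        others = np.delete(nodes, i)
        result += values[i] * np.prod((x[:, None] - others) / (xi - others), axis=1)
    return result

def max_error(nodes, f, n_samples=2001):
    """Max-norm distance between f and its interpolant, estimated on a dense sample."""
    x = np.linspace(-1.0, 1.0, n_samples)
    return np.max(np.abs(interpolate(nodes, f(nodes), x) - f(x)))

f = lambda x: 1.0 / (1.0 + 16.0 * x**2)
errors_uniform, errors_chebyshev = {}, {}
for N in (8, 16, 24):
    errors_uniform[N] = max_error(np.linspace(-1.0, 1.0, N + 1), f)
    k = np.arange(N + 1)
    chebyshev_nodes = np.cos((2 * k + 1) * np.pi / (2 * N + 2))    # zeros of T_{N+1}
    errors_chebyshev[N] = max_error(chebyshev_nodes, f)
```

The entries of `errors_uniform` grow with N (Runge phenomenon), while those of `errors_chebyshev` decrease.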

2.3 Polynomial interpolation

2.3.1 Orthogonal polynomials

Spectral methods are often based on the notion of orthogonal polynomials. In order to define orthogonality, one must define the scalar product of two functions on an interval [−1, 1]. Let us consider a positive function w on [−1, 1] called the measure. The scalar product of f and g with respect to this measure is defined as:

$${(f,g)_w} = \int_{- 1}^1 {f(x)g(x)w(x)\,{\rm{d}}x.}$$(28)

A basis of \({\mathbb P}_N\) is then a set of N + 1 polynomials \(\{p_n\}_{n = 0 \ldots N}\), where \(p_n\) is of degree n and the polynomials are orthogonal: \((p_i, p_j)_w = 0\) for i ≠ j.

The projection \(P_Nf\) of a function f on this basis is then

$${P_N}f = \sum\limits_{n = 0}^N {{{\hat f}_n}{p_n},}$$(29)

where the coefficients of the projection are given by

$${\hat f_n} = {{{{(f,{p_n})}_w}} \over {{{({p_n},{p_n})}_w}}}.$$(30)

The difference between f and its projection goes to zero when N increases:

Figure 6 shows the function \(f = \cos^3(\pi x/2) + (x + 1)^3/8\) and its projection on Chebyshev polynomials (see Section 2.4.3) for N = 4 and N = 8, illustrating the rapid convergence of \(P_Nf\) to f.

At first sight, the projection seems to be an interesting means of numerically representing a function. However, in practice this is not the case. Indeed, to determine the projection of a function, one needs to compute the integrals (30), which requires the evaluation of f at a great number of points, making the whole numerical scheme impractical.

2.3.2 Gaussian quadratures

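The main theorem of Gaussian quadratures (see for instance [57]) states that, given a measure w, there exist N + 1 positive real numbers wn and N + 1 real numbers xn ∈ [−1, 1] such that:

$$\forall f \in {{\mathbb P}_{2N + \delta}},\quad \int_{- 1}^1 {f(x)w(x)\,{\rm{d}}x} = \sum\limits_{n = 0}^N {f({x_n}){w_n}.}$$(32)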

The wn are called the weights and the xn are the collocation points. The integer δ can take several values depending on the exact quadrature considered:

- Gauss quadrature: δ = 1.

- Gauss-Radau: δ = 0 and x0 = −1.

- Gauss-Lobatto: δ = −1 and x0 = −1, xN = 1.

Gauss quadrature is the best choice because it applies to polynomials of higher degree but Gauss-Lobatto quadrature is often more useful for numerical purposes because the outermost collocation points coincide with the boundaries of the interval, making it easier to impose matching or boundary conditions. More detailed results and demonstrations about those quadratures can be found for instance in [57].
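The degree of exactness of the Gauss quadrature can be verified numerically for the Legendre measure w = 1. The following sketch relies on NumPy's `leggauss` routine to provide the nodes and weights; the value N = 4 and the helper name `quadrature_error` are choices made here for illustration.

```python
import numpy as np
from numpy.polynomial.legendre import leggauss

N = 4
x, w = leggauss(N + 1)        # N + 1 Legendre-Gauss nodes and weights (measure w = 1)

def quadrature_error(degree):
    """Quadrature error on the monomial x**degree, whose exact integral is known."""
    exact = 0.0 if degree % 2 else 2.0 / (degree + 1)
    return abs(np.sum(w * x**degree) - exact)

# Exact up to degree 2N + 1 (delta = 1), but no longer for degree 2N + 2.
error_exact_range = max(quadrature_error(d) for d in range(2 * N + 2))
error_beyond = quadrature_error(2 * N + 2)
```

Here `error_exact_range` should be at the level of round-off, while `error_beyond` is clearly nonzero.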

2.3.3 Spectral interpolation

As already stated in Section 2.3.1, the main drawback of projecting a function in terms of orthogonal polynomials comes from the difficulty to compute the integrals (30). The idea of spectral methods is to approximate the coefficients of the projection by making use of Gaussian quadratures. By doing so, one can define the interpolant of a function f by

$${I_N}f = \sum\limits_{n = 0}^N {{{\tilde f}_n}{p_n}(x),}$$(33)

where

$${\tilde f_n} = {1 \over {{\gamma _n}}}\sum\limits_{i = 0}^N {f({x_i}){p_n}({x_i}){w_i}} \quad {\rm{and}}\quad {\gamma _n} = \sum\limits_{i = 0}^N {p_n^2({x_i}){w_i}.}$$(34)

The \({{\tilde f}_n}\) exactly coincide with the coefficients \({{\hat f}_n}\), if the Gaussian quadrature is applicable for computing Equation (30), that is, for all \(f \in {\mathbb P}_{N + \delta}\). So, in general, \(I_Nf \neq P_Nf\) and the difference between the two is called the aliasing error. The advantage of using \({{\tilde f}_n}\) is that they are computed by estimating f at the N + 1 collocation points only.

One can show that \(I_Nf\) and f coincide at the collocation points: \(I_Nf(x_i) = f(x_i)\) so that \(I_N\) interpolates f on the grid, whose nodes are the collocation points. Figure 7 shows the function \(f = \cos^3(\pi x/2) + (x + 1)^3/8\) and its spectral interpolation using Chebyshev polynomials, for N = 4 and N = 6.

2.3.4 Two equivalent descriptions

The description of a function f in terms of its spectral interpolation can be given in two different, but equivalent spaces:

- in the configuration space, if the function is described by its value at the N + 1 collocation points f(xi);

- in the coefficient space, if one works with the N + 1 coefficients \({{\tilde f}_i}\).

There is a bijection between both spaces and the following relations enable us to go from one to the other:

- the coefficients can be computed from the values of f(xi) using Equation (34);

- the values at the collocation points are expressed in terms of the coefficients by making use of the definition of the interpolant (33):

$$f({x_i}) = \sum\limits_{n = 0}^N {{{\tilde f}_n}{p_n}({x_i}).}$$(35)

Depending on the operation one has to perform on a given function, it may be more convenient to work in one space or the other. For instance, the square root of a function is very easily given in the configuration space by \(\sqrt {f({x_i})}\), whereas the derivative can be computed in the coefficient space if, as is generally the case, the derivatives of the basis polynomials are known, by \({f^{\prime}}(x) = \sum\limits_{n = 0}^N {{{\tilde f}_n}{p^{\prime}}_n(x)}\).
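The passage between the two spaces can be sketched for Chebyshev polynomials on the Gauss-Lobatto grid. The code below is only an illustration under stated assumptions: it uses plain matrix sums instead of a fast transform, takes the collocation points and weights quoted in Section 2.4.3, computes the normalization of each coefficient as the discrete sum of \(T_n^2(x_i) w_i\), and the helper names `to_coefficients` and `to_values` are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

N = 24
i = np.arange(N + 1)
x = np.cos(np.pi * i / N)                         # Chebyshev-Gauss-Lobatto points
w = np.full(N + 1, np.pi / N)                     # associated weights
w[0] = w[-1] = np.pi / (2 * N)

T = C.chebvander(x, N)                            # T[i, n] = T_n(x_i)
gamma = (w[:, None] * T**2).sum(axis=0)           # gamma_n = sum_i T_n(x_i)^2 w_i

def to_coefficients(values):
    """Configuration space -> coefficient space (discrete quadrature sums)."""
    return (w[:, None] * values[:, None] * T).sum(axis=0) / gamma

def to_values(coeffs):
    """Coefficient space -> configuration space."""
    return T @ coeffs

f = lambda x: np.cos(np.pi * x / 2) ** 3 + (x + 1) ** 3 / 8
df = lambda x: (-1.5 * np.pi * np.cos(np.pi * x / 2) ** 2 * np.sin(np.pi * x / 2)
                + 3 * (x + 1) ** 2 / 8)

coeffs = to_coefficients(f(x))
roundtrip_error = np.max(np.abs(to_values(coeffs) - f(x)))

# The derivative is taken in the coefficient space, then evaluated on the grid.
derivative_error = np.max(np.abs(C.chebval(x, C.chebder(coeffs)) - df(x)))
```

The round trip reproduces the grid values to round-off, and the derivative computed from the coefficients agrees closely with the analytic one for this smooth function.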

2.4 Usual polynomials

2.4.1 Sturm-Liouville problems and convergence

The Sturm-Liouville problems are eigenvalue problems of the form:

on the interval [−1, 1]. p, q and w are real-valued functions such that:

- p(x) is continuously differentiable, strictly positive and continuous at x = ±1.

- q(x) is continuous, non-negative and bounded.

- w(x) is continuous, non-negative and integrable.

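The solutions are then the eigenvalues λi and the eigenfunctions ui(x). The eigenfunctions are orthogonal with respect to the measure w:

$${({u_m},{u_n})_w} = \int_{- 1}^1 {{u_m}(x){u_n}(x)w(x)\,{\rm{d}}x} = 0\quad {\rm{for}}\;m \neq n.$$(37)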

Singular Sturm-Liouville problems are particularly important for spectral methods. A Sturm-Liouville problem is singular if and only if the function p vanishes at the boundaries x = ±1. One can show that, if the functions of the spectral basis are chosen to be the solutions of a singular Sturm-Liouville problem, then the convergence of the interpolant to the function is faster than any power law of N, N being the order of the expansion (see Section 5.2 of [57]). One talks about spectral convergence. Note that this does not necessarily imply that the convergence is exponential. Convergence properties are discussed in more detail for Legendre and Chebyshev polynomials in Section 2.4.4.

Conversely, it can be shown that spectral convergence is not ensured when considering solutions of regular Sturm-Liouville problems [57].

In what follows, two usual types of solutions of singular Sturm-Liouville problems are considered: Legendre and Chebyshev polynomials.

2.4.2 Legendre polynomials

Legendre polynomials Pn are eigenfunctions of the following singular Sturm-Liouville problem:

$${\left({(1 - {x^2})P_n^{\prime}} \right)^{\prime}} + n(n + 1){P_n} = 0.$$(38)

In the notations of Equation (36), \(p = 1 - x^2\), q = 0, w = 1 and \(\lambda_n = -n(n + 1)\).

It follows that Legendre polynomials are orthogonal on [−1, 1] with respect to the measure w(x) = 1. Moreover, the scalar product of two polynomials is given by:

$$({P_n},{P_m}) = \int_{- 1}^1 {{P_n}{P_m}\,{\rm{d}}x} = {2 \over {2n + 1}}{\delta _{mn}}.$$(39)

Starting from P0 = 1 and P1 = x, the successive polynomials can be computed by the following recurrence expression:

$$(n + 1){P_{n + 1}}(x) = (2n + 1)x{P_n}(x) - n{P_{n - 1}}(x).$$(40)

Among the various properties of Legendre polynomials, one can note that i) Pn has the same parity as n. ii) Pn is of degree n. iii) \(P_n(\pm 1) = (\pm 1)^n\). iv) Pn has exactly n zeros on [−1, 1]. The first polynomials are shown in Figure 8.

The weights and locations of the collocation points associated with Legendre polynomials depend on the choice of quadrature.

- Legendre-Gauss: xi are the zeros of \(P_{N+1}\) and \({w_i} = {2 \over {(1 - x_i^2){{[P_{N + 1}^{\prime}({x_i})]}^2}}}\).

- Legendre-Gauss-Radau: x0 = −1 and xi are the zeros of \(P_N + P_{N+1}\). The weights are given by \({w_0} = {2 \over {{{(N + 1)}^2}}}\) and \({w_i} = {1 \over {{{(N + 1)}^2}}}{{1 - {x_i}} \over {{{[{P_N}({x_i})]}^2}}}\).

- Legendre-Gauss-Lobatto: x0 = −1, xN = 1 and xi are the zeros of \(P_N^{\prime}\). The weights are \({w_i} = {2 \over {N(N + 1)}}{1 \over {{{[{P_N}({x_i})]}^2}}}\).

These values have no analytic expression, but they can be computed numerically in an efficient way.
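As an illustration of such a numerical computation, the Legendre-Gauss-Lobatto nodes and weights can be obtained from the roots of \(P_N^{\prime}\). This is a minimal sketch that relies on the generic root finder of NumPy's `Legendre` class, which is adequate for moderate N but is not claimed to be the most efficient approach; the function name `legendre_gauss_lobatto` and the value N = 8 are assumptions.

```python
import numpy as np
from numpy.polynomial import Legendre

def legendre_gauss_lobatto(N):
    """Nodes and weights of the (N + 1)-point Legendre-Gauss-Lobatto quadrature."""
    P_N = Legendre.basis(N)
    interior = np.sort(P_N.deriv().roots().real)        # zeros of P_N'
    x = np.concatenate(([-1.0], interior, [1.0]))
    w = 2.0 / (N * (N + 1) * P_N(x) ** 2)
    return x, w

N = 8
x, w = legendre_gauss_lobatto(N)
sum_of_weights = w.sum()                                 # should equal the integral of 1, i.e. 2
# The quadrature is exact up to degree 2N - 1; check it on x^(2N - 2).
error_high_degree = abs(np.sum(w * x ** (2 * N - 2)) - 2.0 / (2 * N - 1))
```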

Some elementary operations can easily be performed on the coefficient space. Let us assume that a function f is given by its coefficients an so that \(f = \sum\limits_{n = 0}^N {{a_n}{P_n}}\). Then, the coefficients bn of \(Hf = \sum\limits_{n = 0}^N {{b_n}{P_n}}\) can be found as a function of an, for various operators H. For instance,

- if H is multiplication by x, then:

$${b_n} = {n \over {2n - 1}}{a_{n - 1}} + {{n + 1} \over {2n + 3}}{a_{n + 1}}\quad (n \geq 1);$$(41)

- if H is the derivative:

$${b_n} = (2n + 1)\sum\limits_{p = n + 1,p + n\;{\rm{odd}}}^N {{a_p}};$$(42)

- if H is the second derivative:

$${b_n} = (n + 1/2)\sum\limits_{p = n + 2,p + n\;{\rm{even}}}^N {[p(p + 1) - n(n + 1)]{a_p}}.$$(43)

These kinds of relations enable one to represent the action of H as a matrix acting on the vector of an, the product being the coefficients of Hf, i.e. the bn.

2.4.3 Chebyshev polynomials

Chebyshev polynomials Tn are eigenfunctions of the following singular Sturm-Liouville problem:

In the notation of Equation (36), \(p = \sqrt {1 - {x^2}}, q = 0,\omega = 1/\sqrt {1 - {x^2}}\) and λn = −n.

It follows that Chebyshev polynomials are orthogonal on [−1, 1] with respect to the measure \(w = 1/\sqrt {1 - {x^2}}\) and the scalar product of two polynomials is

Given that T0 = 1 and T1 = x, the higher-order polynomials can be obtained by making use of the recurrence

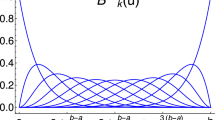

This implies the following simple properties: i) Tn has the same parity as n; ii) Tn is of degree n; iii) Tn(±1) = (±1)n; iv) Tn has exactly n zeros on [−1, 1]. The first polynomials are shown in Figure 9.

Contrary to the Legendre case, both the weights and positions of the collocation points are given by analytic formulae:

- Chebyshev-Gauss: \({x_i} = \cos {{(2i + 1)\pi} \over {2N + 2}}\) and \({w_i} = {\pi \over {N + 1}}\).

- Chebyshev-Gauss-Radau: \({x_i} = \cos {{2\pi i} \over {2N + 1}}\). The weights are \({w_0} = {\pi \over {2N + 1}}\) and \({w_i} = {{2\pi} \over {2N + 1}}\).

- Chebyshev-Gauss-Lobatto: \({x_i} = \cos {{\pi i} \over N}\). The weights are \({w_0} = {w_N} = {\pi \over {2N}}\) and \({w_i} = {\pi \over N}\).

As for the Legendre case, the action of various linear operators H can be expressed in the coefficient space. This means that the coefficients bn of Hf are given as functions of the coefficients an of f. For instance,

- if H is multiplication by x:

$${b_n} = {1 \over 2}[(1 + {\delta _{0\;n - 1}}){a_{n - 1}} + {a_{n + 1}}]\quad (n \geq 1);$$(47)

- if H is the derivative:

$${b_n} = {2 \over {(1 + {\delta _{0\;n}})}}\sum\limits_{p = n + 1,p + n\;{\rm{odd}}}^N {p{a_p}};$$(48)

- if H is the second derivative:

$${b_n} = {1 \over {(1 + {\delta _{0\;n}})}}\sum\limits_{p = n + 2,p + n\;{\rm{even}}}^N {p({p^2} - {n^2}){a_p}}.$$(49)
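The matrix form of these relations can be written down explicitly. The following sketch builds the derivative matrix from Equation (48) and compares it with NumPy's `chebder`, used here only as an independent cross-check; the function name `derivative_matrix`, the value N = 10 and the random test coefficients are assumptions made for illustration.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def derivative_matrix(N):
    """Matrix form of Equation (48): b = D a gives the Chebyshev coefficients of f'."""
    D = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        for p in range(n + 1, N + 1, 2):             # p >= n + 1 with p + n odd
            D[n, p] = 2.0 * p / (2.0 if n == 0 else 1.0)
    return D

N = 10
rng = np.random.default_rng(0)
a = rng.standard_normal(N + 1)                        # arbitrary test coefficients

D = derivative_matrix(N)
b_first = D @ a                                       # coefficients of f'
b_second = D @ D @ a                                  # coefficients of f''

# chebder drops the trailing zero coefficients, so pad before comparing.
ref_first = np.append(C.chebder(a), 0.0)
ref_second = np.append(C.chebder(a, 2), [0.0, 0.0])
```

Applying the matrix twice gives the second derivative, since differentiation never raises the degree and so no truncation error is introduced.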

2.4.4 Convergence properties

One of the main advantages of spectral methods is the very fast convergence of the interpolant INf to the function f, at least for smooth enough functions. Let us consider a \({{\mathcal C}^m}\) function f; one can place the following upper bounds on the difference between f and its interpolant INf:

- For Legendre:

$${\left\Vert {{I_N}f - f} \right\Vert _{{L^2}}} \leq {{{C_1}} \over {{N^{m - 1/2}}}}\sum\limits_{k = 0}^m {{{\left\Vert {{f^{(k)}}} \right\Vert}_{{L^2}}}}.$$(50)

- For Chebyshev:

$${\left\Vert {{I_N}f - f} \right\Vert _{L_w^2}} \leq {{{C_2}} \over {{N^m}}}\sum\limits_{k = 0}^m {{{\left\Vert {{f^{(k)}}} \right\Vert}_{L_w^2}}}.$$(51)

$${\left\Vert {{I_N}f - f} \right\Vert _\infty} \leq {{{C_3}} \over {{N^{m - 1/2}}}}\sum\limits_{k = 0}^m {{{\left\Vert {{f^{(k)}}} \right\Vert}_{L_w^2}}}.$$(52)

The \(C_i\) are positive constants. An interesting limit of the above estimates concerns a \({{\mathcal C}^\infty}\) function. One can then see that the difference between f and \(I_N f\) decays faster than any power of N. This is spectral convergence. Note that this does not necessarily imply that the error decays exponentially (think about \(\exp \left({- \sqrt N} \right)\), for instance). Exponential convergence is achieved only for analytic functions, i.e. functions that are locally given by a convergent power series.

An example of this very fast convergence is shown in Figure 10. The error clearly decays exponentially, the function being analytic, until it reaches the level of machine precision, \(10^{-14}\) (double precision is used in this particular case). Figure 10 illustrates the fact that, with spectral methods, very good accuracy can be reached with only a moderate number of coefficients.
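The contrast between spectral and algebraic convergence is easy to reproduce numerically. The sketch below is an illustration under stated assumptions: the analytic function exp(x) sin(3x) and the merely continuous function |x| are arbitrary choices (not the function of Figure 10), and the interpolant is built with NumPy's `chebinterpolate`, which interpolates at Chebyshev-Gauss points.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def smooth(x):
    """An analytic test function (arbitrary choice for this illustration)."""
    return np.exp(x) * np.sin(3.0 * x)

def max_interpolation_error(f, N, n_check=2001):
    """Maximum of |I_N f - f| sampled on a fine uniform grid of [-1, 1]."""
    coeffs = C.chebinterpolate(f, N)       # coefficients of the interpolant I_N f
    x = np.linspace(-1.0, 1.0, n_check)
    return np.max(np.abs(C.chebval(x, coeffs) - f(x)))

for N in (4, 8, 16, 32):
    # analytic function versus a function that is only C^0
    print(N, max_interpolation_error(smooth, N), max_interpolation_error(np.abs, N))
```

For the analytic function the error falls to round-off level with a few tens of coefficients, whereas for |x| it only decreases as a power of N.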

If the function is less regular (i.e. not \({{\mathcal C}^\infty}\)), the error only decays as a power law, thus making the use of spectral methods less appealing. This can easily be seen in the worst possible case: that of a discontinuous function. In this case, the estimates (50–52) do not even ensure convergence. Figure 11 shows a step function and its interpolant for various values of N. One can see that the maximum difference between the function and its interpolant does not go to zero even when N is increasing. This is known as the Gibbs phenomenon.

Finally, Equation (52) shows that, if m > 0, the interpolant converges uniformly to the function. Continuous functions whose interpolant does not converge uniformly to them, and whose existence has been shown by Faber [73] (see Section 2.2), can therefore only be \({{\mathcal C}^0}\) functions (and not \({{\mathcal C}^1}\)). Indeed, for the case m = 0, Equation (52) does not prove convergence (neither do Equations (50) or (51)).

2.4.5 Trigonometric functions

A detailed presentation of the theory of Fourier transforms is beyond the scope of this work. However, there is a close link between discrete Fourier transforms and their spectral interpolation, which is briefly outlined here. More detail can be found, for instance, in [57].

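The Fourier transform Pf of a function f on [0, 2π] is given by:

$$Pf(x) = {a_0} + \sum\limits_{n = 1}^\infty {{a_n}\cos (nx)} + \sum\limits_{n = 1}^\infty {{b_n}\sin (nx)}.$$(53)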

The Fourier transform is known to converge rather rapidly to the function itself, if f is periodic. However, the coefficients an and bn are given by integrals of the form \(\int\nolimits_0^{2\pi} {f(x)\cos (nx){\rm{d}}x}\), that cannot easily be computed (as was the case for the projection of a function on orthogonal polynomials in Section 2.3.1).

The solution to this problem is also very similar to the use of the Gaussian quadratures. Let us introduce N + 1 collocation points xi = 2πi/(N + 1). Then the discrete Fourier coefficients with respect to those points are:

and the interpolant \({I_N}f\) is then given by:

The approximation made by using discrete coefficients in place of real ones is of the same nature as the one made when computing coefficients of projection (30) by means of the Gaussian quadratures. Let us mention that, in the case of a discrete Fourier transform, the first and last collocation points lie on the boundaries of the interval, as for a Gauss-Lobatto quadrature. As for the polynomial interpolation, the convergence of \({I_N}f\) to f is spectral for all periodic and \({{\mathcal C}^\infty}\) functions.

2.4.6 Choice of basis

For periodic functions on [0, 2π], the discrete Fourier transform is the natural choice of basis. If the considered function also has some symmetries, one can use a subset of the trigonometric polynomials. For instance, if the function is periodic on [0, 2π] and is also odd with respect to x = π, then it can be expanded in terms of sines only. If the function is not periodic, then it is natural to expand it either in Chebyshev or Legendre polynomials. Using Legendre polynomials can be motivated by the fact that the associated measure is very simple: w(x) = 1. The multidomain technique presented in Section 2.6.5 is one particular example in which such a property is required. In practice, Legendre and Chebyshev polynomials usually give very similar results.

2.5 Spectral methods for ODEs

2.5.1 The weighted residual method

Let us consider a differential equation of the form

$$Lu(x) = S(x),\quad x \in [ - 1,1],$$(58)

where L is a linear second-order differential operator. The problem admits a unique solution once appropriate boundary conditions are prescribed at x = 1 and x = −1. Typically, one can specify i) the value of u (Dirichlet-type), ii) the value of its derivative ∂xu (Neumann-type), or iii) a linear combination of both (Robin-type).

As for the elementary operations presented in Sections 2.4.2 and 2.4.3, the action of L on u can be expressed by a matrix \(L_{ij}\). If the coefficients of u with respect to a given basis are the \({\tilde u_i}\), then the coefficients of Lu are

$$\sum\limits_{j = 0}^N {{L_{ij}}{{\tilde u}_j}} \quad (0 \leq i \leq N).$$(59)

Usually, Lij can easily be computed by combining the action of elementary operations like the second derivative, the first derivative, multiplication or division by x (see Sections 2.4.2 and 2.4.3 for some examples).

A function u is an admissible solution to the problem if and only if i) it fulfills the boundary conditions exactly (up to machine accuracy) and ii) it makes the residual R = Lu − S small. In the weighted residual method, one considers a set of N + 1 test functions {ξn}n=0…N on [−1, 1]. The smallness of R is enforced by demanding that

$$({\xi _n},R) = 0,\quad \forall n \leq N,$$(60)

where (·, ·) denotes the scalar product associated with the chosen basis.

As N increases, the obtained solution gets closer and closer to the real one. Depending on the choice of the test functions and the way the boundary conditions are enforced, one gets various solvers. Three classical examples are presented below.

2.5.2 The tau method

In this particular method, the test functions coincide with the basis used for the spectral expansion, for instance the Chebyshev polynomials. Let us denote ũi and \({{\tilde {\mathcal S}}_i}\) the coefficients of the solution u and of the source \(S\), respectively.

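Given the expression of Lu in the coefficient space (59) and the fact that the basis polynomials are orthogonal, the residual equations (60) are expressed as

$$\sum\limits_{i = 0}^N {{L_{ni}}{{\tilde u}_i}} = {{\tilde {\mathcal S}}_n},\quad \forall n \leq N,$$(61)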

the unknowns being the ũi. However, as such, this system does not admit a unique solution, due to the homogeneous solutions of L (i.e. the matrix associated with L is not invertible) and one has to impose boundary conditions. In the tau method, this is done by relaxing the last two equations (61) (i.e. for n = N − 1 and n = N) and replacing them by the boundary conditions at x = −1 and x = 1.

The tau method thus ensures that Lu and S have the same coefficients, excepting the last ones. If the functions are smooth, then their coefficients should decrease in a spectral manner and so the “forgotten” conditions are less and less stringent as N increases, ensuring that the computed solution converges rapidly to the real one.

As an illustration, let us consider the following equation:

with the following boundary conditions:

The exact solution is analytic and is given by

Figure 12 shows the exact solution and the numerical one, for two different values of N. One can note that the numerical solution converges rapidly to the exact one, the two being almost indistinguishable for N as small as N = 8. The numerical solution exactly fulfills the boundary conditions, no matter the value of N.
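The tau procedure can be sketched in a few lines. The test problem used below, u″ − 4u′ + 4u = exp(x) + C with u(−1) = u(1) = 0 and C = −4e/(1 + e²), is an assumption chosen for illustration because its solution, u = exp(x) − [sinh(1)/sinh(2)] exp(2x) + C/4, is analytic and known in closed form. The function names are hypothetical, and the source coefficients are approximated by those of its Chebyshev interpolant.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

CONST = -4.0 * np.e / (1.0 + np.e**2)      # assumed constant of the test source

def source(x):
    return np.exp(x) + CONST

def exact(x):
    return np.exp(x) - np.sinh(1.0) / np.sinh(2.0) * np.exp(2.0 * x) + CONST / 4.0

def cheb_derivative_matrix(N):
    """Matrix form of Equation (48): coefficients of f' from those of f."""
    D = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        for p in range(n + 1, N + 1, 2):   # p + n odd
            D[n, p] = 2.0 * p / (1 + (n == 0))
    return D

def solve_tau(N):
    """Chebyshev coefficients of the tau solution of the assumed test problem."""
    D = cheb_derivative_matrix(N)
    A = D @ D - 4.0 * D + 4.0 * np.eye(N + 1)   # matrix L_ij of the operator
    rhs = C.chebinterpolate(source, N)          # approximate source coefficients
    i = np.arange(N + 1)
    # Relax the last two residual equations and impose the boundary conditions
    A[N - 1, :] = (-1.0) ** i                   # u(-1) = sum_i (-1)^i u_i = 0
    rhs[N - 1] = 0.0
    A[N, :] = 1.0                               # u(+1) = sum_i u_i = 0
    rhs[N] = 0.0
    return np.linalg.solve(A, rhs)

x = np.linspace(-1.0, 1.0, 201)
for N in (4, 8, 16):
    u = solve_tau(N)
    print(N, np.max(np.abs(C.chebval(x, u) - exact(x))))
```

The boundary rows use T_i(1) = 1 and T_i(−1) = (−1)^i, so the boundary conditions hold to round-off for every N, while the interior error decreases rapidly with N.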

2.5.3 The collocation method

The collocation method is very similar to the tau method. They only differ in the choice of test functions. In the collocation method, one uses continuous functions that are zero at all but one collocation point. They are the Lagrange cardinal polynomials already seen in Section 2.2 and can be written as ξi(xj) = δij. With such test functions, the residual equations (60) are

$$Lu({x_n}) = S({x_n}),\quad \forall n \leq N.$$(65)

The value of Lu at each collocation point is easily expressed in terms of ũ by making use of (59) and one gets

$$\sum\limits_{i = 0}^N {\sum\limits_{j = 0}^N {{L_{ij}}{{\tilde u}_j}{T_i}({x_n})}} = S({x_n}),\quad \forall n \leq N.$$(66)

Let us note that, even if the collocation method imposes that Lu and S coincide at each collocation point, the unknowns of the system written in the form (66) are the coefficients ũn and not u(xn). As for the tau method, system (66) is not invertible and boundary conditions must be enforced by additional equations. In this case, the relaxed conditions are the two associated with the outermost points, i.e. n = 0 and n = N, which are replaced by appropriate boundary conditions to get an invertible system.

Figure 13 shows both the exact and numerical solutions for Equation (62).
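A collocation solver differs from a tau solver only in the way the residual equations are written. The sketch below is an illustration, assuming the test problem u″ − 4u′ + 4u = exp(x) + C with u(±1) = 0 and C = −4e/(1 + e²) (an assumed example with a closed-form solution), Chebyshev-Gauss-Lobatto points, and hypothetical function names. The unknowns remain the coefficients of u.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

CONST = -4.0 * np.e / (1.0 + np.e**2)      # assumed constant of the test source

def source(x):
    return np.exp(x) + CONST

def exact(x):
    return np.exp(x) - np.sinh(1.0) / np.sinh(2.0) * np.exp(2.0 * x) + CONST / 4.0

def cheb_derivative_matrix(N):
    """Matrix form of Equation (48): coefficients of f' from those of f."""
    D = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        for p in range(n + 1, N + 1, 2):   # p + n odd
            D[n, p] = 2.0 * p / (1 + (n == 0))
    return D

def solve_collocation(N):
    """Chebyshev coefficients of the collocation solution of the test problem."""
    x = np.cos(np.pi * np.arange(N + 1) / N)    # Gauss-Lobatto points, x_0 = 1
    T = C.chebvander(x, N)                      # T[n, i] = T_i(x_n)
    D = cheb_derivative_matrix(N)
    L = D @ D - 4.0 * D + 4.0 * np.eye(N + 1)
    A = T @ L                                   # row n gives (Lu)(x_n)
    rhs = source(x)
    # The two outermost points carry the boundary conditions instead
    A[0, :], rhs[0] = T[0, :], 0.0              # u(x_0 = +1) = 0
    A[N, :], rhs[N] = T[N, :], 0.0              # u(x_N = -1) = 0
    return np.linalg.solve(A, rhs)

x = np.linspace(-1.0, 1.0, 201)
for N in (4, 8, 16):
    u = solve_collocation(N)
    print(N, np.max(np.abs(C.chebval(x, u) - exact(x))))
```

Here the source is only needed at the collocation points, so no projection or interpolation of S onto the basis is required.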

2.5.4 Galerkin method

The basic idea of the Galerkin method is to seek the solution u as a sum of polynomials Gi that individually verify the boundary conditions. Doing so, u automatically fulfills those conditions and they do not have to be imposed by additional equations. Such polynomials constitute a Galerkin basis of the problem. For practical reasons, it is better to choose a Galerkin basis that can easily be expressed in terms of the original orthogonal polynomials.

For instance, with boundary conditions (63), one can choose:

More generally, the Galerkin basis relates to the usual ones by means of a transformation matrix

Let us mention that the matrix M is not square. Indeed, to maintain the same degree of approximation, one can consider only N − 1 Galerkin polynomials, due to the two additional conditions they have to fulfill (see, for instance, Equations (67–68)). One can also note that, in general, the Gi are not orthogonal polynomials.

The solution u is sought in terms of the coefficients \(\tilde u_i^G\) on the Galerkin basis:

By making use of Equations (59) and (69) one can express Lu in terms of \(\tilde u_i^G\):

The test functions used in the Galerkin method are the Gi themselves, so that the residual system reads:

where the left-hand side is computed by means of Equation (71) and by expressing the Gi in terms of the Ti with Equation (69). Concerning the right-hand side, the source itself is not expanded in terms of the Galerkin basis, given that it does not fulfill the boundary conditions. Putting all the pieces together, the Galerkin system reads:

This is a system of N − 1 equations for the N − 1 unknowns \(\tilde u_i^G\) and it can be directly solved, because it is well posed. Once the \(\tilde u_i^G\) are known, one can obtain the solution in terms of the usual basis by making, once again, use of the transformation matrix:

The solution obtained by the application of this method to Equation (62) is shown in Figure 14.
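The Galerkin construction can be sketched as follows. Everything specific in this example is an assumption made for illustration: the test problem u″ − 4u′ + 4u = exp(x) + C with u(±1) = 0 and C = −4e/(1 + e²), the Galerkin basis G_i = T_{i+2} − T_0 (i even) and G_i = T_{i+2} − T_1 (i odd), which vanishes at both boundaries, and the function names. The scalar products are evaluated with the Chebyshev orthogonality relations, (T_0, T_0) = π and (T_i, T_i) = π/2 for i > 0.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

CONST = -4.0 * np.e / (1.0 + np.e**2)      # assumed constant of the test source

def source(x):
    return np.exp(x) + CONST

def exact(x):
    return np.exp(x) - np.sinh(1.0) / np.sinh(2.0) * np.exp(2.0 * x) + CONST / 4.0

def cheb_derivative_matrix(N):
    """Matrix form of Equation (48): coefficients of f' from those of f."""
    D = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        for p in range(n + 1, N + 1, 2):   # p + n odd
            D[n, p] = 2.0 * p / (1 + (n == 0))
    return D

def solve_galerkin(N):
    """Chebyshev coefficients of the Galerkin solution of the test problem."""
    # Transformation matrix (not square): column i holds the Chebyshev
    # coefficients of the assumed Galerkin polynomial G_i, i = 0 .. N - 2
    M = np.zeros((N + 1, N - 1))
    for i in range(N - 1):
        M[i + 2, i] = 1.0
        M[i % 2, i] = -1.0
    D = cheb_derivative_matrix(N)
    L = D @ D - 4.0 * D + 4.0 * np.eye(N + 1)
    w = np.full(N + 1, np.pi / 2.0)             # norms (T_i, T_i)
    w[0] = np.pi
    s = C.chebinterpolate(source, N)            # approximate source coefficients
    A = M.T @ (w[:, None] * (L @ M))            # entries (G_n, L G_i)
    rhs = M.T @ (w * s)                         # entries (G_n, S)
    u_galerkin = np.linalg.solve(A, rhs)        # N - 1 unknowns
    return M @ u_galerkin                       # back to the Chebyshev basis

x = np.linspace(-1.0, 1.0, 201)
for N in (4, 8, 16):
    u = solve_galerkin(N)
    print(N, np.max(np.abs(C.chebval(x, u) - exact(x))))
```

Since every G_i vanishes at x = ±1, no row of the system is sacrificed to the boundary conditions, and the linear system is of size N − 1 only.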

2.5.5 Optimal methods

A spectral method is said to be optimal if it does not introduce an additional error to the error that would be introduced by interpolating the exact solution of a given equation.

Let us call \(u_{\rm exact}\) such an exact solution, unknown in general. Its interpolant is \(I_N u_{\rm exact}\) and the numerical solution of the equation is \(u_{\rm num}\). The numerical method is then optimal if and only if \(\Vert {I_N}{u_{{\rm{exact}}}} - {u_{{\rm{exact}}}}{\Vert _\infty}\) and \(\Vert {u_{{\rm{num}}}} - {u_{{\rm{exact}}}}{\Vert _\infty}\) behave in the same manner when N → ∞.

In general, optimality is difficult to check because both \(u_{\rm exact}\) and its interpolant are unknown. However, for the test problem proposed in Section 2.5.2 this can be done. Figure 15 shows the maximum relative difference between the exact solution (64) and its interpolant and the various numerical solutions. All the curves behave in the same manner as N increases, indicating that the three methods previously presented are optimal (at least for this particular case).

Figure 15: The difference between the exact solution (64) of Equation (62) and its interpolant (black curve) and between the exact and numerical solutions for i) the tau method (green curve and circle symbols), ii) the collocation method (blue curve and square symbols), and iii) the Galerkin method (red curve and triangle symbols).

2.6 Multidomain techniques for ODEs

2.6.1 Motivations and setting

As seen in Section 2.4.4, spectral methods are very efficient when dealing with \({{\mathcal C}^\infty}\) functions. However, they lose some of their appeal when dealing with less regular functions, the convergence to the exact functions being substantially slower. Nevertheless, the physicist has sometimes to deal with such functions. This is the case for the density jump at the surface of strange stars or the formation of shocks, to mention only two examples. In order to maintain spectral convergence, one then needs to introduce several computational domains such that the various discontinuities of the functions lie at the interface between the domains. Doing so in each domain means that one only deals with \({{\mathcal C}^\infty}\) functions.

Multidomain techniques can also be valuable when dealing with a physical space either too complicated or too large to be described by a single domain. Related to that, one can also use several domains to increase the resolution in some parts of the space where more precision is required. This can easily be done by using a different number of basis functions in different domains. One then talks about fixed-mesh refinement.

Efficient parallel processing may also require that several domains be used. Indeed, one could set a solver, dealing with each domain on a given processor, and interprocessor communication would then only be used for matching the solution across the various domains. The algorithm of Section 2.6.4 is well adapted to such purposes.

In the following, four different multidomain methods are presented to solve an equation of the type Lu = S on [−1, 1]. L is a second-order linear operator and S is a given source function. Appropriate boundary conditions are given at the boundaries x = −1 and x = 1.

For simplicity the physical space is split into two domains:

-

first domain: x ≤ 0 described by x1 = 2x + 1, x1 ∈ [−1, 1],

-

second domain: x ≥ 0 described by x2 = 2x − 1, x2 ∈ [−1, 1].

If x ≤ 0, a function u is described by its interpolant in terms of \({x_1}:{I_N}u(x) = \sum\limits_{i = 0}^N {\tilde u_i^1{T_i}({x_1}(x))}\). The same is true for x ≥ 0 with respect to the variable x2. Such a set-up is obviously appropriate to deal with problems where discontinuities occur at x = 0, that is x1 = 1 and x2 = −1.

2.6.2 The multidomain tau method

As for the standard tau method (see Section 2.5.2) and in each domain, the test functions are the basis polynomials and one writes the associated residual equations. For instance, in the domain x ≤ 0 one gets:

$$\sum\limits_{i = 0}^N {{L_{ni}}\tilde u_i^1} = \tilde {\mathcal S}_n^1,\quad \forall n \leq N,$$(75)

\({{\tilde {\mathcal S}}^1}\) being the coefficients of the source and Lij the matrix representation of the operator. As for the one-domain case, one relaxes the last two equations, keeping only N − 1 equations. The same is done in the second domain.

Two supplementary equations are enforced to ensure that the boundary conditions are fulfilled. Finally, the operator L being of second order, one needs to ensure that the solution and its first derivative are continuous at the interface x = 0. This translates to a set of two additional equations involving both domains.

So, one considers

-

N − 1 residual equations in the first domain,

-

N − 1 residual equations in the second domain,

-

2 boundary conditions,

-

2 matching conditions,

for a total of 2N + 2 equations. The unknowns are the coefficients of u in both domains (i.e. the \(\tilde u_i^1\) and the \(\tilde u_i^2\)), that is 2N + 2 unknowns. The system is well posed and admits a unique solution.
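The counting above can be checked on the two-domain test problem used for Figure 16, u″ + 4u = S with S(x < 0) = 1, S(x > 0) = 0 and u(±1) = 0. The sketch below is an illustration with hypothetical function names; it uses the mappings x1 = 2x + 1 and x2 = 2x − 1, so that d/dx = 2 d/dx_d in each domain, and the known boundary values T_i(±1) = (±1)^i and T_i′(±1) = (±1)^{i+1} i². The closed-form solution used for comparison was derived by hand for this sketch.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def cheb_derivative_matrix(N):
    """Matrix form of Equation (48): coefficients of f' from those of f."""
    D = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        for p in range(n + 1, N + 1, 2):   # p + n odd
            D[n, p] = 2.0 * p / (1 + (n == 0))
    return D

def solve_two_domain_tau(N):
    """Coefficients of u in both domains for u'' + 4u = S, u(-1) = u(1) = 0."""
    D = cheb_derivative_matrix(N)
    L = 4.0 * D @ D + 4.0 * np.eye(N + 1)       # u'' + 4u in the local variable
    i = np.arange(N + 1)
    plus, minus = np.ones(N + 1), (-1.0) ** i   # T_i(+1), T_i(-1)
    dplus, dminus = 1.0 * i**2, -minus * i**2   # T_i'(+1), T_i'(-1)
    A = np.zeros((2 * N + 2, 2 * N + 2))
    rhs = np.zeros(2 * N + 2)
    A[:N - 1, :N + 1] = L[:N - 1]               # N - 1 residual equations, domain 1
    rhs[0] = 1.0                                # S = 1 there: only the T_0 coefficient
    A[N - 1:2 * N - 2, N + 1:] = L[:N - 1]      # N - 1 residual equations, domain 2
    A[2 * N - 2, :N + 1] = minus                # boundary condition u(x = -1) = 0
    A[2 * N - 1, N + 1:] = plus                 # boundary condition u(x = +1) = 0
    A[2 * N, :N + 1] = plus                     # continuity of u at x = 0
    A[2 * N, N + 1:] = -minus
    A[2 * N + 1, :N + 1] = dplus                # continuity of u' at x = 0
    A[2 * N + 1, N + 1:] = -dminus
    coeffs = np.linalg.solve(A, rhs)
    return coeffs[:N + 1], coeffs[N + 1:]

def evaluate(x, u1, u2):
    x = np.asarray(x, dtype=float)
    return np.where(x <= 0.0, C.chebval(2.0 * x + 1.0, u1), C.chebval(2.0 * x - 1.0, u2))

def exact(x):
    """Closed-form solution of the test problem (derived for this sketch)."""
    x = np.asarray(x, dtype=float)
    a = -(1.0 + np.cos(2.0)) / (8.0 * np.cos(2.0))
    b = -(a + 0.25) * np.cos(2.0) / np.sin(2.0)
    left = 0.25 + a * np.cos(2.0 * x) + b * np.sin(2.0 * x)
    right = (a + 0.25) * np.cos(2.0 * x) + b * np.sin(2.0 * x)
    return np.where(x <= 0.0, left, right)

x = np.linspace(-1.0, 1.0, 401)
for N in (4, 8, 16):
    u1, u2 = solve_two_domain_tau(N)
    print(N, np.max(np.abs(evaluate(x, u1, u2) - exact(x))))
```

Although the source is discontinuous at x = 0, the solution is smooth in each domain, which is why the error still decreases spectrally with N.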

2.6.3 Multidomain collocation method

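As for the standard collocation method (see Section 2.5.3) and in each domain, the test functions are the Lagrange cardinal polynomials. For instance, in the domain x ≤ 0 one gets:

$$\sum\limits_{i = 0}^N {\sum\limits_{j = 0}^N {{L_{ij}}\tilde u_j^1{T_i}({x_{1n}})}} = S({x_{1n}}),\quad \forall n \leq N,$$(76)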

Lij being the matrix representation of the operator and x1n the nth collocation point in the first domain. As for the one-domain case, one relaxes the two equations corresponding to the boundaries of the domain, keeping only N − 1 equations. The same is done in the second domain.

Two supplementary equations are enforced to ensure that the boundary conditions are fulfilled. Finally, the operator L being second order, one needs to ensure that the solution and its first derivative are continuous at the interface x = 0. This translates to a set of two additional equations involving the coefficients in both domains.

So, one considers

-

N − 1 residual equations in the first domain,

-

N − 1 residual equations in the second domain,

-

2 boundary conditions,

-

2 matching conditions,

for a total of 2N + 2 equations. The unknowns are the coefficients of u in both domains (i.e. the \(\tilde u_i^1\) and the \(\tilde u_i^2\)), that is 2N + 2 unknowns. The system is well posed and admits a unique solution.

2.6.4 Method based on homogeneous solutions

The method described here proceeds in two steps. First, particular solutions are computed in each domain. Then, appropriate linear combinations with the homogeneous solutions of the operator L are performed to ensure continuity and impose boundary conditions.

In order to compute particular solutions, one can rely on any of the methods described in Section 2.5. The boundary conditions at the boundary of each domain can be chosen (almost) arbitrarily. For instance, one can use in each domain a collocation method to solve Lu = S, demanding that the particular solution upart is zero at both ends of each interval.

Then, in order to have a solution over the whole space, one needs to add homogeneous solutions to the particular ones. In general, the operator L is second order and admits two independent homogeneous solutions g and h in each domain. Let us note that, in some cases, additional regularity conditions can reduce the number of available homogeneous solutions. The homogeneous solutions can either be computed analytically if the operator L is simple enough or numerically, but one must then have a method for solving Lu = 0.

In each domain, the physical solution is a combination of the particular solution and homogeneous ones of the type:

$$u = {u_{{\rm{part}}}} + \alpha g + \beta h,$$(77)

where α and β are constants that must be determined. In the two domains case, we are left with four unknowns. The system of equations they must satisfy is composed of i) two equations for the boundary conditions ii) two equations for the matching of u and its first derivative across the boundary between the two domains. The obtained system is called the matching system and generally admits a unique solution.
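The two-step procedure can be sketched on the test problem used for Figure 16, u″ + 4u = S with S(x < 0) = 1, S(x > 0) = 0 and u(±1) = 0, for which the homogeneous solutions g = cos(2x) and h = sin(2x) are known analytically. The example is an illustration with hypothetical function names: the particular solutions are computed here with a tau solver and vanish at both ends of each domain, and the four constants then follow from a 4 × 4 matching system.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def cheb_derivative_matrix(N):
    """Matrix form of Equation (48): coefficients of f' from those of f."""
    D = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        for p in range(n + 1, N + 1, 2):   # p + n odd
            D[n, p] = 2.0 * p / (1 + (n == 0))
    return D

def particular_solution(N, source_coeffs):
    """Tau solve of u'' + 4u = S in one domain (d/dx = 2 d/dx_d), zero at both ends."""
    D = cheb_derivative_matrix(N)
    A = 4.0 * D @ D + 4.0 * np.eye(N + 1)
    rhs = np.array(source_coeffs, dtype=float)
    i = np.arange(N + 1)
    A[N - 1, :], rhs[N - 1] = (-1.0) ** i, 0.0
    A[N, :], rhs[N] = 1.0, 0.0
    return np.linalg.solve(A, rhs)

def solve_by_matching(N):
    s1 = np.zeros(N + 1)
    s1[0] = 1.0                                   # S = 1 in the first domain
    p1 = particular_solution(N, s1)
    p2 = particular_solution(N, np.zeros(N + 1))  # S = 0 in the second domain
    i = np.arange(N + 1)
    dp1 = 2.0 * np.sum(i**2 * p1)                     # d(u_part)/dx at x = 0, domain 1
    dp2 = 2.0 * np.sum(-((-1.0) ** i) * i**2 * p2)    # same, seen from domain 2
    c, s = np.cos(2.0), np.sin(2.0)
    # Unknowns: (alpha_1, beta_1, alpha_2, beta_2)
    matching = np.array([
        [c, -s, 0.0, 0.0],        # u(-1) = 0
        [0.0, 0.0, c, s],         # u(+1) = 0
        [1.0, 0.0, -1.0, 0.0],    # continuity of u at x = 0
        [0.0, 2.0, 0.0, -2.0],    # continuity of u' at x = 0
    ])
    rhs = np.array([0.0, 0.0, 0.0, dp2 - dp1])
    a1, b1, a2, b2 = np.linalg.solve(matching, rhs)
    return (p1, a1, b1), (p2, a2, b2)

def evaluate(x, dom1, dom2):
    x = np.asarray(x, dtype=float)
    (p1, a1, b1), (p2, a2, b2) = dom1, dom2
    left = C.chebval(2.0 * x + 1.0, p1) + a1 * np.cos(2.0 * x) + b1 * np.sin(2.0 * x)
    right = C.chebval(2.0 * x - 1.0, p2) + a2 * np.cos(2.0 * x) + b2 * np.sin(2.0 * x)
    return np.where(x <= 0.0, left, right)

dom1, dom2 = solve_by_matching(16)
print(evaluate(np.array([-0.5, 0.0, 0.5]), dom1, dom2))
```

Each domain requires only an (N + 1) × (N + 1) solve, independent of the others, and the coupling between the domains is confined to the small matching system.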

2.6.5 Variational method

Contrary to the previously presented methods, the variational one is only applicable with Legendre polynomials. Indeed, the method requires that the measure be w(x) = 1. It is also useful to extract the second-order term of the operator L and to rewrite it as Lu = u″ + Hu, H being first order only.

In each domain, one writes the residual equation explicitly:

The term involving the second derivative of u is then integrated by parts:

The test functions are the same as the ones used for the collocation method, i.e. functions being zero at all but one collocation point, in both domains (d = 1, 2): ξi(xdj) = δij. By making use of the Gauss quadratures, the various parts of Equation (79) can be expressed as (d = 1, 2 indicates the domain):

where Dij (or Hij, respectively) represents the action of the derivative (or of H, respectively) in the configuration space

For points strictly inside each domain, the integrated term [ξu′] of Equation (79) vanishes and one gets equations of the form:

This is a set of N − 1 equations for each domain (d = 1, 2). In the above form, the unknowns are the u(xdi), i.e. the solution is sought in the configuration space.

As usual, two additional equations are provided by appropriate boundary conditions at both ends of the global domain. One also gets an additional condition by matching the solution across the boundary between the two domains.

The last equation of the system is the matching of the first derivative of the solution. However, instead of writing it “explicitly”, this is done by making use of the integrated term in Equation (79) and this is actually the crucial step of the whole method. Applying Equation (79) to the last point x1N of the first domain, one gets:

The same can be done with the first point of the second domain to get u′(x2 = −1), and the last equation of the system is obtained by demanding that u′(x1 = 1) = u′(x2 = −1); it relates the values of u in both domains.

Before finishing with the variational method, it may be worthwhile to explain why Legendre polynomials are used. Suppose one wants to work with Chebyshev polynomials instead. The measure is then \(w(x) = {1 \over {\sqrt {1 - {x^2}}}}\). When one integrates the term containing u″ by parts, one gets

Because the measure is divergent at the boundaries, it is difficult, if not impossible, to isolate the term in u′. On the other hand, this is precisely the term that is needed to impose the appropriate matching of the solution.

2.6.6 Merits of the various methods

From a numerical point of view, the method based on an explicit matching using the homogeneous solutions is somewhat different from the two others. Indeed, one must solve several systems in a row and each one is of the same size as the number of points in one domain. This splitting of the different domains can also be useful for designing parallel codes. On the contrary, for both the variational and the tau method one must solve only one system, but its size is the same as the number of points in a whole space, which can be quite large for many domains settings. However, those two methods do not require one to compute the homogeneous solutions, computation that could be tricky depending on the operators involved and the number of dimensions.

The variational method may seem more difficult to implement and is only applicable with Legendre polynomials. However, on mathematical grounds, it is the only method that is demonstrated to be optimal. Moreover, some examples have been found in which the other methods are not optimal. It remains true that the variational method is very dependent on both the shape of the domains and the type of equation that needs to be solved.

The choice of one method or another thus depends on the particular situation. As for the mono-domain space, for simple test problems the results are very similar. Figure 16 shows the maximum error between the analytic solution and the numeric one for the four different methods. All errors decay exponentially and reach machine accuracy within roughly the same number of points.

Figure 16: Difference between the exact and numerical solutions of the following test problem: \({{{{\rm{d}}^2}u} \over {{\rm{d}}{x^2}}} + 4u = S\), with S(x < 0) = 1 and S(x > 0) = 0. The boundary conditions are u(x = −1) = 0 and u(x = 1) = 0. The black curve and circles denote results from the multidomain tau method, the red curve and squares from the method based on the homogeneous solutions, the blue curve and diamonds from the variational one, and the green curve and triangles from the collocation method.

3 Multidimensional Cases

In principle, the generalization to more than one dimension is rather straightforward if one uses the tensor product. Let us first take an example, with the spectral representation of a scalar function f(x, y) defined on the square (x, y) ∈ [−1, 1] × [−1, 1] in terms of Chebyshev polynomials. One simply writes

$$f(x,y) = \sum\limits_{i = 0}^M {\sum\limits_{j = 0}^N {{a_{ij}}{T_i}(x){T_j}(y)}},$$(88)

with Ti being the Chebyshev polynomial of degree i. The partial differential operators can also be generalized as being linear operators acting on the space ℙM⊗ℙN. Simple linear partial differential equations (PDE) can be solved by one of the methods presented in Section 2.5 (Galerkin, tau or collocation), on this MN-dimensional space. The development (88) can of course be generalized to any dimension. Some special PDE and spectral basis examples, where the differential equation decouples for some of the coordinates, will be given in Section 3.2.
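A tensor-product expansion of this kind can be sketched with NumPy. In the example below, the function exp(x) sin(y), the use of Chebyshev-Gauss points in each direction, and the helper name `cheb_coeffs_2d` are assumptions made for illustration. The coefficients form an (M + 1) × (N + 1) array, and a partial derivative acts along a single index of that array.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def f(x, y):
    """A smooth test function (arbitrary choice for this illustration)."""
    return np.exp(x) * np.sin(y)

def cheb_coeffs_2d(func, M, N):
    """Coefficients a[i, j] of the interpolant sum_ij a_ij T_i(x) T_j(y)."""
    x = np.cos((2 * np.arange(M + 1) + 1) * np.pi / (2 * M + 2))   # Gauss points in x
    y = np.cos((2 * np.arange(N + 1) + 1) * np.pi / (2 * N + 2))   # Gauss points in y
    F = func(x[:, None], y[None, :])          # values on the tensor-product grid
    Vx = C.chebvander(x, M)                   # Vx[k, i] = T_i(x_k)
    Vy = C.chebvander(y, N)                   # Vy[l, j] = T_j(y_l)
    # F = Vx a Vy^T, solved one direction at a time
    return np.linalg.solve(Vx, np.linalg.solve(Vy, F.T).T)

a = cheb_coeffs_2d(f, 12, 14)
da_dx = C.chebder(a, axis=0)                  # coefficients of the x-derivative

xs, ys = np.meshgrid(np.linspace(-1, 1, 21), np.linspace(-1, 1, 23), indexing="ij")
print(np.max(np.abs(C.chebval2d(xs, ys, a) - f(xs, ys))))
print(np.max(np.abs(C.chebval2d(xs, ys, da_dx) - f(xs, ys))))   # d/dx of exp(x) sin(y) is itself
```

The one-dimensional operators are simply applied along the relevant axis, which is what makes the tensor-product generalization straightforward in practice.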

3.1 Spatial coordinate systems

Most of the interesting problems in numerical relativity involve asymmetries that require the use of a full set of three-dimensional coordinates. We briefly review several coordinate sets (all orthogonal) that have been used in numerical relativity with spectral methods. They are described through the line element \({\rm d}s^2\) of the flat metric in the coordinates we discuss.

-

Cartesian (rectangular) coordinates are of course the simplest and most straightforward to implement; the line element reads \({\rm d}s^2 = {\rm d}x^2 + {\rm d}y^2 + {\rm d}z^2\). These coordinates are regular in all space, with vanishing connection, which makes them easy to use, since all differential operators have simple expressions and the associated triad is also perfectly regular. They are particularly well adapted to cube-like domains, see for instance [167, 171] and [81] in the case of toroidal topology.

-

Circular cylindrical coordinates have a line element \({\rm d}s^2 = {\rm d}\rho^2 + \rho^2\,{\rm d}\phi^2 + {\rm d}z^2\) and exhibit a coordinate singularity on the z-axis (ρ = 0). The associated triad being also singular for ρ = 0, regular vector or tensor fields have components that are multivalued (depending on ϕ) at any point of the z-axis. As for the spherical coordinates, this can be handled quite easily with spectral methods. This coordinate system can be useful for axisymmetric or rotating systems, see [10].

-

Spherical (polar) coordinates will be discussed in more detail in Section 3.2. Their line element reads \({\rm d}s^2 = {\rm d}r^2 + r^2\,{\rm d}\theta^2 + r^2\sin^2\theta\,{\rm d}\varphi^2\), showing a coordinate singularity at the origin (r = 0) and on the axis for which θ = 0, π. They are very useful in numerical relativity for the numerous sphere-like objects under study (stars, black hole horizons) and have mostly been implemented for shell-like domains [40, 109, 167, 219] and for spheres including the origin [44, 109].

-

Prolate spheroidal coordinates consist of a system of confocal ellipses and hyperbolae, describing an (x, z)-plane, and an angle φ giving the position as a rotation with respect to the focal axis [131]. The line element is \({\rm d}s^2 = a^2(\sinh^2\mu + \sin^2\nu)({\rm d}\mu^2 + {\rm d}\nu^2) + a^2\sinh^2\mu\,\sin^2\nu\,{\rm d}\varphi^2\). The foci are situated at z = ±a and represent coordinate singularities for μ = 0 and ν = 0, π. These coordinates have been used in [8] with black-hole-puncture data at the foci.

-

Bispherical coordinates are obtained by the rotation of bipolar coordinates around the focal axis, with a line element \({\rm d}s^2 = a^2(\cosh\eta - \cos\chi)^{-2}({\rm d}\eta^2 + {\rm d}\chi^2 + \sin^2\chi\,{\rm d}\varphi^2)\). As with prolate spheroidal coordinates, the foci situated at z = ±a (η → ±∞, χ = 0, π) and, more generally, the focal axis exhibit coordinate singularities. Still, the surfaces of constant η are spheres situated in the z > 0 (z < 0) region for η > 0 (η < 0), respectively. Thus, these coordinates are very well adapted for the study of binary systems and in particular for excision treatment of black hole binaries [6].

3.1.1 Mappings

Choosing a smart set of coordinates is not the end of the story. As for finite elements, one would like to be able to cover some complicated geometries, like distorted stars, tori, etc… or even to be able to cover the whole space. The reason for this last point is that, in numerical relativity, one often deals with isolated systems for which boundary conditions are only known at spatial infinity. A quite simple choice is to perform a mapping from numerical coordinates to physical coordinates, generalizing the change of coordinates to [−1, 1], when using families of orthonormal polynomials or to [0, 2π] for Fourier series.

An example of how to map the [−1, 1] × [−1, 1] domain can be taken from Canuto et al. [56], and is illustrated in Figure 17: once the mappings from the four sides (boundaries) of \({\hat \Omega}\) to the four sides of Ω are known, one can construct a two-dimensional regular mapping Π, which preserves orthogonality and simple operators (see Chapter 3.5 of [56]).

The case where the boundaries of the considered domain are not known at the beginning of the computation can also be treated in a spectral way. In the case where this surface corresponds to the surface of a neutron star, two approaches have been used. First, in Bonazzola et al. [38], the star (and therefore the domain) is supposed to be “star-like”, meaning that there exists a point from which it is possible to reach any point on the surface by straight lines that are all contained inside the star. To such a point is associated the origin of a spherical system of coordinates, so that it is a spherical domain, which is regularly deformed to coincide with the shape of the star. This is done within an iterative scheme, at every step, once the position of the surface has been determined. Then, another approach has been developed by Ansorg et al. [10] using cylindrical coordinates. It is a square in the plane (ρ, z), which is mapped onto the domain describing the interior of the star. This mapping involves an unknown function, which is itself decomposed in terms of a basis of Chebyshev polynomials, so that its coefficients are part of the global vector of unknowns (as the density and gravitational field coefficients).

In the case of black-hole-binary systems, Scheel et al. [188] have developed horizon-tracking coordinates using results from control theory. They define a control parameter as the relative drift of the black hole position, and they design a feedback control system with the requirement that the adjustment they make on the coordinates be sufficiently smooth that they do not spoil the overall Einstein solver. In addition, they use a dual-coordinate approach, so that they can construct a comoving coordinate map, which tracks both orbital and radial motion of the black holes and allows them to successfully evolve the binary. The evolutions simulated in [188] are found to be unstable, when using a single rotating-coordinate frame. We note here as well the work of Bonazzola et al. [42], where another option is explored: the stroboscopic technique of matching between an inner rotating domain and an outer inertial one.

3.1.2 Spatial compactification

As stated above, the mappings can also be used to include spatial infinity into the computational domain. Such a compactification technique is not tied to spectral methods and has already been used with finite-difference methods in numerical relativity by, e.g., Pretorius [176]. However, due to the relatively low number of degrees of freedom necessary to describe a spatial domain within spectral methods, it is easier within this framework to use some resources to describe spatial infinity and its neighborhood. Many choices are possible to do so, either directly choosing a family of well-behaved functions on an unbounded interval, for example the Hermite functions (see, e.g., Section 17.4 in Boyd [48]), or making use of standard polynomial families, but with an adapted mapping. A first example within numerical relativity was given by Bonazzola et al. [41] with the simple inverse mapping in spherical coordinates.

This inverse mapping for spherical “shells” has also been used by Kidder and Finn [125], Pfeiffer et al. [171, 167], and Ansorg et al. in cylindrical [10] and spheroidal [8] coordinates. Many more elaborate techniques are discussed in Chapter 17 of Boyd [48], but to our knowledge, none have been used in numerical relativity yet. Finally, it is important to point out that, in general, the simple compactification of spatial infinity is not well adapted to solving hyperbolic PDEs and the above mentioned examples were solving only for elliptic equations (initial data, see Section 5). For instance, the simple wave equation (127) is not invariant under the mapping (89), as has been shown, e.g., by Sommerfeld (see [201], Section 23.E). Intuitively, it is easy to see that when compactifying only spatial coordinates for a wave-like equation, the distance between two neighboring grid points becomes larger than the wavelength, which makes the wave poorly resolved after a finite time of propagation on the numerical grid. For hyperbolic equations, it is therefore usually preferable to impose physically and mathematically well-motivated boundary conditions at a finite radius (see, e.g., Friedrich and Nagy [83], Rinne [179] or Buchman and Sarbach [53]).

3.1.3 Patching in more than one dimension

The multidomain (or multipatch) technique has been presented in Section 2.6 for one spatial dimension. In Bonazzola et al. [40] and Grandclément et al. [109], the three-dimensional spatial domains consist of spheres (or star-shaped regions) and spherical shells, across which the solution can be matched as in one-dimensional problems (only through the radial dependence). In general, when performing a matching in two or three spatial dimensions, the reconstruction of the global solution across all domains might need some more care to clearly write down the matching conditions (see, e.g., [167], where overlapping as well as nonoverlapping domains are used at the same time). For example in two dimensions, one of the problems that might arise is the counting of matching conditions for corners of rectangular domains, when such a corner is shared among more than three domains. In the case of a PDE where matching conditions must be imposed on the value of the solution, as well as on its normal derivative (Poisson or wave equation), it is sufficient to impose continuity of either normal derivative at the corner, the jump in the other normal derivative being spectrally small (see Chapter 13 of Canuto et al. [56]).

A now typical problem in numerical relativity is the study of binary systems (see also Sections 5.5 and 6.3) for which two sets of spherical shells have been used by Gourgoulhon et al. [100], as displayed in Figure 18. Different approaches have been proposed by Kidder et al. [128], and used by Pfeiffer [167] and Scheel et al. [188] where spherical shells and rectangular boxes are combined together to form a grid adapted to black hole binary study. Even more sophisticated setups to model fluid flows in complicated tubes can be found in [144].

Figure 18: Two sets of spherical domains describing a neutron star or black hole binary system. Each set is surrounded by a compactified domain of the type (89), which is not displayed.

Multiple domains can thus be used to adapt the numerical grid to the interesting part (manifold) of the coordinate space; they can be seen as a technique close to the spectral element method [166]. Moreover, it is also a way to increase spatial resolution in some parts of the computational domain where one expects strong gradients to occur: adding a small domain with many degrees of freedom is the analog of fixed-mesh refinement for finite-differences.

3.2 Spherical coordinates and harmonics

Spherical coordinates (see Figure 19) are well adapted for the study of many problems in numerical relativity. Those include the numerical modeling of isolated astrophysical objects, like a neutron star or a black hole. Indeed, stars’ surfaces have sphere-like shapes and black hole horizons have this topology as well, which is best described in spherical coordinates (possibly through a mapping, see Section 3.1.1). As these are isolated systems in general relativity, the exact boundary conditions are imposed at infinity, requiring a compactification of space, which is here achieved with the compactification of the radial coordinate r only.

When the numerical grid does not extend to infinity, e.g., when solving for a hyperbolic PDE, the boundary defined by r = const is a smooth surface, on which boundary conditions are much easier to impose. Finally, spherical harmonics, which are strongly linked with these coordinates, can greatly simplify the solution of Poisson-like or wave-like equations. On the other hand, there are some technical problems linked with this set of coordinates, as detailed hereafter, but spectral methods can handle them in a very efficient way.

3.2.1 Coordinate singularities

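The transformation from spherical (r, θ, φ) to Cartesian coordinates (x, y, z) is obtained by

\[x = r\sin \theta \cos \varphi, \tag{90}\]

\[y = r\sin \theta \sin \varphi, \tag{91}\]

\[z = r\cos \theta. \tag{92}\]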

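One immediately sees that the origin r = 0 ⇔ x = y = z = 0 is singular in spherical coordinates because neither θ nor φ can be uniquely defined. The same happens for the z-axis, where θ = 0 or π, and φ cannot be defined. Among the consequences is the singularity of some usual differential operators, like, for instance, the Laplace operator

\[\Delta = {{\partial ^2} \over {\partial r^2}} + {2 \over r}{\partial \over {\partial r}} + {1 \over {r^2}}\left({{{\partial ^2} \over {\partial \theta ^2}} + {1 \over {\tan \theta}}{\partial \over {\partial \theta}} + {1 \over {\sin ^2 \theta}}{{\partial ^2} \over {\partial \varphi ^2}}} \right). \tag{93}\]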

Here, the divisions by r at the center, or by sin θ on the z-axis look singular. On the other hand, the Laplace operator, expressed in Cartesian coordinates, is a perfectly regular one and, if it is applied to a regular function, should give a well-defined result. The same should be true if one uses spherical coordinates: the operator (93) applied to a regular function should yield a regular result. This means that a regular function of spherical coordinates must have a particular behavior at the origin and on the axis, so that the divisions by r or sin θ appearing in regular operators are always well defined. If one considers an analytic function in (regular) Cartesian coordinates f(x, y, z), it can be expanded as a series of powers of x, y and z, near the origin

\[f(x,y,z) = \sum\limits_{n,p,q} {a_{npq}} \,x^n y^p z^q. \tag{94}\]

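Placing the coordinate definitions (90)–(92) into this expression gives

\[f(r,\theta, \varphi) = \sum\limits_{n,p,q} {a_{npq}} \,r^{n + p + q}\cos ^q \theta \,\sin ^{n + p}\theta \,\cos ^n \varphi \,\sin ^p \varphi, \tag{95}\]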

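and rearranging the terms in φ:

\[f(r,\theta, \varphi) = \sum\limits_{m,p,q} {b_{mpq}} \,r^{|m| + 2p + q}\sin ^{|m| + 2p}\theta \,\cos ^q \theta \,e^{im\varphi}. \tag{96}\]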

With some transformations of trigonometric functions in θ, one can express the angular part in terms of spherical harmonics \(Y_\ell ^m(\theta, \varphi)\), see Section 3.2.2, with ℓ = ∣m∣ + 2p + q and obtain the two following regularity conditions, for a given pair (ℓ, m):

-

near θ = 0, a regular scalar field is equivalent to \(f(\theta) \sim \sin ^{|m|}\theta\),

-

near r = 0, a regular scalar field is equivalent to \(f(r) \sim r^\ell\).

In addition, the r-dependence translates into a Taylor series near the origin, with the same parity as ℓ. More details in the case of polar (2D) coordinates are given in Chapter 18 of Boyd [48].
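As a worked illustration of these two conditions (the test functions are chosen here for convenience and are not taken from the references), consider the regular Cartesian monomial f = xy. In spherical coordinates it reads

\[f = xy = r^2 \sin ^2 \theta \,\cos \varphi \,\sin \varphi = {{r^2 \sin ^2 \theta} \over {4i}}\left({e^{2i\varphi} - e^{- 2i\varphi}} \right),\]

so that only m = ±2 appears, the angular dependence behaves as \(\sin ^{|m|}\theta\) with ∣m∣ = 2 near the axis, and the radial dependence is \(r^\ell\) with ℓ = 2. Conversely, the function

\[f = \sqrt {x^2 + y^2} = r\sin \theta\]

is axisymmetric (m = 0) but contains an odd power of sin θ, which is not compatible with the conditions above: it is indeed not differentiable on the z-axis in Cartesian coordinates.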

If we go back to the evaluation of the Laplace operator (93), it is now clear that the result is always regular, at least for ℓ ≥ 2 and m ≥ 2. We detail the cases of ℓ = 0 and ℓ = 1, using the fact that spherical harmonics are eigenfunctions of the angular part of the Laplace operator (see Equation (103)). For ℓ = 0 the scalar field f is reduced to a Taylor series of only even powers of r, therefore the first derivative contains only odd powers and can be safely divided by r. Once decomposed on spherical harmonics, the angular part of the Laplace operator (93) acting on the ℓ = 1 component reads \(-2/r^2\), which is a problem only for the first term of the Taylor expansion. On the other hand, this term cancels with the contribution of the \({2 \over r}{\partial \over {\partial r}}\) term, providing a regular result. This is the general behavior of many differential operators in spherical coordinates: when applied to a regular field, the full operator gives a regular result, but single terms of this operator may give singular results when computed separately, the singularities canceling between two different terms.
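The cancellation for ℓ = 1 can be checked by hand on the simplest regular example, f = z = r cos θ (a test function chosen here for illustration). Using the standard form of the Laplace operator in spherical coordinates, the three contributions are

\[{{\partial ^2 f} \over {\partial r^2}} = 0,\qquad {2 \over r}{{\partial f} \over {\partial r}} = {{2\cos \theta} \over r},\qquad {1 \over {r^2}}\left({{{\partial ^2 f} \over {\partial \theta ^2}} + {1 \over {\tan \theta}}{{\partial f} \over {\partial \theta}}} \right) = {1 \over {r^2}}\left({- r\cos \theta - r\cos \theta} \right) = - {{2\cos \theta} \over r}.\]

The last two terms each diverge as 1/r at the origin, but their sum vanishes identically, in agreement with Δz = 0 in Cartesian coordinates.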

As this may seem an argument against the use of spherical coordinates, let us stress that spectral methods are very powerful in evaluating such operators, keeping everything finite. As an example, we use Chebyshev polynomials in ξ for the expansion of the field f(r = αξ), α being a positive constant. From the Chebyshev polynomial recurrence relation (46), one has

\[\forall n > 0,\quad {{T_{n + 1}(\xi) + T_{n - 1}(\xi)} \over \xi} = 2T_n(\xi), \tag{97}\]

which recursively gives the coefficients of

\[g(\xi) = {{f(\xi) - f(0)} \over \xi} \tag{98}\]

from those of f(ξ). The computation of this finite part g(ξ) is always a regular and linear operation on the vector of coefficients. Thus, the singular terms of a regular operator are never computed, but the result is a good one, as if the cancellation of such terms had occurred. Moreover, from the parity conditions it is possible to use only even or odd Chebyshev polynomials, which simplifies the expressions and saves computer time and memory. Of course, relations similar to Equation (97) exist for other families of orthonormal polynomials, as well as relations that divide by sin θ a function developed on a Fourier basis. The combination of spectral methods and spherical coordinates is thus a powerful tool for accurately describing regular fields and differential operators inside a sphere [44]. To our knowledge, this is the first reference showing that it is possible to solve PDEs with spectral methods inside a sphere, including the three-dimensional coordinate singularity at the origin.
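The division by ξ in coefficient space can be sketched as follows. This is a minimal illustration built on the Chebyshev recurrence \(2\xi T_n = T_{n+1} + T_{n-1}\), not the implementation of any particular code; the function name `divide_by_xi` and the test function are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb


def divide_by_xi(f_coeffs):
    """Chebyshev coefficients of g(xi) = (f(xi) - f(0)) / xi.

    Uses xi*T_0 = T_1 and xi*T_n = (T_{n+1} + T_{n-1}) / 2 for n >= 1.
    If g = sum_n b_n T_n and f = sum_k c_k T_k, matching the coefficient of
    T_k for k >= 1 gives c_1 = b_0 + b_2/2 and c_k = (b_{k-1} + b_{k+1})/2
    for k >= 2, solved here from the highest degree downward.
    The coefficient c_0 is never read, which removes the constant f(0).
    """
    c = np.asarray(f_coeffs, dtype=float)
    degree = len(c) - 1
    if degree == 0:
        return np.zeros(1)
    b = np.zeros(degree + 2)              # b[degree], b[degree+1] stay zero
    for k in range(degree, 1, -1):
        b[k - 1] = 2.0 * c[k] - b[k + 1]
    b[0] = c[1] - 0.5 * b[2]
    return b[:degree]


# Example: f(xi) = 3 + 2 xi + xi^3, so g(xi) = 2 + xi^2
f_coeffs = cheb.poly2cheb([3.0, 2.0, 0.0, 1.0])
g_coeffs = divide_by_xi(f_coeffs)
print(cheb.cheb2poly(g_coeffs))   # power-series coefficients of g
```

The routine only combines coefficients linearly and never evaluates f(ξ)/ξ on the grid, so nothing singular is computed at ξ = 0.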

3.2.2 Spherical harmonics

Spherical harmonics are the pure angular functions

where ℓ ≥ 0 and ∣m∣ ≤ ℓ. \(P_\ell ^m(\cos \theta)\) are the associated Legendre functions defined by

for m ≥ 0. The relation

gives the associated Legendre functions for negative m; note that the normalization factors can vary in the literature. This family of functions has two very important properties. First, they represent an orthogonal set of regular functions defined on the sphere; thus, any regular scalar field f(θ, φ) defined on the sphere can be decomposed into spherical harmonics

\[f(\theta, \varphi) = \sum\limits_{\ell = 0}^{+ \infty} {\sum\limits_{m = - \ell}^{\ell} {f_{\ell m}} \,Y_\ell ^m(\theta, \varphi)}. \tag{102}\]

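Since the harmonics are regular, they automatically take care of the coordinate singularity on the z-axis. Then, they are eigenfunctions of the angular part of the Laplace operator (noted here as Δθφ):

\[\Delta _{\theta \varphi}Y_\ell ^m(\theta, \varphi): = {{\partial ^2 Y_\ell ^m} \over {\partial \theta ^2}} + {1 \over {\tan \theta}}{{\partial Y_\ell ^m} \over {\partial \theta}} + {1 \over {\sin ^2 \theta}}{{\partial ^2 Y_\ell ^m} \over {\partial \varphi ^2}} = - \ell (\ell + 1)Y_\ell ^m(\theta, \varphi), \tag{103}\]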

the associated eigenvalues being −ℓ(ℓ + 1).

The first property makes the description of scalar fields on spheres very easy: spherical harmonics are used as a decomposition basis within spectral methods, for instance in geophysics or meteorology, and by some groups in numerical relativity [21, 109, 219]. However, they could be more broadly used in numerical relativity, for example for Cauchy-characteristic evolution or matching [228, 15], where a single coordinate chart on the sphere might help in matching quantities. They can also help to describe star-like surfaces being defined by r = h(θ, φ) as event or apparent horizons [152, 23, 1]. The search for apparent horizons is also made easier: since the function h verifies a two-dimensional Poisson-like equation, the linear part can be solved directly, just by dividing by −ℓ(ℓ + 1) in the coefficient space.
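The division by −ℓ(ℓ + 1) in coefficient space can be sketched in a simplified setting. The example below assumes axisymmetry (m = 0), so that the angular dependence is carried by Legendre polynomials \(P_\ell(\cos \theta)\) and normalization factors play no role; the function name and the source coefficients are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def solve_angular_poisson_axisym(source_coeffs):
    """Solve Delta_{theta,phi} h = s for axisymmetric h, s on the unit sphere.

    source_coeffs[l] multiplies P_l(cos theta). Each coefficient is divided
    by the eigenvalue -l(l+1); the l = 0 mode of h is undetermined and set
    to zero (a solution exists only if source_coeffs[0] vanishes).
    """
    s = np.asarray(source_coeffs, dtype=float)
    ell = np.arange(len(s))
    h = np.zeros_like(s)
    h[1:] = -s[1:] / (ell[1:] * (ell[1:] + 1))
    return h


# Hypothetical source with l = 1, 2, 3 components and no l = 0 part
s_coeffs = np.array([0.0, 1.0, -6.0, 0.5])
h_coeffs = solve_angular_poisson_axisym(s_coeffs)

# Check with x = cos(theta): Delta_{theta,phi} h = d/dx[(1 - x^2) dh/dx]
x = np.linspace(-0.9, 0.9, 7)
dh = leg.legder(h_coeffs)
flux = leg.legmul(leg.poly2leg([1.0, 0.0, -1.0]), dh)
residual = leg.legval(x, leg.legder(flux)) - leg.legval(x, s_coeffs)
print(np.max(np.abs(residual)))
```

The solve itself is a single element-wise division; the remaining lines only verify the result against the differential form of the angular operator.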

The second property makes the Poisson equation,

\[\Delta \phi (r,\theta, \varphi) = \sigma (r,\theta, \varphi), \tag{104}\]

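very easy to solve (see Section 1.3). If the source σ and the unknown ϕ are decomposed into spherical harmonics, the equation transforms into a set of ordinary differential equations for the coefficients (see also [109]):

\[\forall (\ell, m),\quad {{d^2 \phi _{\ell m}} \over {dr^2}} + {2 \over r}{{d\phi _{\ell m}} \over {dr}} - {{\ell (\ell + 1)} \over {r^2}}\phi _{\ell m} = \sigma _{\ell m}(r). \tag{105}\]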

Then, any ODE solver can be used for the radial coordinate: spectral methods, of course, (see Section 2.5), but others have been used as well (see, e.g., Bartnik et al. [20, 21]). The same technique can be used to advance in time the wave equation with an implicit scheme and Chebyshev-tau method for the radial coordinate [44, 157].
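A simple closed-form case illustrates the structure of these radial equations. Assuming the standard radial operator obtained from the Laplace operator and the eigenvalue −ℓ(ℓ + 1), and taking a hypothetical source coefficient equal to \(r^\ell\), a particular solution u(r) follows from

\[\left({{{d^2} \over {dr^2}} + {2 \over r}{d \over {dr}} - {{\ell (\ell + 1)} \over {r^2}}} \right)r^{\ell + 2} = \left[ {(\ell + 2)(\ell + 1) + 2(\ell + 2) - \ell (\ell + 1)} \right]r^\ell = (4\ell + 6)\,r^\ell,\]

that is, \(u(r) = r^{\ell + 2}/(4\ell + 6)\). The homogeneous solutions are \(r^\ell\) and \(r^{- (\ell + 1)}\); only the first one is compatible with the regularity condition at the origin discussed in Section 3.2.1, while the second one is the decaying solution relevant in an outer domain extending to infinity.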

The use of spherical-harmonics decomposition can be regarded as a basic spectral method, like Fourier decomposition. There are, therefore, publicly available “spherical harmonics transforms”, which consist of a Fourier transform in the φ-direction and a successive Fourier and Legendre transform in the θ-direction. A rather efficient one is the SpharmonicsKit/S2Kit [151], but writing one’s own functions is also possible [99].

3.2.3 Tensor components

All the discussion in Sections 3.2.1–3.2.2 has been restricted to scalar fields. For vector, or more generally tensor fields in three spatial dimensions, a vector basis (triad) must be specified to express the components. At this point, it is very important to stress that the choice of the basis is independent of the choice of coordinates. Therefore, the most straightforward and simple choice, even if one is using spherical coordinates, is the Cartesian triad \(\left({{{\bf{e}}_x} = {\partial \over {\partial x}},{{\bf{e}}_y} = {\partial \over {\partial y}},{{\bf{e}}_z} = {\partial \over {\partial z}}} \right)\). With this basis, from a numerical point of view, all tensor components can be regarded as scalars and therefore, a regular tensor can be defined as a tensor field, whose components with respect to this Cartesian frame are expandable in powers of x, y and z (as in Bardeen and Piran [19]). Manipulations and solutions of PDEs for such tensor fields in spherical coordinates are generalizations of the techniques for scalar fields. In particular, when using the multidomain approach with domains having different shapes and coordinates, it is much easier to match Cartesian components of tensor fields. Examples of use of Cartesian components of tensor fields in numerical relativity include the vector Poisson equation [109] or, more generally, the solution of elliptic systems arising in numerical relativity [171]. In the case of the evolution of the unconstrained Einstein system, the use of Cartesian tensor components is the general option, as is done by the Caltech/Cornell group [127, 188].

The use of an orthonormal spherical basis \(\left({{{\bf{e}}_r} = {\partial \over {\partial r}},{{\bf{e}}_\theta} = {1 \over r}{\partial \over {\partial \theta}},{{\bf{e}}_\varphi} = {1 \over {r\sin \theta}}{\partial \over {\partial \varphi}}} \right)\) (see Figure 19) requires more care. The interested reader can find more details in the work of Bonazzola et al. [44, 37]. Nevertheless, there are systems in general relativity in which spherical components of tensors can be useful:

-

When doing excision for the simulation of black holes, the boundary conditions on the excised sphere for elliptic equations (initial data) may be better formulated in terms of spherical components for the shift or the three-metric [62, 104, 123]. In particular, the component that is normal to the excised surface is easily identified with the radial component.

-

Still, in the 3+1 approach, the extraction of gravitational radiation in the wave zone is made easier if the perturbation to the metric is expressed in spherical components, because the transverse part is then straightforward to obtain [218].